The latest innovations in AI-based tech are pushing the boundaries of what we once thought was possible with AI. However, with chatbots like ChatGPT and Bing Chat getting nearly as good as humans at several things, is it time to hit the brakes for a while?

Elon Musk and several AI researchers are among the 1,188 people (at the time of writing) who think so. A letter published by the non-profit Future of Life Institute calls for a six-month pause on training AI technologies more powerful than GPT-4, but is a pause really required?

What Is the Future of Life Open Letter All About?

The letter published by the Future of Life Institute points out that AI labs have been "locked in an out-of-control race" to develop and deploy more and more powerful AI models that no one, including their creators, can "understand, predict, or reliably control".

It also points out that contemporary AI systems are now becoming human-competitive at general tasks and asks whether we should develop "nonhuman minds that might eventually outnumber, outsmart, obsolete and replace us".

The letter ultimately calls on all AI labs to immediately pause the training of AI systems more powerful than GPT-4 for at least six months. The pause should be public, verifiable, and include all key actors. It also states that if such a pause cannot be enacted quickly, governments should step in to prohibit AI model training temporarily.

Once the pause is active, AI labs and independent experts are asked to use it to jointly develop and implement a set of "shared safety protocols" to ensure that systems adhering to these rules are "safe beyond a reasonable doubt".

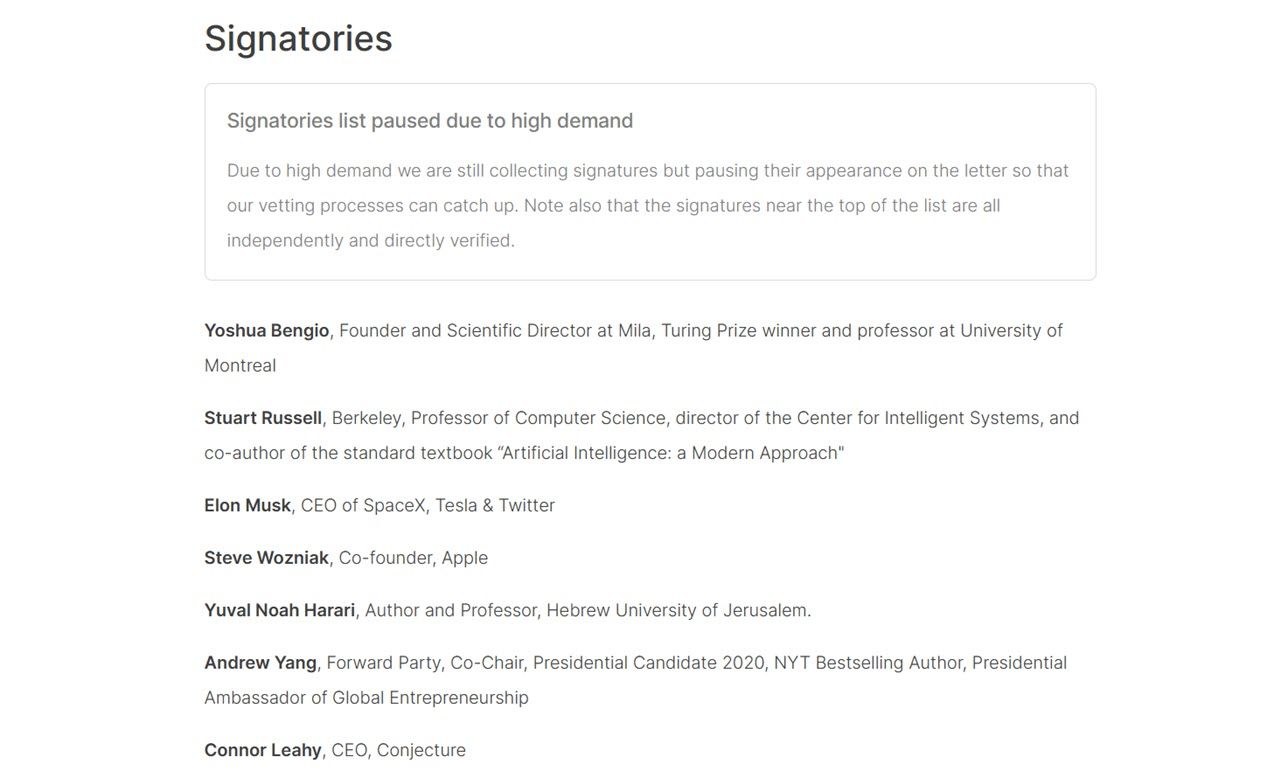

The letter has been signed by several well-known figures, including Elon Musk, Steve Wozniak, and a number of AI researchers and authors. In fact, the addition of new signatures is currently paused due to high demand.

What Are Musk's Concerns With Advanced AI Tech and OpenAI?

While signing Future of Life's letter might indicate that Musk is concerned about the safety risks such advanced AI systems pose, the real reason might be something else.

Musk co-founded OpenAI with current CEO Sam Altman back in 2015 as a non-profit. However, he butted heads with Altman in 2018 because he was unhappy with the company's progress. Musk reportedly wanted to take over to speed up development, but Altman and the OpenAI board shot down the idea.

Musk walked away from OpenAI shortly after and took his money with him, breaking his promise to contribute $1 billion in funding and giving only $100 million before leaving. This forced OpenAI to set up a for-profit arm in March 2019 to raise the funding it needed to continue its research.

Another reason Musk left was that AI development at Tesla would create a conflict of interest in the future. It's common knowledge that Tesla needs advanced AI systems to power its Full Self-Driving features. Since Musk left OpenAI, the company has run away with its AI models, launching the GPT-3.5-powered ChatGPT in 2022 and following up with GPT-4 in March 2023.

The fact that Musk's AI team is nowhere close to OpenAI's needs to be taken into account every time he says that modern AI models could pose risks. He also had no problem rolling out the Tesla Full Self-Driving beta on public roads, essentially turning regular Tesla drivers into beta testers.

It doesn't end there, either. Musk has also been rather critical of OpenAI on Twitter, and Altman went as far as to say, during a recent appearance on the "On with Kara Swisher" podcast, that Musk is attacking the company.

At the moment, it seems like Musk is not actually concerned about the potential dangers these AI models pose. Instead, he appears to be using the Future of Life letter to halt development at OpenAI, and at any other firm closing in on GPT-4, to give his own companies a chance to catch up. Note that the letter only asks for a six-month pause on the "training" of AI systems, a restriction that can be sidestepped relatively easily to continue developing them in the meantime.

Is a Pause Really Needed?

The need for a pause depends on the state of AI models going forward. The letter is definitely a bit dramatic in tone, and we're not at risk of losing control of our civilization to AI, as it so openly states. That said, AI technologies do pose a few threats.

Provided that OpenAI and other AI labs can put better safety checks in place, a pause would do more harm than good. However, the letter's suggestion of a set of shared safety protocols that are "rigorously audited and overseen by independent outside experts" does sound like a good idea.

With tech giants like Google and Microsoft pouring billions into developing AI and integrating it into their products, it is unlikely that the letter will affect the current pace of AI development.

AI Is Here to Stay

With tech giants pushing AI integration in their products further and putting billions of dollars into research and development, AI is here to stay, regardless of what the opposition says.

But it's not a bad idea to implement safety measures to prevent these technologies from going off the rails and potentially turning into tools for harm.