Google's TensorFlow platform allows its users to train an AI by providing tools and resources for machine learning. For a long time, AI engineers have used traditional CPUs and GPUs to train AI. Although these processors can handle various machine learning processes, they are still general-purpose hardware used for various everyday tasks.

To speed up AI training, Google developed an Application Specific Integrated Circuit (ASIC) known as a Tensor Processing Unit (TPU). But what is a Tensor Processing Unit, and how does it speed up AI programming?

What Are Tensor Processing Units (TPU)?

Tensor Processing Units are Google's ASICs for machine learning. They are used specifically in deep learning, where they perform complex matrix and vector operations at ultra-high speeds, but they must be paired with a CPU that issues the instructions for them to execute. TPUs are designed for use with Google's TensorFlow or TensorFlow Lite platform, whether through cloud computing or, with the lite version, on local hardware.
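To make "matrix and vector operations" concrete, here is a minimal pure-Python sketch of the computation at the heart of a dense neural-network layer: a weight matrix multiplied by an input vector, plus a bias. The function name `dense_layer` and the sample numbers are illustrative assumptions, not part of any Google API. A TPU does this same kind of multiply-and-accumulate work in hardware, on far larger matrices.

```python
def dense_layer(weights, inputs, bias):
    """Compute weights @ inputs + bias for one dense layer (no activation)."""
    outputs = []
    for row, b in zip(weights, bias):
        # Each output is a dot product: a chain of multiply-accumulate steps,
        # the kind of work a matrix accelerator performs in parallel.
        acc = b
        for w, x in zip(row, inputs):
            acc += w * x
        outputs.append(acc)
    return outputs


weights = [[1, 2, 3],
           [4, 5, 6]]   # 2 outputs, 3 inputs (made-up values)
inputs = [1, 0, 2]
bias = [10, 20]

result = dense_layer(weights, inputs, bias)
print(result)  # [17, 36]
```

Even this tiny layer needs six multiplications and six additions. Real models repeat the pattern billions of times, which is why hardware built around it pays off.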

Applications for TPUs

Google has used TPUs since 2015. The company has confirmed that it uses these processors for Google Street View text processing, Google Photos, and Google Search results (RankBrain). TPUs also powered AlphaGo, the AI that has beaten top Go players, and AlphaZero, the system that won against leading programs in chess, Go, and shogi.

TPUs can be used in various deep learning applications such as fraud detection, computer vision, natural language processing, self-driving cars, vocal AI, agriculture, virtual assistants, stock trading, e-commerce, and various social predictions.

When to Use TPUs

Since TPUs are highly specialized hardware for deep learning, they lose a lot of the functions you would typically expect from a general-purpose processor like a CPU. With this in mind, there are specific scenarios where using TPUs will yield the best results when training AI.

TPUs work best for models that rely heavily on matrix computations, such as recommendation systems for search engines. They also yield great results for models that analyze massive amounts of data and would otherwise take weeks or months to train. AI engineers also turn to TPUs when they have no custom TensorFlow models to work from and have to start from scratch.

When Not to Use TPUs

As stated earlier, the optimization of TPUs means these processors only work well on specific workloads. Therefore, there are instances where opting for a traditional CPU or GPU will yield faster results. These instances include:

- Rapid prototyping with maximum flexibility

- Models limited by the available data points

- Models that are simple and can be trained quickly

- Models too onerous to change

- Models reliant on custom TensorFlow operations written in C++

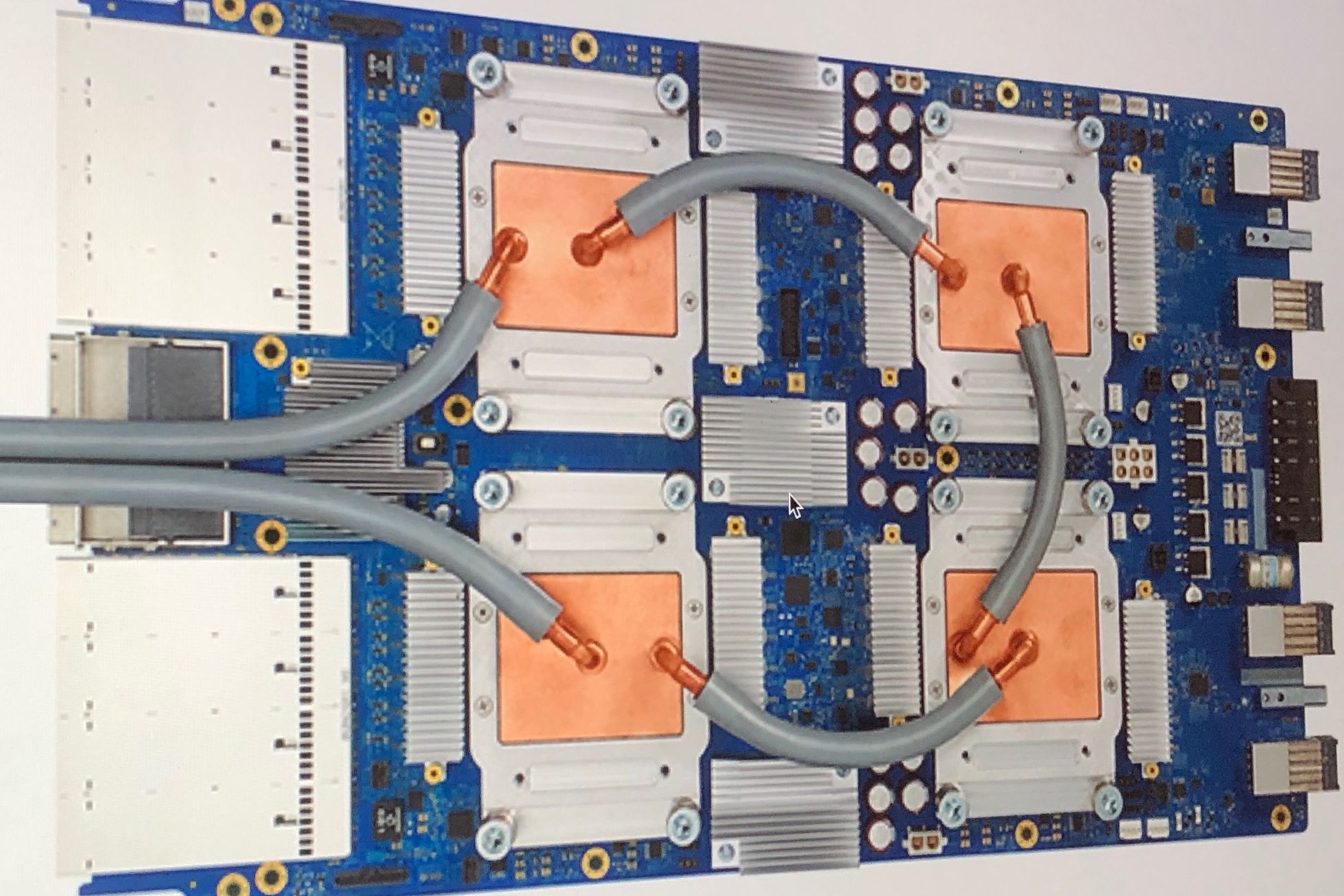

TPU Versions and Specifications

Since Google announced its TPUs, the public has been continually updated about the latest versions of TPUs and their specifications. The following is a list of all the TPU versions with specifications:

| | TPUv1 | TPUv2 | TPUv3 | TPUv4 | Edge v1 |
|---|---|---|---|---|---|
| Date Introduced | 2016 | 2017 | 2018 | 2021 | 2018 |
| Process Node (nm) | 28 | 16 | 16 | 7 | |
| Die Size (mm²) | 331 | <625 | <700 | <400 | |
| On-chip Memory (MiB) | 28 | 32 | 32 | 144 | |
| Clock Speed (MHz) | 700 | 700 | 940 | 1050 | |
| Smallest Memory Configuration (GB) | 8 DDR3 | 16 HBM | 32 HBM | 32 HBM | |
| TDP (Watts) | 75 | 280 | 450 | 175 | 2 |
| TOPS (Tera Operations Per Second) | 23 | 45 | 90 | ? | 4 |
| TOPS/W | 0.3 | 0.16 | 0.2 | ? | 2 |

As you can see, TPU clock speeds don't seem all that impressive, especially when modern desktop computers can have clock speeds three to five times faster. But if you look at the bottom two rows of the table, you can see that TPUv1 through TPUv3 process 23 to 90 tera-operations per second while delivering 0.16 to 0.3 tera-operations per second for every watt of power they draw. TPUs are estimated to be 15 to 30 times faster than contemporary CPUs and GPUs at neural network inference.
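The TOPS/W row is simply TOPS divided by TDP, so you can check it yourself. The short sketch below redoes that arithmetic with the figures from the table. The dictionary layout and the helper name `tops_per_watt` are just illustrative choices, and TPUv4 is left out because its TOPS figure is not listed.

```python
# (TOPS, TDP in watts) copied from the table; TPUv4 is omitted because
# its TOPS value is unknown there.
specs = {
    "TPUv1": (23, 75),
    "TPUv2": (45, 280),
    "TPUv3": (90, 450),
    "Edge TPU": (4, 2),
}


def tops_per_watt(tops, tdp_watts):
    """Efficiency: tera-operations per second delivered per watt drawn."""
    return tops / tdp_watts


efficiency = {
    name: round(tops_per_watt(tops, watts), 2)
    for name, (tops, watts) in specs.items()
}

for name, value in efficiency.items():
    print(f"{name}: {value} TOPS/W")
```

TPUv1 works out to about 0.31, which the table rounds to 0.3. The Edge TPU's 2 TOPS/W shows that the low-power chip is the most efficient of the group, even though its raw throughput is the lowest.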

With each version released, newer TPUs show significant improvements and capabilities. Here are a few highlights for each version.

- TPUv1: The first publicly announced TPU. It was designed as an 8-bit matrix multiplication engine and is limited to integer operations.

- TPUv2: Engineers noted that TPUv1 was limited in bandwidth, so this version has double the memory bandwidth along with 16GB of RAM. It can also handle floating-point calculations, making it useful for both training and inference.

- TPUv3: Released in 2018, TPUv3 has processors twice as powerful as TPUv2's and is deployed with four times as many chips. Together, these upgrades give this version up to eight times the performance of the previous generation.

- TPUv4: The latest version of the TPU at the time of writing, announced on May 18, 2021. Google's CEO announced that this version would have more than twice the performance of TPUv3.

- Edge TPU: This version is meant for smaller operations and is optimized to use less power than the other TPU versions. Although it uses only two watts of power, the Edge TPU can perform up to four tera-operations per second. It is found in small devices such as Google's Pixel 4 smartphone and the Coral prototyping kit.
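To see what an integer-only, 8-bit engine like TPUv1 implies, here is a minimal sketch of how floating-point weights and inputs can be mapped to 8-bit integers, multiplied using integer math alone, and rescaled once at the end. This is a generic illustration of quantization, not Google's actual scheme. The scale factors, function names, and sample values are all assumptions chosen to keep the arithmetic easy to follow.

```python
def quantize(values, scale):
    """Map floats to signed 8-bit integers: round(v / scale), clamped."""
    return [max(-128, min(127, round(v / scale))) for v in values]


def int_dot(a, b):
    """Dot product using only integer multiplies and adds."""
    return sum(x * y for x, y in zip(a, b))


weights = [0.5, -1.0, 0.3]   # made-up model weights
inputs = [2.0, 1.0, 4.0]     # made-up input values
w_scale = 1 / 64             # hypothetical scale for the weights
x_scale = 1 / 16             # hypothetical scale for the inputs

qw = quantize(weights, w_scale)   # [32, -64, 19]
qx = quantize(inputs, x_scale)    # [32, 16, 64]

# All multiply-accumulate work happens on integers; floats return only
# in the single rescaling step at the end.
approx = int_dot(qw, qx) * w_scale * x_scale
exact = sum(w * x for w, x in zip(weights, inputs))

print(approx, exact)  # 1.1875 versus roughly 1.2
```

The small gap between the two results is the price of 8-bit precision. That loss is often acceptable when running a trained model, but training generally needs the floating-point support that arrived with TPUv2.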

How Do You Access TPUs? Who Can Use Them?

TPUs are proprietary processing units designed by Google to be used with its TensorFlow platform. Third-party access to these processors has been allowed since 2018. Today, TPUs (except for Edge TPUs) can only be accessed through Google's cloud computing services, while Edge TPU hardware can be bought in the form of Google's Pixel 4 smartphone or its prototyping kit, known as Coral.

The Coral kit is a USB accelerator that uses USB 3.0 Type-C for data and power. It gives your device Edge TPU computing capable of 4 TOPS on 2W of power. The kit can run on machines using Windows 10, macOS, and Debian Linux (it also works with Raspberry Pi).
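For the cloud route, TensorFlow code usually has to connect to the TPU before training starts. The sketch below shows one common pattern, assuming TensorFlow 2.x. The helper name `connect_to_tpu` is hypothetical, the exact resolver arguments depend on your environment, and the code falls back to the default CPU/GPU strategy when no TPU is reachable, so treat it as a starting point and not a definitive setup.

```python
def connect_to_tpu():
    """Return (strategy, description), falling back when no TPU is reachable."""
    try:
        import tensorflow as tf
    except ImportError:
        return None, "TensorFlow is not installed"

    try:
        # With no arguments the resolver tries to detect a TPU from the
        # environment; on a Cloud TPU VM you may need to pass tpu="local".
        resolver = tf.distribute.cluster_resolver.TPUClusterResolver()
        tf.config.experimental_connect_to_cluster(resolver)
        tf.tpu.experimental.initialize_tpu_system(resolver)
        return tf.distribute.TPUStrategy(resolver), "TPU"
    except Exception:
        # Broad catch on purpose: the error raised when no TPU is found
        # differs between environments.
        return tf.distribute.get_strategy(), "default (CPU/GPU)"


strategy, description = connect_to_tpu()
print("Running on:", description)
```

If a strategy is returned, build and compile the model inside `with strategy.scope():` so that its variables are placed on whichever device was found.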

Other Specialized AI Accelerators

With artificial intelligence being all the rage for the past decade, Big Tech is constantly looking for ways to make machine learning as fast and efficient as possible. Although Google's TPUs are arguably the most popular ASIC developed for deep learning, other tech companies like Intel, Microsoft, Alibaba, and Qualcomm have also developed their own AI accelerators. These include the Microsoft Brainwave, Intel Neural Compute Stick, and Graphcore's IPU (Intelligence Processing Unit).

But while more AI hardware is being developed, most of it has yet to reach the market, and much of it never will. As of writing, if you really want to buy AI accelerator hardware, the most popular options are a Coral prototyping kit, an Intel NCS, a Graphcore Bow Pod, or an Asus IoT AI Accelerator. If you just want access to specialized AI hardware, you can use Google's cloud computing services or alternatives like Microsoft Brainwave.