Have you ever wondered why a program you wrote took so long to run? Perhaps you would like to know if you can make your code more efficient. Understanding how code runs can bring your code to the next level. Big-O notation is a handy tool to calculate how efficient your code really is.

What Is Big-O Notation?

Big-O notation gives you a way to calculate how long it will take to run your code. You can physically time how long your code takes to run, but with that method, it is hard to catch small time differences. For example, the time it takes between running 20 and 50 lines of code is very small. However, in a large program, those inefficiencies can add up.

Big-O notation counts how many steps an algorithm must execute to gauge its efficiency. Approaching your code in this manner can be very effective if you need to tune your code to increase efficiency. Big-O notation will enable you to measure different algorithms by the number of steps it requires to run and objectively compare the algorithms' efficiency.

How Do You Calculate Big-O Notation

Let's consider two functions that count how many individual socks are in a drawer. Each function takes the number of pairs of socks and returns the number of individual socks. The code is written in Python, but that does not affect how we would count the number of steps.

Algorithm 1:

def sockCounter(numberOfPairs):

individualSocks = 0

for x in range(numberOfPairs):

individualSocks = individualSocks + 2

return individualSocks

Algorithm 2:

def sockCounter(numberOfPairs):

return numberOfPairs * 2

This is a silly example, and you should be able to easily tell which algorithm is more efficient. But for practice, let's run through each.

Algorithm 1 has many steps:

- It assigns a value of zero to the variable individualSocks.

- It assigns a value of one to the variable i.

- It compares the value of i to numberOfPairs.

- It adds two to individualSocks.

- It assigns the increased value of individualSocks to itself.

- It increments i by one.

- It then loops back through steps 3 to 6 for the same number of times as (indiviualSocks - 1).

The number of steps we have to complete for algorithm one can be expressed as:

4n + 2

There are four steps that we have to complete n times. In this case, n would equal the value of numberOfPairs. There are also 2 steps that are completed once.

In comparison, algorithm 2 just has one step. The value of numberOfPairs is multiplied by two. We would express that as:

1

If it wasn't already apparent, we can now easily see that algorithm 2 is more efficient by quite a bit.

Big-O Analysis

Generally, when you are interested in the Big-O notation of an algorithm, you are more interested in the overall efficiency and less so in the fine-grain analysis of the number of steps. To simplify the notation, we can just state the magnitude of the efficiency.

In the examples above, algorithm 2 would be expressed as one:

O(1)

But algorithm 1 would be simplified as:

O(n)

This quick snapshot tells us how the efficiency of algorithm one is tied to the value of n. The larger the number the more steps the algorithm will need to complete.

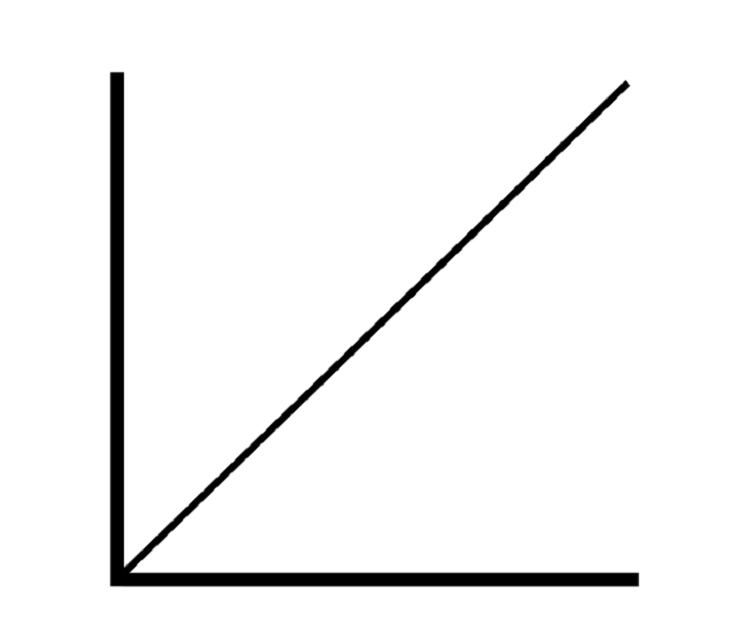

Linear Code

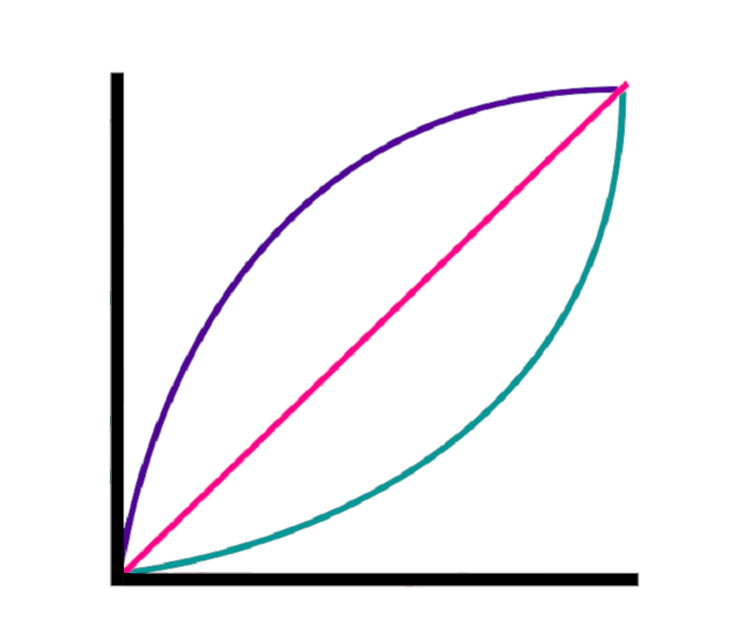

Because we do not know the value of n, it is more helpful to think about how the value of n affects the amount of code that needs to run. In algorithm 1 we can say that the relationship is linear. If you plot the number of steps vs. the value of n you get a straight line that goes up.

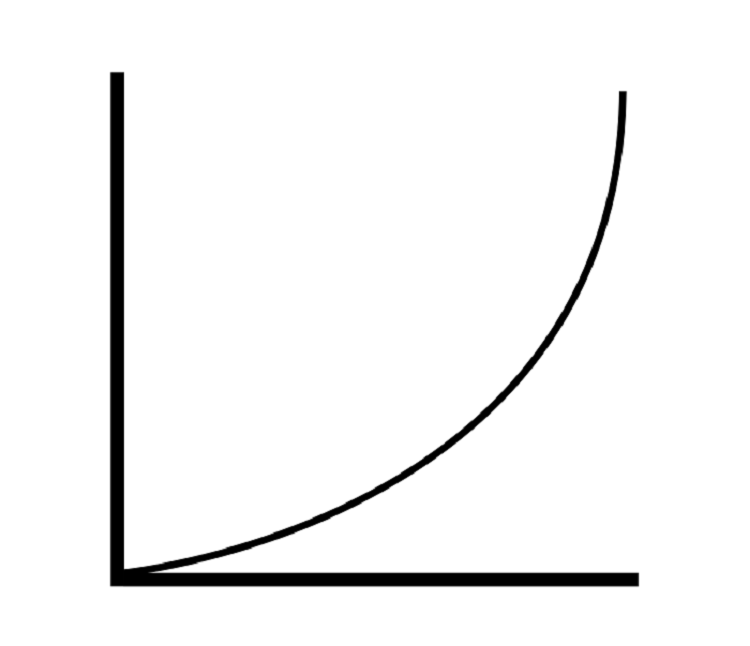

Quadratic Code

Not all relationships are as simple as the linear example. Imagine you have a 2D array and you would like to search for a value in the array. You might create an algorithm like this:

def searchForValue(targetValue, arraySearched):

foundTarget = False

for x in arraySearched:

for y in x:

if(y == targetValue):

foundTarget = True

return foundTarget

In this example, the number of steps depends on the number of arrays in arraySearched and the number of values in each array. So, the simplified number of steps would n * n or n².

This relationship is a quadratic relationship, which means that the number of steps in our algorithm grows exponentially with n. In Big-O notation, you would write it as:

O(n²)

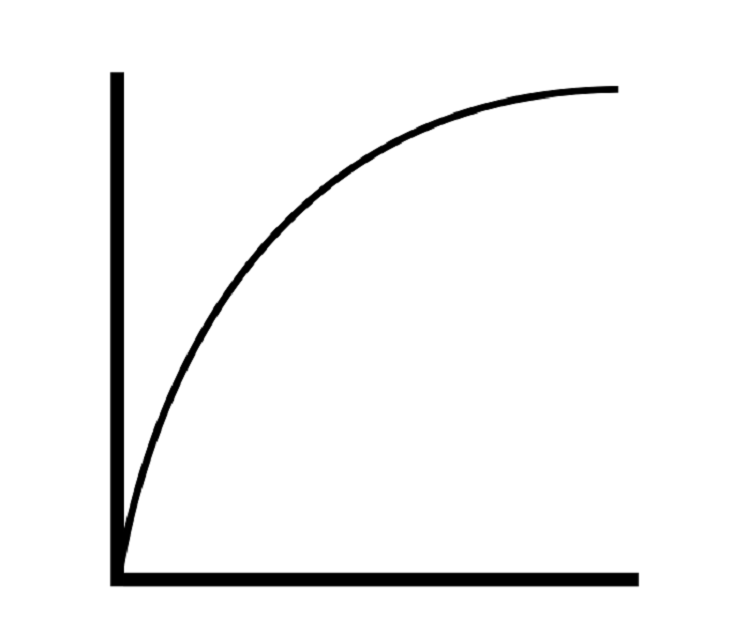

Logarithmic Code

Although there are many other relationships, the last relationship we will look at is logarithmic relationships. To refresh your memory, the log of a number is the exponent value required to reach a number given a base. For example:

log 2 (8) = 3

The log equals three because if our base was 2, we would need an exponent value of 3 to get to the number 8.

So, the relationship of a logarithmic function is the opposite of an exponential relationship. As n increases, fewer new steps are required to run the algorithm.

At a first glance, this seems counter-intuitive. How can a algorithm's steps grow slower than n? A good example of this is binary searches. Let's consider an algorithm to search for a number in an array of unique values.

- We will start with an array to search that is in order of smallest to largest.

- Next, we will check the value in the middle of the array.

- If your number is higher, we will exclude the lower numbers in our search and if the number was lower, we will exclude the higher numbers.

- Now, we will look at the middle number of the remaining numbers.

- Again, we will exclude half the numbers based on whether our target value is higher or lower than the middle value.

- We will continue this process until we find our target, or determine that it is not in the list.

As you can see, since binary searches eliminate half of the possible values every pass, as n gets larger, the effect on the number of times we check the array is barely affected. To express this in Big-O notation, we would write:

O(log(n))

The Importance of Big-O Notation

Big-O nation gives you a quick and easy way to communicate how efficient an algorithm is. This makes it easier to decide between different algorithms. This can be particularly helpful if you are using an algorithm from a library and do not necessarily know what the code looks like.

When you first learn to code, you begin with linear functions. As you can see from the graph above, that will get you very far. But as you become more experienced and begin to build more complex code, efficiency begins to become a problem. An understanding of how to quantify the efficiency of your code will give you the tools you need to begin tuning it for efficiency and weighing the pros and cons of algorithms.