Imaging technology has advanced drastically in the last few decades to the point that we’re able to take high-quality photographs without needing expensive or hard-to-learn equipment. Much of this is due to advances in imaging sensors.

Imaging sensors can differ in many ways that relate to their function. The main categories are sensor structure, chroma type, shutter type, resolution, frame rate, pixel size, and sensor format.

This article covers the basic technology behind how the most common imaging sensors capture photographs.

Capturing Light

An imaging sensor converts incoming light (in the form of photons) into an electrical signal, which can then be processed into an image on a screen. The two main types of sensors are CCD (charge-coupled device) and CMOS (complementary metal-oxide-semiconductor).

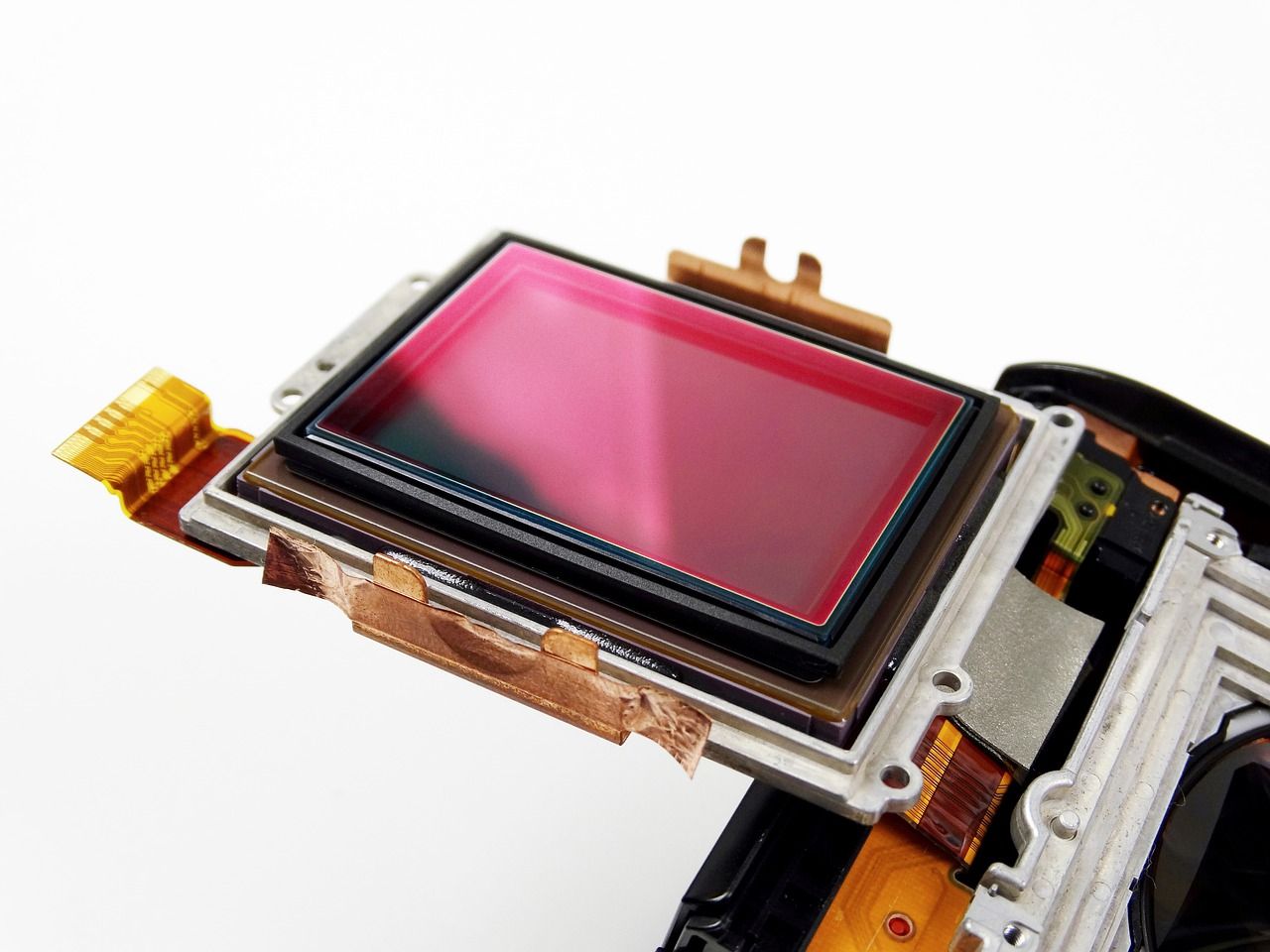

CCD and CMOS sensors are made up of a silicon wafer divided into an array of photosites (light-sensitive regions). A sensor can contain millions of these photosites, each built around a photodiode that captures light and converts it into an electrical signal.

Each photosite in a CCD sensor is actually an analog device. The photodiode captures light and immediately stores it as an electrical charge. This charge is then shifted from photosite to photosite until it reaches a readout device (known as a shift register). There, a capacitor converts the charge to a voltage, which an analog-to-digital converter (ADC) turns into a digital signal.
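To make the CCD readout chain more concrete, here is a toy Python model: charge packets move down the columns into a serial shift register, leave it one at a time, and pass through a single charge-to-voltage stage and ADC that every pixel shares. It is a minimal sketch assuming an ideal sensor with no noise or transfer losses; the conversion gain, ADC bit depth, input range, and all function names are hypothetical values chosen for illustration.

```python
# Toy model of CCD readout. Assumes an ideal sensor (no noise, no transfer
# losses). The gain, ADC depth, and input range below are hypothetical.

CONVERSION_GAIN_UV_PER_E = 5.0  # hypothetical: microvolts per electron at the output node
ADC_BITS = 12                   # hypothetical ADC resolution
FULL_SCALE_UV = 20_000.0        # hypothetical ADC input range, in microvolts


def adc(voltage_uv: float) -> int:
    """Quantize a voltage to an integer code, clipping at the ADC's range."""
    max_code = 2**ADC_BITS - 1
    code = round(voltage_uv / FULL_SCALE_UV * max_code)
    return max(0, min(max_code, code))


def ccd_readout(charges: list[list[float]]) -> list[list[int]]:
    """Read a 2D grid of charge packets (in electrons) out one pixel at a time.

    Each vertical shift moves the bottom row into the serial (horizontal)
    shift register; the register then shifts its packets one by one into a
    single charge-to-voltage stage and ADC shared by every pixel.
    """
    rows = [list(r) for r in charges]  # copy so the caller's data is untouched
    image = []
    while rows:
        serial_register = rows.pop()  # vertical shift: bottom row enters the register
        digital_row = []
        while serial_register:
            electrons = serial_register.pop()  # packet nearest the output node
            volts_uv = electrons * CONVERSION_GAIN_UV_PER_E  # charge -> voltage
            digital_row.append(adc(volts_uv))  # voltage -> digital number
        digital_row.reverse()  # packets left right-to-left; restore column order
        image.append(digital_row)
    image.reverse()  # rows left bottom-first; restore top-to-bottom order
    return image


print(ccd_readout([[0, 1000], [2000, 4000]]))  # prints [[0, 1024], [2048, 4095]]
```

The single output stage is the point of the sketch: every pixel's charge must travel through the array to reach it, which is also why charge "leaking" during transfer can show up as a streak.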

Because of how CCD sensors work, they are vulnerable to two kinds of artifacts: smearing and blooming. Smearing occurs under high light intensity and appears as a bright vertical line in the image.

This occurs because CCD sensors use vertical transfer registers, shifting charge signals vertically to the readout device. The charge can “leak” into this vertical transfer register and create the appearance of a bright line in the final image.

Blooming occurs when the light entering a particular pixel exceeds its saturation level. The pixel cannot hold any more charge, so the excess spills into neighboring pixels (most often along the same column). This reduces the accuracy of those neighboring pixels and creates image artifacts.
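Blooming can be illustrated with a simple overflow model. The Python sketch below works on a single column of pixels, caps each pixel at a hypothetical full-well capacity, and pushes half of any excess charge upward and half downward until it finds room; charge that runs off the end of the column is discarded. The one-column geometry, the even split, and the `FULL_WELL` value are simplifying assumptions, not a model of any particular sensor.

```python
FULL_WELL = 10_000  # hypothetical saturation level, in electrons


def bloom_column(signal: list[float], full_well: float = FULL_WELL) -> list[float]:
    """Clip each pixel at full_well and spread the overflow along the column."""
    col = [min(v, full_well) for v in signal]  # no pixel can hold more than full_well
    for i, v in enumerate(signal):
        excess = v - full_well
        if excess <= 0:
            continue
        # Assumption: the overflow splits evenly upward (-1) and downward (+1).
        for step in (-1, 1):
            remaining = excess / 2
            j = i + step
            while remaining > 0 and 0 <= j < len(col):
                take = min(full_well - col[j], remaining)  # fill the neighbor's free capacity
                col[j] += take
                remaining -= take
                j += step  # saturated neighbors pass the excess further along
            # Any charge still remaining here has run off the end of the column.
    return col


print(bloom_column([0, 0, 30_000, 0, 0]))  # prints [0, 10000, 10000, 10000, 0]
```

One very bright pixel ends up saturating its neighbors too, which is the streak-like artifact described above.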

In comparison, a CMOS sensor is a digital system at its core. A CMOS sensor converts light into charge, and charge into a voltage, at the photosite itself. This is possible because each photosite has its own transistors (including a switch), which allow each pixel's signal to be amplified individually. These voltages are then read out in parallel rather than being shifted across the chip one charge packet at a time.

Due to this structure, CMOS sensors are typically smaller, cheaper, and easier to produce. CMOS sensors also use less power, don’t suffer from smearing (because they don’t have vertical shift registers), and are far less prone to blooming (because they have much higher pixel saturation levels).

Chroma Type

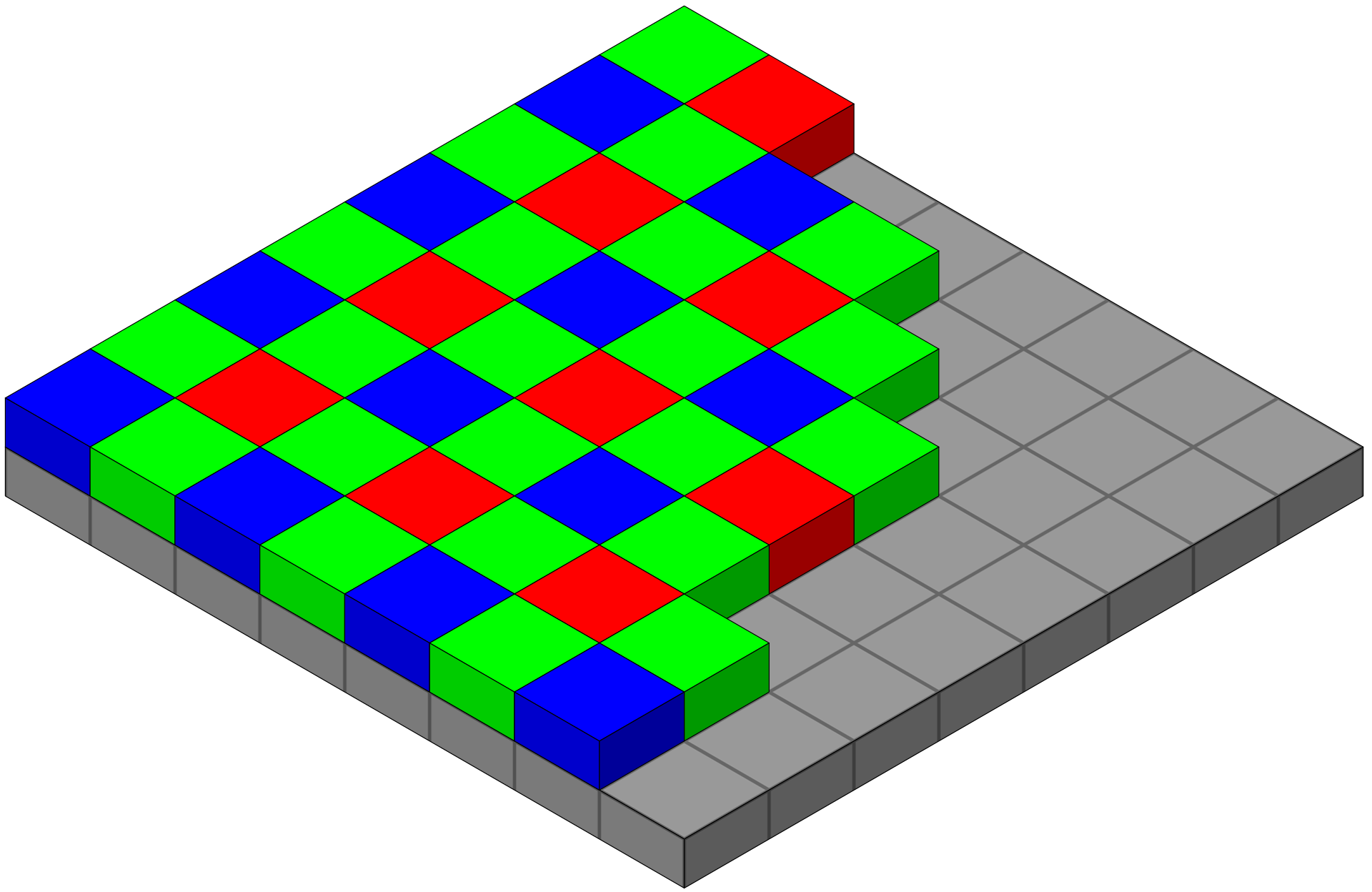

The chroma type of an imaging sensor refers to whether it’s a monochromatic or color sensor. In a monochromatic sensor, each pixel absorbs light across all visible wavelengths, so there’s no differentiation between colors.

Color sensors have an additional layer called a “color layer” (often a Bayer filter mosaic) that lets only certain colors of light through. A Bayer filter contains red, green, and blue filters, with twice as many green filters as red or blue because the human eye is most sensitive to green. As a result, each pixel of the sensor records the intensity of just one color.
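The Bayer layout is easy to express in code. This Python sketch assumes the common RGGB arrangement (other orderings such as BGGR exist); the function names are hypothetical. `bayer_color` reports which filter sits over a photosite, and `mosaic` simulates what the sensor records from a full-color scene: one value per pixel.

```python
from collections import Counter


def bayer_color(row: int, col: int) -> str:
    """Filter color over photosite (row, col), assuming an RGGB Bayer layout."""
    if row % 2 == 0:
        return "R" if col % 2 == 0 else "G"
    return "G" if col % 2 == 0 else "B"


def mosaic(rgb_image: list[list[tuple[int, int, int]]]) -> list[list[int]]:
    """Keep only the channel that each photosite's filter lets through."""
    channel = {"R": 0, "G": 1, "B": 2}
    return [
        [pixel[channel[bayer_color(r, c)]] for c, pixel in enumerate(row)]
        for r, row in enumerate(rgb_image)
    ]


counts = Counter(bayer_color(r, c) for r in range(4) for c in range(4))
print(counts)  # 8 green sites versus 4 red and 4 blue in a 4x4 patch
```

Counting the filters over a small patch confirms the two-to-one ratio of green to red or blue.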

The information from the sensor is then run through an algorithm (a process known as demosaicing) to determine each pixel’s true color, since each pixel records only one of the three colors. The algorithm analyzes information from neighboring pixels to estimate the two missing color values at each pixel.
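Real cameras use proprietary and often sophisticated demosaicing algorithms; the simplest textbook approach is bilinear interpolation. The Python sketch below is a minimal version of that technique, assuming the same RGGB layout as above and an image of at least 2x2 pixels: it keeps the color each photosite measured and fills in each missing color by averaging the same-colored photosites in the surrounding 3x3 neighborhood. The function names are hypothetical.

```python
def bayer_color(row: int, col: int) -> str:
    """Filter color over photosite (row, col), assuming an RGGB Bayer layout."""
    if row % 2 == 0:
        return "R" if col % 2 == 0 else "G"
    return "G" if col % 2 == 0 else "B"


def demosaic_bilinear(raw: list[list[float]]) -> list[list[tuple]]:
    """Estimate full RGB at every photosite of an RGGB mosaic (at least 2x2)."""
    h, w = len(raw), len(raw[0])
    rgb = []
    for r in range(h):
        out_row = []
        for c in range(w):
            pixel = []
            for color in "RGB":
                if bayer_color(r, c) == color:
                    pixel.append(raw[r][c])  # this color was measured directly
                    continue
                # Average the same-colored photosites in the 3x3 neighborhood
                # (clipped at the image border). Any 2x2 block of a Bayer
                # mosaic contains every color, so this list is never empty.
                samples = [
                    raw[rr][cc]
                    for rr in range(max(0, r - 1), min(h, r + 2))
                    for cc in range(max(0, c - 1), min(w, c + 2))
                    if bayer_color(rr, cc) == color
                ]
                pixel.append(sum(samples) / len(samples))
            out_row.append(tuple(pixel))
        rgb.append(out_row)
    return rgb


# A flat orange patch: every R site reads 200, G sites 100, B sites 50.
flat = {"R": 200, "G": 100, "B": 50}
raw = [[flat[bayer_color(r, c)] for c in range(4)] for r in range(4)]
print(demosaic_bilinear(raw)[1][2])  # the full color is recovered at every pixel
```

On a flat patch this recovers the original color exactly; around fine detail and sharp edges, simple averaging is where demosaicing errors (such as false color) come from.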

To achieve even greater color accuracy, some modern cameras use a process called “pixel shift.” Pixel shift works by taking multiple exposures and moving the sensor very slightly between them (typically by one pixel, or by half a pixel in some high-resolution modes), so light from the same point in the scene lands on differently colored photosites. Depending on the mode, this produces better color accuracy, higher resolution, or both, because the color information from all the exposures can be combined and measured values can replace interpolated ones.
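The color-accuracy half of pixel shift can be sketched in a few lines. This Python model assumes a perfectly static scene, an RGGB filter, and four exposures offset by one pixel in each direction, so every scene point is seen once through red, twice through green, and once through blue. Each simulated shot is already aligned to scene coordinates; the offsets and function names are hypothetical, and half-pixel resolution modes are not modeled.

```python
def bayer_color(row: int, col: int) -> str:
    """Filter color over photosite (row, col), assuming an RGGB Bayer layout."""
    if row % 2 == 0:
        return "R" if col % 2 == 0 else "G"
    return "G" if col % 2 == 0 else "B"


OFFSETS = [(0, 0), (0, 1), (1, 0), (1, 1)]  # hypothetical one-pixel sensor shifts
CHANNEL = {"R": 0, "G": 1, "B": 2}


def capture(scene, dy: int, dx: int):
    """One exposure: each scene point is recorded through the filter over it after the shift."""
    shot = []
    for r, row in enumerate(scene):
        shot_row = []
        for c, px in enumerate(row):
            color = bayer_color(r + dy, c + dx)
            shot_row.append((color, px[CHANNEL[color]]))  # (filter color, recorded value)
        shot.append(shot_row)
    return shot


def pixel_shift_combine(shots):
    """Merge aligned exposures into full RGB using only measured values."""
    h, w = len(shots[0]), len(shots[0][0])
    result = []
    for r in range(h):
        row = []
        for c in range(w):
            readings = {"R": [], "G": [], "B": []}
            for shot in shots:
                color, value = shot[r][c]
                readings[color].append(value)
            # Two green readings are averaged; red and blue are measured once.
            row.append(tuple(sum(readings[k]) / len(readings[k]) for k in "RGB"))
        result.append(row)
    return result


scene = [[(10, 20, 30), (40, 50, 60)], [(70, 80, 90), (1, 2, 3)]]
shots = [capture(scene, dy, dx) for dy, dx in OFFSETS]
print(pixel_shift_combine(shots))  # matches the scene: no interpolation needed
```

Because every color at every location is measured rather than estimated from neighbors, the combined image reproduces the scene exactly in this idealized case; any subject or camera movement between exposures breaks that assumption.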

Shutter Type

There are two types of shutter: global and rolling. A global shutter (as used by CCD sensors and some newer CMOS sensors) means that every pixel starts and stops its exposure at the same time.

A rolling shutter (as used by most CMOS sensors) exposes the sensor one row of pixels at a time. This happens very quickly, but because the rows are captured at slightly different moments, it can result in “bent object” artifacts when the camera or the subject moves (such as during video).

As you can see in the photograph below, which was taken while traveling at 50 miles per hour, the fence posts are slanted because the rolling shutter captured each row of the image at a slightly different time.
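The slanted-post effect follows directly from the row timing. In this Python sketch, a thin vertical post moves sideways across a tiny ASCII frame; with a rolling shutter, row `r` is sampled at time `r * row_time_s`, while with a global shutter every row is sampled at the same instant. The frame size, speed, and per-row readout time are hypothetical numbers chosen to give a shift of exactly one pixel per row.

```python
def capture_post(height: int, width: int, x0: float, speed_px_per_s: float,
                 row_time_s: float, rolling: bool = True) -> list[str]:
    """Return an ASCII frame of a thin vertical post moving to the right."""
    frame = []
    for r in range(height):
        # Rolling shutter: each row is sampled later than the one above it.
        # Global shutter: all rows are sampled at t = 0.
        t = r * row_time_s if rolling else 0.0
        x = round(x0 + speed_px_per_s * t)  # post position when this row was sampled
        frame.append("".join("#" if c == x else "." for c in range(width)))
    return frame


for line in capture_post(4, 6, x0=1, speed_px_per_s=1000, row_time_s=0.001):
    print(line)
```

With the rolling shutter the post comes out as a diagonal line even though it is perfectly vertical in reality; passing `rolling=False` draws it straight. Faster motion or a slower row readout increases the slant.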

Imaging Sensor Format and Pixel Size

Imaging sensors come in various formats (that is, physical sensor sizes). These include large format, medium format, full-frame, APS-C, and Micro Four Thirds. Beyond their physical size, sensor formats usually differ in resolution and pixel size.

Larger sensors and larger pixels come with various advantages. A larger sensor gives a wider field of view at a given focal length and has room for more pixels (higher resolution), while larger pixels offer higher dynamic range and a higher signal-to-noise ratio. This is because a larger pixel absorbs more light, so it can measure that light more accurately. Because larger sensors typically use larger pixels, they also typically have better image quality.
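The link between pixel size and signal-to-noise ratio can be worked through with photon shot noise. Photon arrivals follow Poisson statistics, so a pixel that collects N photons has noise of about sqrt(N) and an SNR of N / sqrt(N) = sqrt(N). The Python sketch below assumes square pixels, the same illumination per unit area, and shot noise as the only noise source (read noise, dark current, and quantum efficiency are ignored); the pitch and photon values are hypothetical.

```python
import math


def shot_noise_snr(pixel_pitch_um: float, photons_per_um2: float) -> float:
    """SNR of a square pixel limited only by photon shot noise (Poisson statistics)."""
    photons = photons_per_um2 * pixel_pitch_um ** 2  # collected light scales with pixel area
    return photons / math.sqrt(photons)              # signal / noise = N / sqrt(N) = sqrt(N)


for pitch in (2.0, 4.0):  # hypothetical pixel pitches in micrometers, same illumination
    print(pitch, shot_noise_snr(pitch, photons_per_um2=100))
```

Doubling the pixel pitch quadruples the pixel's area and the light it collects, which doubles its shot-noise-limited SNR (20 versus 40 in this example).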

Shutter Speed and Frame Rate

The shutter speed is how long each photosite is exposed to light, which is why a photo is called “an exposure” and shutter speed is also called “exposure time.” Frame rate, on the other hand, is how many full exposures a sensor can capture per second.


Many older cameras had much slower maximum shutter speeds, whereas now it’s typical to see 1/8000th of a second. The frame rate (and shutter speed) depends on how fast the sensor can capture light, convert it, and output it as digital data.

Factors affecting this process include the number of pixels (more pixels means more data per frame), the type of cabling (its data transfer rate), and the kind of sensor.
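A back-of-the-envelope calculation shows how pixel count and data transfer rate cap the frame rate. The Python sketch below takes the lower of two limits: how many frames per second the link can carry (bits per second divided by bits per frame), and the exposure limit (a frame can't be shorter than its own exposure). It is a simplified model that ignores sensor readout time, compression, and protocol overhead; the sensor size, bit depth, and link speed in the example are hypothetical.

```python
def max_frame_rate(width: int, height: int, bits_per_pixel: int,
                   link_bits_per_s: float, exposure_s: float) -> float:
    """Rough upper bound on frames per second from two limits (a simplified model)."""
    bits_per_frame = width * height * bits_per_pixel  # more pixels means more data per frame
    link_limit = link_bits_per_s / bits_per_frame     # limit set by the data transfer rate
    exposure_limit = 1 / exposure_s                   # a frame can't be shorter than its exposure
    return min(link_limit, exposure_limit)


# Hypothetical 12-megapixel sensor, 12-bit raw data, 1.44 Gbit/s link, 1/1000 s exposure
print(max_frame_rate(4000, 3000, 12, 1_440_000_000, 1 / 1000))  # prints 10.0
```

In this example the link, not the exposure, is the bottleneck: each 12-bit frame is 144 million bits, so a 1.44 Gbit/s connection can move only 10 of them per second.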

As imaging sensor technology advances, we’re now seeing ridiculously fast frame rates become possible.

Let There Be Light

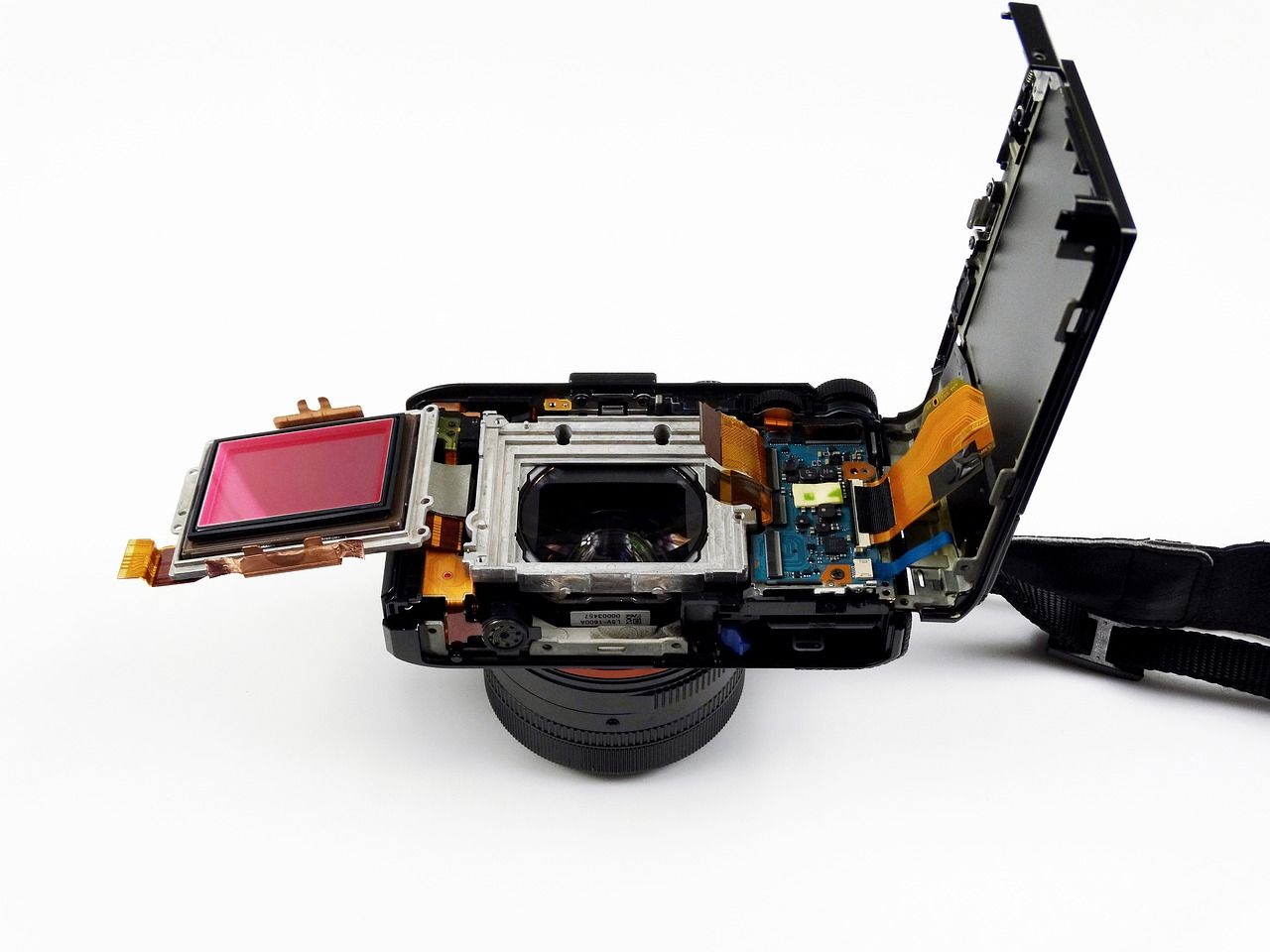

An imaging sensor is arguably the most integral component of a camera system. Thanks to advances in imaging technology, we now have sensors ranging from tiny smartphone camera sensors to high-tech astrophotography arrays.

It’s now possible (and affordable) to capture high-quality photographs with basically zero understanding of how camera technology works.

But knowing how an imaging sensor works and the differences between types of sensors will help you choose the right sensor for your goals.