If you often find yourself fetching data from websites, you should probably consider automating the process. Often called “web scraping”, this approach is common for sites that don't provide a formal API or feed. Of course, you won't get anywhere if the site you're trying to fetch is unavailable.

If you run your own site, you've probably had to deal with downtime before. It can be frustrating, causing you to lose visitors and interrupting whatever activity your site may be responsible for. In such circumstances, it pays to be able to easily check your website's availability.

Python is a great language for scripting, and its concise yet readable syntax makes implementing a site checker a simple task.

Creating Your Personalized Website Checker

The website checker is designed to handle multiple websites at once, so you can easily drop sites you no longer care about or add sites you launch in the future. The checker is an ideal “skeleton app” to build on, and it demonstrates a basic approach to fetching web data.

Import Libraries in Python

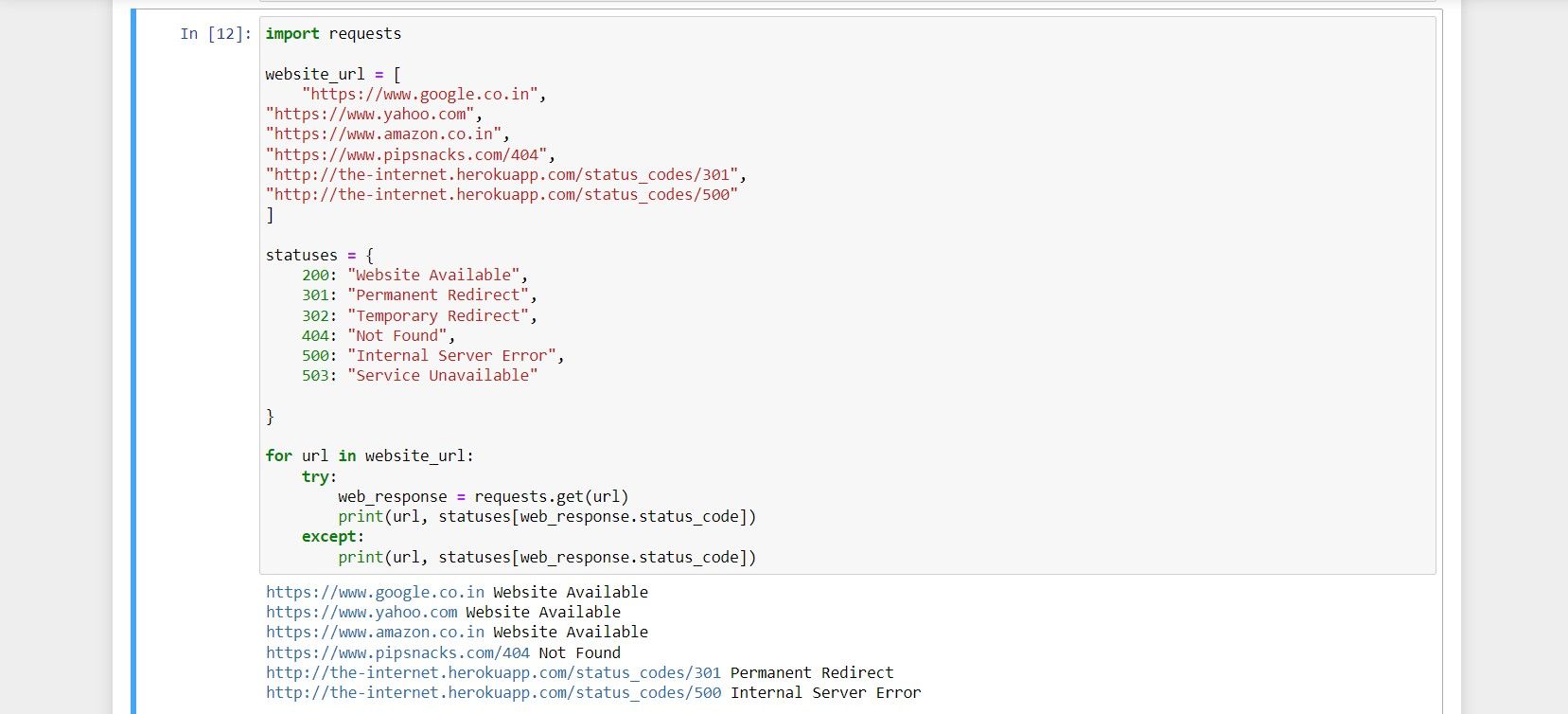

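To kick off the project, import the Requests library with Python's import statement. Requests is a third-party package, so if it isn't installed yet, install it first (for example, with pip install requests).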

```python
import requests
```

The Requests library is useful for communicating with websites. You can use it to send HTTP requests and receive response data.
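As a quick illustration, the sketch below sends a single GET request and reads a few fields from the Response object that requests.get() returns. The describe_response() helper and the example URL in the comment are illustrative assumptions; status_code, reason, ok, headers and content are standard Response attributes.

```python
import requests


def describe_response(url, timeout=10):
    """Send one GET request and summarize the response (hypothetical helper)."""
    # timeout stops the call from hanging forever on an unresponsive server
    response = requests.get(url, timeout=timeout)
    return {
        "status_code": response.status_code,  # numeric HTTP status, e.g. 200
        "reason": response.reason,            # status text sent by the server, e.g. "OK"
        "ok": response.ok,                    # True when the status code is below 400
        "content_type": response.headers.get("Content-Type"),
        "length": len(response.content),      # body size in bytes
    }


# Example (needs network access):
# print(describe_response("https://www.example.com"))
```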

Store the Website URLs in a List

Once you've imported the library, store the URLs of the websites you want to check in a list. This lets the checker work through several sites in a single run.

```python
import requests

website_url = [
    "https://www.google.co.in",
    "https://www.yahoo.com",
    "https://www.amazon.co.in",
    "https://www.pipsnacks.com/404",
    "http://the-internet.herokuapp.com/status_codes/301",
    "http://the-internet.herokuapp.com/status_codes/500"
]
```

The variable website_url stores the list of URLs. Inside the list, define each URL you want to check as an individual string. You can use the example URLs in the code for testing or you can replace them to start checking your own sites right away.
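If you would rather edit the list without touching the script, one option is to keep the URLs in a plain text file, one per line. The sketch below assumes a hypothetical file named sites.txt and a hypothetical load_urls() helper; blank lines and lines starting with # are skipped, so you can comment out sites you no longer care about.

```python
from pathlib import Path


def load_urls(path="sites.txt"):
    """Read one URL per line from a text file (hypothetical helper and file name)."""
    urls = []
    for line in Path(path).read_text(encoding="utf-8").splitlines():
        line = line.strip()
        # Skip blank lines and lines commented out with '#'
        if line and not line.startswith("#"):
            urls.append(line)
    return urls


# Example: website_url = load_urls("sites.txt")
```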

Next, store messages for common HTTP response codes. A dictionary works well here, with each message keyed by its status code. Your program can then print these messages instead of raw status codes, which makes the output easier to read.

```python
statuses = {
    200: "Website Available",
    301: "Permanent Redirect",
    302: "Temporary Redirect",
    404: "Not Found",
    500: "Internal Server Error",
    503: "Service Unavailable"
}
```
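Because the dictionary lists only a handful of codes, indexing it directly with statuses[code] raises a KeyError for anything else (a 403, for example). A minimal sketch of a safer lookup, assuming you want a readable fallback, checks the dictionary first and otherwise describes the code by its standard HTTP status class (1xx to 5xx). The describe_status() and status_classes names are hypothetical.

```python
statuses = {
    200: "Website Available",
    301: "Permanent Redirect",
    302: "Temporary Redirect",
    404: "Not Found",
    500: "Internal Server Error",
    503: "Service Unavailable",
}

# Generic descriptions for each HTTP status class, keyed by the first digit
status_classes = {
    1: "Informational",
    2: "Success",
    3: "Redirection",
    4: "Client Error",
    5: "Server Error",
}


def describe_status(code):
    """Return a readable message for an HTTP status code (hypothetical helper)."""
    # Prefer the specific message; otherwise describe the code by its class
    if code in statuses:
        return statuses[code]
    category = status_classes.get(code // 100, "Unknown Status")
    return f"{category} ({code})"
```

For example, describe_status(404) returns "Not Found", while describe_status(403) returns "Client Error (403)".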

Creating a Loop to Check Website Status

To check each URL in turn, you’ll want to loop through the list of websites. Inside the loop, check the status of each site by sending a request via the requests library.

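```python
for url in website_url:
    try:
        # allow_redirects=False reports 301/302 codes instead of following them
        web_response = requests.get(url, timeout=10, allow_redirects=False)
        code = web_response.status_code
        # Fall back to the raw code for statuses missing from the dictionary
        print(url, statuses.get(code, f"Status code {code}"))
    except requests.exceptions.RequestException as error:
        # No response was received (DNS failure, refused connection, timeout)
        print(url, "Request failed:", error)
```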

Where:

- for url in website_url iterates over the list of URLs.

- url is the variable that the for loop assigns each URL to.

- try/except handles errors raised while sending the request, such as a failed connection or a timeout.

- web_response holds the Response object that requests.get() returns; its status_code attribute contains the numeric HTTP status code.
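If you want to build on the skeleton, one option is to move the check into a function that returns results instead of printing them, so other code (a scheduler, a log file, an alert) can use them. The sketch below is one possible variant under stated assumptions: check_site() and check_sites() are hypothetical helpers, allow_redirects=False makes 301/302 responses visible instead of following them, and Response.elapsed reports the time between sending the request and receiving the response headers.

```python
import requests

statuses = {
    200: "Website Available",
    301: "Permanent Redirect",
    302: "Temporary Redirect",
    404: "Not Found",
    500: "Internal Server Error",
    503: "Service Unavailable",
}


def check_site(url, timeout=10):
    """Return (url, message, seconds) for one site (hypothetical helper)."""
    try:
        # Don't follow redirects, so 301/302 codes are reported as-is
        response = requests.get(url, timeout=timeout, allow_redirects=False)
    except requests.exceptions.RequestException as error:
        # No HTTP response at all: DNS failure, refused connection, timeout...
        return url, f"Unreachable ({type(error).__name__})", None
    code = response.status_code
    message = statuses.get(code, f"Status code {code}")
    # elapsed is a timedelta measured until the response headers arrived
    return url, message, response.elapsed.total_seconds()


def check_sites(urls, timeout=10):
    """Check every URL in turn and collect the results in a list."""
    return [check_site(url, timeout) for url in urls]


# Example (needs network access):
# for url, message, seconds in check_sites(["https://www.google.co.in"]):
#     print(url, message, seconds)
```

Returning None for the response time when no response arrives keeps "site unreachable" distinct from "site answered with an error code".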

The Entire Code Snippet

If you prefer to review the entire code in one go, here's a full code listing for reference.

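```python
import requests

website_url = [
    "https://www.google.co.in",
    "https://www.yahoo.com",
    "https://www.amazon.co.in",
    "https://www.pipsnacks.com/404",
    "http://the-internet.herokuapp.com/status_codes/301",
    "http://the-internet.herokuapp.com/status_codes/500"
]

statuses = {
    200: "Website Available",
    301: "Permanent Redirect",
    302: "Temporary Redirect",
    404: "Not Found",
    500: "Internal Server Error",
    503: "Service Unavailable"
}

for url in website_url:
    try:
        # allow_redirects=False reports 301/302 codes instead of following them
        web_response = requests.get(url, timeout=10, allow_redirects=False)
        code = web_response.status_code
        # Fall back to the raw code for statuses missing from the dictionary
        print(url, statuses.get(code, f"Status code {code}"))
    except requests.exceptions.RequestException as error:
        # No response was received (DNS failure, refused connection, timeout)
        print(url, "Request failed:", error)
```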

And here’s an example run of the code:

Python’s Coding Capabilities in Web Scraping

Python’s third-party libraries, such as Requests, are well suited to tasks like web scraping and fetching data over HTTP.

You can send automated requests to websites to perform various types of tasks. These might include reading news headlines, downloading images, and sending emails automatically.
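As a small taste of the scraping side, the sketch below pulls the text of every &lt;h2&gt; element out of an HTML string using Python's built-in html.parser module. Treating &lt;h2&gt; as the headline tag is an assumption, since real sites vary, and the HeadlineParser and extract_headlines() names are hypothetical. In practice you would first fetch the HTML, for example with requests.get(url).text.

```python
from html.parser import HTMLParser


class HeadlineParser(HTMLParser):
    """Collect the text inside a chosen tag (assumed to be <h2> for headlines)."""

    def __init__(self, tag="h2"):
        super().__init__()
        self.tag = tag
        self.inside = False
        self.headlines = []

    def handle_starttag(self, tag, attrs):
        # html.parser reports tag names in lowercase
        if tag == self.tag:
            self.inside = True
            self.headlines.append("")

    def handle_endtag(self, tag):
        if tag == self.tag:
            self.inside = False

    def handle_data(self, data):
        # Keep text only while inside the target tag (covers nested <a> links)
        if self.inside:
            self.headlines[-1] += data


def extract_headlines(html, tag="h2"):
    """Return the cleaned-up text of every matching tag in the HTML string."""
    parser = HeadlineParser(tag)
    parser.feed(html)
    parser.close()
    # Collapse runs of whitespace and drop headlines that are empty
    return [" ".join(text.split()) for text in parser.headlines if text.strip()]
```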