You’ve probably been in one of those Facebook Groups before: the kind with so much hate speech and misinformation that you’re left wondering, “What is Facebook doing about all of this?”

The social media site has revealed the measures it's using to make it harder for Groups that violate its Community Guidelines to operate on the platform.

Here are some of the ways that Facebook is cracking down on Groups that break the rules...

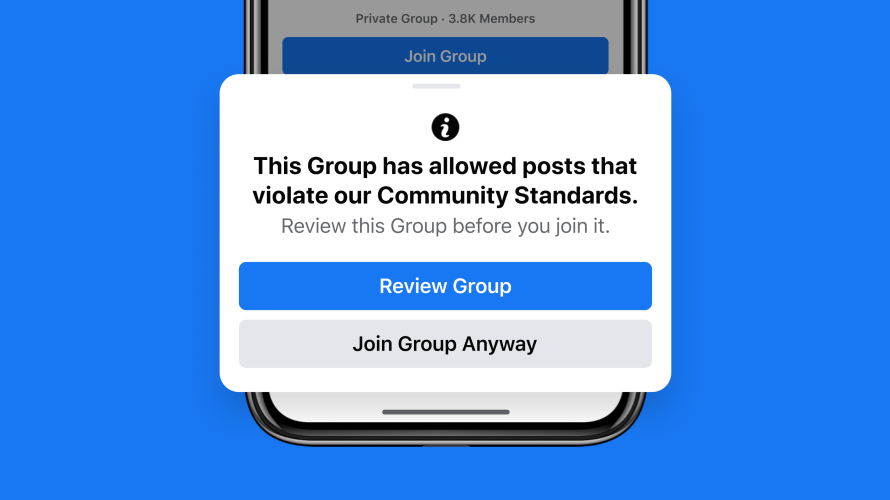

1. Notifying Users When They Try to Join a Rule-Breaking Group

Facebook announced on its company blog in March 2021 that it will start notifying users when they try to join a Group that has repeatedly violated its Community Guidelines. The notification prompt will give users the option to either review the Group or join it anyway.

The goal of this feature is to discourage users from joining Groups that violate its Community Guidelines, while also ensuring that users who insist on joining these Groups are aware of the violations.

2. Limiting Invites for Groups With Violations

Facebook also revealed that it will limit the number of invites members of rule-breaking Groups can send.

This means members of the Group can only invite a limited number of their friends to join. Facebook says it believes this will help cut down on the number of people likely to join these Groups.

3. Reducing the Reach of Rule-Breaking Groups

Another strategy Facebook will use to crack down on Groups that break its rules is to reduce the reach of content from these Groups.

Facebook does this by showing the content from rule-breaking Groups lower in members’ News Feed, where it's less likely to be seen.

4. Demoting Rule-Breaking Groups in Recommendations

In its March blog post, Facebook also revealed that it will demote Groups with Community Standards violations in the Recommended Groups list shown to users. These Groups will appear further down the list, so fewer people see them.

The goal of this demotion, Facebook claims, is to make it harder for users to discover and engage with Groups that break its rules.

5. Requiring Moderators to Approve All Posts

To control the spread of harmful content on its platform, Facebook says that it will temporarily require admins and moderators to approve all posts in Groups where a substantial number of people have violated the Community Guidelines.

Admins and moderators of Groups with many ex-members of a deleted Group will also be made to temporarily approve all posts.

Facebook hopes to control the spread of hate speech and misinformation on its platform by giving admins and moderators the chance to vet posts shared in a Group.

Meanwhile, if a Group admin or moderator repeatedly approves content that goes against the rules, Facebook says it will take the entire Group down.

For example, Facebook removed “Stop The Steal”, a Group with over 300,000 members, for encouraging its members to partake in violent activities during the 2020 US presidential election.

6. Blocking Rule-Breaking Users From Posting in Any Group

Another measure Facebook is using to limit the reach of users trying to spread harmful content on its platform is to temporarily block users who have repeatedly violated its Community Guidelines from posting or commenting in any Group for 30 days.

The offending user will also not be able to create new Groups or even send Group invites to friends.

The goal of this measure, Facebook says, is to slow down the reach of people who want to use its platform to spread hate speech and misinformation.

7. Automatically Removing Group Posts That Break Its Rules

Facebook announced that it will automatically delete Group content that violates its Community Guidelines. With the aid of AI and machine learning, Facebook detects harmful content posted in Groups even before any user reports it.

Reviewers trained by Facebook then check content flagged by its systems or reported by users to confirm violations. This helps Facebook automatically remove posts with severe violations.

8. Banning Movements Tied to Violence

Facebook is also cracking down on organizations and movements that have demonstrated a “significant risk to public safety” on its platform.

An example of this is QAnon, a US-based movement known for spreading conspiracy theories and encouraging violence. Facebook has completely banned the movement on its platform, deleting all content praising, supporting, or representing it. It also removed over 790 Groups related to the movement.

Are Facebook Groups Now Safe?

With all these measures in place to crack down on Groups that break its rules, it seems like Facebook is out to put an end to the spread of inappropriate and misleading content on its platform.

However, Facebook also acknowledged that there is more it still needs to do to make Groups safe.