To the best of modern medical science's understanding, you are a meticulously structured sack of volatile chemicals under pressure. There is no reason to believe that there is anything at all special about your body compared to that of a corpse, or that of a Buick for that matter, except for one thing: your body contains a living human brain.

There is no evidence that any new laws of physics or supernatural phenomena are involved in human cognition. Vitalism and dualism are well and truly dead. This is, at least at first, disturbing, because from the inside of the human experience it certainly doesn't feel like we're nothing more than a collection of chemicals. Being a person feels, on a very deep and intuitive level, like something that shouldn't be possible for what is ultimately a complicated molecular machine.

Programming Consciousness

This raises the question: if we learn how consciousness works, can we build machines, or write software, that have it too? Can the effort to build intelligent software teach us lessons about the nature of the human mind? Can we finally understand subjective experience well enough to decide, once and for all, how much moral weight we should assign to the experiences of less sophisticated minds, like those of livestock, dolphins, or fetuses?

These are hard questions that philosophers have grappled with for centuries. However, because philosophy as a discipline is not good at actually solving problems, very little headway has been made. Philosopher David Chalmers's TED talk on consciousness is a good example of how a traditional philosopher approaches the subject.

This is a good introduction to the topic, though Chalmers's philosophy is a supernatural one: it proposes metaphysical phenomena that give rise to consciousness without interacting with the physical world at all. This is what I'd call the easy way out.

If, in your effort to explain something, you resort to magic, then you haven't really explained it -- you've simply given up with style. Arguably, Star Trek addressed the same issue better.

Recently, science has begun to make meaningful progress on this problem, as artificial intelligence and neuroscience chip away at its edges. This has inspired new, evidence-based approaches to philosophical thinking. The insights gained this way are enormously interesting: they help outline a coherent theory of consciousness and may guide us toward machines that can experience as well as reason.

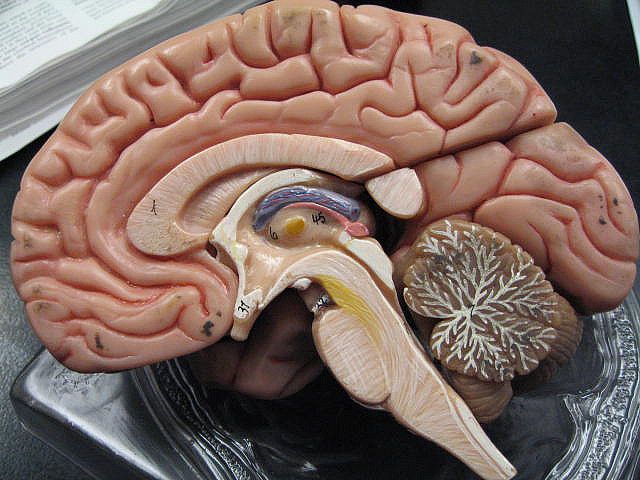

Neuroscience and Consciousness

In neuroscience, it is largely the defects of the brain that teach us things about its function. When the brain breaks, we get a peek behind the curtain of the mind. Taken together, these insights begin to sketch the broad outlines of what the structure of consciousness must look like, and the result is fascinating.

For example, Cotard's delusion (more colorfully known as "Walking Corpse Syndrome") is a delusion often caused by severe schizophrenia or physical damage to the parietal lobe of the brain. It causes a variety of symptoms, the most interesting of which is the delusion that the sufferer does not exist.

Sufferers of Cotard's delusion lack a basic form of self-awareness: they genuinely don't believe that they exist, which often leads them to conclude that they have died. Descartes once claimed that the fundamental truth, on which all else can be based, is "I think, therefore I am." People with Cotard's delusion disagree. This suggests that the component of consciousness that includes self-awareness can be selectively switched off by damage to a specific area of the brain, while leaving the rest of the human intellect relatively intact.

A related condition is "blindsight," which affects some people who are blind due to damage to the primary visual cortex. Blindsight patients can instinctively catch objects thrown at them and, if you place objects in front of them and ask them to guess what they are, they perform significantly better than chance. However, they do not believe that they can see: subjectively, they are blind.

Blindsight patients are unusual in that they have a functioning sense (sight) but are not conscious of it. What the brain damage has destroyed is not their ability to process visual information, but their ability to be consciously aware of that processing.

Blindsight occurs when the primary visual cortex (V1) is damaged while other pathways that carry visual information around it are spared. This leaves neuroscientists in the unusual position of knowing which part of the visual system is necessary for visual information to enter conscious experience, but not why.

Interestingly, the reverse of blindsight is also possible: patients with Anton-Babinski syndrome lose their vision but retain the conscious impression of having it, insisting that they can see normally and confabulating implausible explanations for their inability to perform basic tasks.

There have also been experiments on selectively disabling consciousness. For example, when the claustrum, a thin sheet of neurons deep near the center of the brain, was stimulated by an electrode in at least one patient, it appeared to switch off consciousness and higher cognition entirely. Both returned a few seconds after the electric current stopped.

What's interesting is that while the stimulation is occurring, the patient remains awake, eyes open, sitting up. If the patient is performing a repetitive task when the current is switched on, they simply trail off and stop. The claustrum is believed to coordinate communication between a number of different brain regions, including the hippocampus, the amygdala, the caudate nucleus, and possibly others.

Some neuroscientists believe that, because the claustrum coordinates communication between different modules of the brain, stimulating it disrupts that coordination and causes the brain to break down into separate components, each largely useless in isolation and incapable of building a subjective experience.

This notion meshes with what we know about general anesthetics, which we used for more than a century before we had any real idea how they work.

One leading hypothesis is that general anesthetics interfere with networking between different high-level components of the brain, preventing them from forming whatever neurological system is needed to produce a coherent conscious experience. On reflection, this makes a certain amount of intuitive sense: if your visual cortex can't send information to your working memory, there's no way for you to have a conscious visual experience that you could talk about later.

The same goes for hearing, memory, emotion, internal monologue, planning, and so on. Each of these systems is a module that, once disconnected from working memory, would take a crucial part of the conscious experience with it.

In fact, it may be more accurate to talk about consciousness not as a distinct, unified entity, but as a collaboration of many different kinds of awareness, bound together by inclusion in the narrative flow of memory. In other words, instead of a single "consciousness," you might have a visual consciousness, an auditory consciousness, a consciousness of memories, and so on. It's an open question whether anything remains when you take away all of these pieces, or whether this explains consciousness entirely.
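To make the idea concrete, here is a deliberately crude toy sketch, not a model from the neuroscience literature. Each hypothetical `Module` processes its own input, and its output only enters the shared narrative if the module is connected to working memory. Cutting one link loosely mimics blindsight (the output still exists and could guide behavior, but never reaches the story), and cutting every link loosely mimics the disconnection that anesthetics are thought to cause. All names here (`Module`, `ToyBrain`, `connected`, `step`) are illustrative assumptions.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Module:
    """A hypothetical specialist module that turns a stimulus into an output."""
    name: str
    process: Callable[[str], str]


@dataclass
class ToyBrain:
    modules: dict[str, Module]
    # Modules whose output can reach working memory (and hence the narrative).
    connected: set[str] = field(default_factory=set)

    def step(self, stimuli: dict[str, str]) -> tuple[dict[str, str], list[str]]:
        outputs: dict[str, str] = {}
        narrative: list[str] = []
        for name, stimulus in stimuli.items():
            result = self.modules[name].process(stimulus)
            outputs[name] = result  # processing happens whether or not it is "experienced"
            if name in self.connected:
                narrative.append(f"{name}: {result}")  # only connected modules join the story
        return outputs, narrative


if __name__ == "__main__":
    brain = ToyBrain(
        modules={
            "vision": Module("vision", lambda s: f"saw {s}"),
            "hearing": Module("hearing", lambda s: f"heard {s}"),
        },
        connected={"vision", "hearing"},
    )
    stimuli = {"vision": "a ball", "hearing": "a dog"}
    print(brain.step(stimuli)[1])  # both modalities appear in the narrative
    brain.connected.discard("vision")  # blindsight-like lesion
    print(brain.step(stimuli))  # vision output still exists, but not in the narrative
    brain.connected.clear()  # anesthesia-like disconnection of everything
    print(brain.step(stimuli)[1])  # the narrative is now empty
```

The point of the design is that removing a link never stops a module from working; it only stops that module's work from showing up in the record.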

Theories of Consciousness

Daniel Dennett, sometimes called the "cranky old man" of consciousness research, holds that this is in fact the case: consciousness simply isn't as special as most people imagine. His model of consciousness, which some accuse of being overly reductionist, is called the 'multiple drafts' theory, and works like this:

The brain functions as a population of semi-independent, interconnected modules, continuously transmitting information semi-discriminately into the network, often in response to the signals that they are receiving from other modules. Signals that trigger responses from other modules, such as a smell that provokes a visual memory, cascade between modules and escalate. The memory might provoke an emotion, and an executive process might have a response to that emotion, which might be structured by the language center into part of an inner monologue.

This process increases the odds that the whole cascade of related signals will be detected by the brain's memory-encoding mechanism and become part of the record of short-term memory: the "story" of consciousness. Some of that story will make it into longer-term memory and become part of the permanent record.
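A toy sketch of the cascade idea might look like the following. The module names, the wiring in `RESPONDS_TO`, and the size threshold used for memory encoding are all illustrative assumptions, not parts of Dennett's theory. A signal spreads breadth-first to every module that responds to it, and only cascades that reach enough modules get written into the short-term "story"; cascade size stands in, very loosely, for escalation.

```python
from collections import deque

# Hypothetical wiring: which modules react to a signal from which (illustrative only).
RESPONDS_TO: dict[str, list[str]] = {
    "smell": ["visual_memory"],
    "visual_memory": ["emotion"],
    "emotion": ["executive"],
    "executive": ["language"],  # e.g. a reaction put into words by the inner monologue
    "language": [],
    "itch": [],  # a signal nothing else responds to
}


def cascade(start: str, graph: dict[str, list[str]]) -> list[str]:
    """Spread a signal breadth-first; return every module it reached, in order."""
    reached = [start]
    seen = {start}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        for nxt in graph.get(node, []):
            if nxt not in seen:
                seen.add(nxt)
                reached.append(nxt)
                queue.append(nxt)
    return reached


def encode_story(signals: list[str], graph: dict[str, list[str]],
                 threshold: int = 3) -> list[list[str]]:
    """Keep only cascades that reach at least `threshold` modules (an assumed rule)."""
    story = []
    for signal in signals:
        chain = cascade(signal, graph)
        if len(chain) >= threshold:
            story.append(chain)
    return story


if __name__ == "__main__":
    for chain in encode_story(["itch", "smell"], RESPONDS_TO):
        print(" -> ".join(chain))
```

Here the lone `itch` signal never spreads, so it never makes it into the story, while the smell that sets off a memory, an emotion, and a verbal reaction does.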

Consciousness, according to Dennett, is nothing but a serial narrative composed of these sorts of cascades, which make up the entire system's record of the world it exists in and of its path through that world. Because we have no introspective access to how the individual modules work internally, when we are asked to describe what one of them is doing, we come up with no useful information. As a result, we feel, intuitively, that our subjective experience is indefinable and ineffable.

An example would be asking someone to describe what the color red looks like. The question feels absurd, not because of any inherent fact about the universe, but because the underlying structure of the brain doesn't actually allow us to know how the color red is implemented in our own hardware. As far as our conscious experience is concerned, it's just... red.

Philosophers call these sorts of experiences 'qualia' and often assign near-mystical significance to them. Dennett suggests that they're more like a neurological 404 page the brain throws up when asked what goes on behind the curtain of a brain region that isn't accessible to the conscious narrative. Dennett himself puts it like this:

There is no single, definitive "stream of consciousness," because there is no central Headquarters, no Cartesian Theatre where "it all comes together" for the perusal of a Central Meaner. Instead of such a single stream (however wide), there are multiple channels in which specialist circuits try, in parallel pandemoniums, to do their various things, creating Multiple Drafts as they go. Most of these fragmentary drafts of "narrative" play short-lived roles in the modulation of current activity but some get promoted to further functional roles, in swift succession, by the activity of a virtual machine in the brain. The seriality of this machine (its "von Neumannesque" character) is not a "hard-wired" design feature, but rather the upshot of a succession of coalitions of these specialists.

There are, of course, other schools of thought. One model that is currently popular among some neuroscientists and philosophers is integrated information theory, which holds that a system's consciousness depends on how much information it integrates: roughly, how much information the system as a whole generates over and above what its components generate independently.

However, critics have pointed out that the theory ranks some simply structured, intuitively non-conscious information systems as vastly more conscious than humans. Scott Aaronson, a theoretical computer scientist and vocal critic of integrated information theory, put it this way in a 2014 blog post:

"In my opinion, the fact that Integrated Information Theory is wrong—demonstrably wrong, for reasons that go to its core—puts it in something like the top 2% of all mathematical theories of consciousness ever proposed. Almost all competing theories of consciousness, it seems to me, have been so vague, fluffy, and malleable that they can only aspire to wrongness."

Another proposed model holds that consciousness is the result of the brain modeling itself. This idea may be compatible with Dennett's model, but it suffers from a possibly fatal flaw: it suggests that a Windows computer running a virtual machine of itself is in some sense conscious. The list of models of consciousness is about as long as the list of everyone who's ever felt inclined to tackle such a difficult problem.

There are a lot of options out there, from the outright mystical to Dennett's stolid, cynical pragmatism. For my money, Dennett's multiple drafts theory is, if not a complete account of why human beings talk about consciousness, at least a solid start along that path.

Artificial Intelligence & Consciousness

Let's say that, a few years from now, progress in neuroscience has led to a Grand Unified Theory of Consciousness. How could we know if it's right? If the theory leaves out something important, how would we know? The history of science has taught us to be wary of nice-sounding ideas that we can't test. So how might we test a model of consciousness?

Well, we could try to build one.

Our ability to construct intelligent machines has been going through something of a renaissance lately. Watson, IBM's question-answering software, which famously won on the game show Jeopardy!, is also capable of a surprisingly broad range of intellectual tasks: it has been adapted to work both as an inventive chef and as a medical diagnosis assistant.

Though IBM calls Watson a cognitive computer, Watson is very much a triumph of non-neuromorphic artificial intelligence: it is intelligent software that does not attempt to implement the specific insights of neuroscience and brain research. Watson works by combining a large number of very different machine learning algorithms, some of which are used to evaluate the output of others to gauge their usefulness, with many components that are hand-tuned to connect together in productive ways.
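The general pattern of several independent scorers whose outputs are weighed by another model can be sketched in a few lines. This is a toy illustration, not IBM's DeepQA code or API: the evidence passages, the two scorers (`passage_overlap`, `mention_count`), and the hand-picked weights are all hypothetical, and a real system would fit the combining weights to training data rather than choose them by hand.

```python
import math
from typing import Callable

# Hypothetical evidence corpus; a real system would search a large document store.
EVIDENCE = [
    "Mars is often called the red planet because of iron oxide on its surface",
    "Venus is the hottest planet in the solar system",
]

Scorer = Callable[[str, str], float]


def passage_overlap(question: str, candidate: str) -> float:
    """Best fraction of question words found in a passage that mentions the candidate."""
    q_words = set(question.lower().split())
    best = 0.0
    for passage in EVIDENCE:
        words = set(passage.lower().split())
        if candidate.lower() in words:
            best = max(best, len(q_words & words) / len(q_words))
    return best


def mention_count(question: str, candidate: str) -> float:
    """How many passages mention the candidate at all (ignores the question)."""
    return float(sum(candidate.lower() in p.lower().split() for p in EVIDENCE))


SCORERS: list[Scorer] = [passage_overlap, mention_count]
WEIGHTS = [4.0, 1.0]  # hand-picked stand-ins for learned weights
BIAS = -3.0


def confidence(question: str, candidate: str) -> float:
    """Combine the individual scorers' outputs into one confidence via a logistic function."""
    z = BIAS + sum(w * s(question, candidate) for w, s in zip(WEIGHTS, SCORERS))
    return 1.0 / (1.0 + math.exp(-z))


def rank(question: str, candidates: list[str]) -> list[tuple[str, float]]:
    """Score every candidate answer and sort from most to least confident."""
    scored = [(c, confidence(question, c)) for c in candidates]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


if __name__ == "__main__":
    q = "which planet is called the red planet"
    for cand, conf in rank(q, ["Venus", "Jupiter", "Mars"]):
        print(f"{cand}: {conf:.2f}")
```

Neither scorer is decisive on its own (Mars and Venus get the same `mention_count`); the combining step is what separates them, which is the sense in which one component judges the usefulness of the others' output.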

As Watson improves and its reasoning becomes deeper and more useful, it's easy to imagine using Watson technology, along with other technology not yet developed, to build systems that emulate the function of specific known brain systems, and integrating those systems in a way that could produce a conscious experience.

We could then experiment with that machine to see whether it describes a subjective experience at all and, if so, whether that experience is similar to the human experience. If we could build a conscious computer that, at its lowest level, is not similar to our own neurology, that would be strong evidence for the model.

This idea of building an artificial intelligence to validate theories about the brain is not a new one. Spaun, a research project at the University of Waterloo, is a large simulation of roughly 2.5 million spiking neurons, designed to implement models of various brain regions, including those involved in executive function, vision, working memory, and motor control.

The simulation can perform a number of basic cognitive tasks, like recognizing and drawing symbols, repeating back strings of numbers, answering simple questions by drawing the answers, and predicting the next digit of a sequence. That Spaun can do these things suggests that its underlying models of those brain regions are correct, at least in broad strokes.

In principle, the same approach could be applied to consciousness, provided we can build the component parts of the system to a high enough standard. Of course, the ability to make conscious machines comes with responsibility. Turning on a machine that may be conscious is at least as big a moral decision as having a child, and, if we succeed, we are responsible for that machine's well-being for the rest of its existence.

This is on top of the risks associated with building very intelligent software at all, namely the risk that a machine with values different from our own rapidly improves its own architecture until it's smart enough to begin changing the world in ways that we may not like. Numerous commentators, including Stephen Hawking and Elon Musk, have warned that this may be one of the most significant threats humanity faces going forward.

Put another way, the ability to create a new kind of "human" is a big responsibility. It might be the most important thing humanity has ever done as a species, and we should take it very seriously. Nonetheless, there's potential there, too: the potential to answer those fundamental questions about our own minds. We're still a ways out from having the technology we need to put these ideas into practice, but not so far out that we can ignore them entirely. The future is on its way, deceptively quickly, and we'd be wise to get ready for it today.

Image credits: "Watson and the other three podiums" by Atomic Taco; "No Brain" by Pierre-Olivier Carles; "Brain" by GreenFlames09; "Lights of Ideas" by Saad Faruque; "Abstract Eye" by ARTEMENKO VALENTYN via Shutterstock