Web scrapers automatically collect information and data that's usually only accessible by visiting a website in a browser. By doing this autonomously, web scraping scripts open up a world of possibilities in data mining, data analysis, statistical analysis, and much more.

Why Web Scraping Is Useful

We live in a day and age where information is more readily available than at any other time. The infrastructure used to deliver these very words you are reading is a conduit to more knowledge, opinion, and news than has ever been accessible in human history.

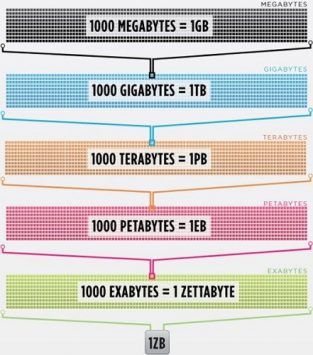

So much so, in fact, that the smartest person's brain, enhanced to 100% efficiency (someone should make a movie about that), would still not be able to hold 1/1000th of the data stored on the internet in the United States alone.

Cisco estimated in 2016 that traffic on the internet exceeded one zettabyte, which is 1,000,000,000,000,000,000,000 bytes, or one sextillion bytes (go ahead, giggle at sextillion). One zettabyte is about four thousand years of streaming Netflix. That is the equivalent of you, intrepid reader, streaming The Office from start to finish 500,000 times without stopping.

All this data and information is very intimidating. Not all of it is right. Not much of it is relevant to everyday life, but more and more devices are delivering this information from servers around the world right to our eyes and into our brains.

As our eyes and brains can't really handle all of this information, web scraping has emerged as a useful method for gathering data programmatically from the internet. Web scraping is the general term for extracting data from websites and saving it locally.

Think of a type of data and you can probably collect it by scraping the web. Real estate listings, sports data, email addresses of businesses in your area, and even the lyrics from your favorite artist can all be sought out and saved by writing a small script.

How Does a Browser Get Web Data?

To understand web scrapers, we will need to understand how the web works first. To get to this website, you either typed "makeuseof.com" into your web browser or you clicked a link from another web page (tell us where, seriously we want to know). Either way, the next couple of steps are the same.

First, your browser will take the URL you entered or clicked on (Pro-tip: hover over the link to see the URL at the bottom of your browser before clicking it to avoid getting punk'd) and form a "request" to send to a server. The server will then process the request and send a response back.

The server's response contains the HTML, JavaScript, CSS, JSON, and other data needed to allow your web browser to form a web page for your viewing pleasure.
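To make the "request" a little less abstract, here is a minimal sketch of the text a client could send for a simple GET. The function name build_get_request and the handful of headers are assumptions for illustration; a real browser sends many more headers than this.

```python
from urllib.parse import urlsplit


def build_get_request(url: str) -> str:
    """Return the text of a minimal HTTP/1.1 GET request for `url`."""
    parts = urlsplit(url)
    # An empty path means the site root
    path = parts.path or "/"
    if parts.query:
        path += "?" + parts.query
    lines = [
        f"GET {path} HTTP/1.1",   # method, path, protocol version
        f"Host: {parts.netloc}",  # which site on the server we want
        "Accept: text/html",      # the kind of content we would like back
        "Connection: close",
        "",                       # a blank line ends the headers
        "",
    ]
    return "\r\n".join(lines)


print(build_get_request("https://www.makeuseof.com/"))
```

The server reads the first line to learn what you want, and the Host header to learn which site you want it from.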

Inspecting Web Elements

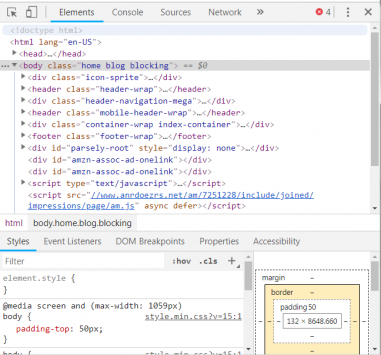

Modern browsers let us see some details of this process. In Google Chrome on Windows you can press Ctrl + Shift + I, or right-click and select Inspect. The window will then present a screen that looks like the following.

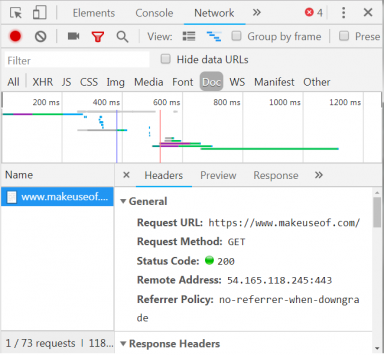

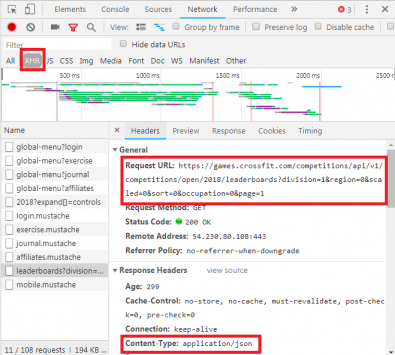

A tabbed list of options lines the top of the window. Of interest right now is the Network tab. This will give details about the HTTP traffic as shown below.

In the bottom right corner we see information about the HTTP request. The URL is what we expect, and the "method" is an HTTP "GET" request. The status code from the response is listed as 200, which means the request succeeded.

Underneath the status code is the remote address, which is the public-facing IP address of the makeuseof.com server. The client gets this address via the DNS protocol.
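You can ask for the same kind of lookup from Python. This is a minimal sketch using the standard library's socket module; the helper name resolve is made up for illustration, and the answer comes from your system's resolver, which may consult a local hosts file before it ever speaks DNS.

```python
import socket


def resolve(hostname: str) -> list[str]:
    """Return the unique IP addresses the system resolver reports for `hostname`."""
    infos = socket.getaddrinfo(hostname, 80, proto=socket.IPPROTO_TCP)
    addresses = []
    for _family, _type, _proto, _canonname, sockaddr in infos:
        ip = sockaddr[0]  # sockaddr starts with the IP address for IPv4 and IPv6
        if ip not in addresses:
            addresses.append(ip)
    return addresses


# "localhost" resolves without network access; swap in a real host name
# such as "makeuseof.com" to see a public-facing address instead.
print(resolve("localhost"))
```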

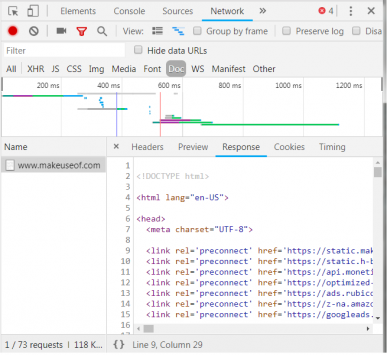

The next section lists details about the response. The response header not only contains the status code, but also the type of data or content that the response contains. In this case, we are looking at "text/html" with a standard encoding. This tells us that the response is literally the HTML code to render the website.
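The same details are available to a script. The sketch below uses only Python's standard library: it starts a throwaway server on your own machine, sends it a GET request, and reads back the status code and content type. The page content, the DemoHandler class, and the fetch_demo_page function are all invented for this demo, so treat it as an illustration rather than a recipe.

```python
import threading
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer

PAGE = b"<html><body><h1>Hello, scraper</h1></body></html>"


class DemoHandler(BaseHTTPRequestHandler):
    def do_GET(self):
        # Answer every GET with status 200 and a small HTML page
        self.send_response(200)
        self.send_header("Content-Type", "text/html; charset=utf-8")
        self.send_header("Content-Length", str(len(PAGE)))
        self.end_headers()
        self.wfile.write(PAGE)

    def log_message(self, format, *args):
        pass  # keep the demo output quiet


def fetch_demo_page():
    """Start a local server, GET its page, and return (status, content_type, body)."""
    server = HTTPServer(("127.0.0.1", 0), DemoHandler)  # port 0 = any free port
    threading.Thread(target=server.serve_forever, daemon=True).start()
    try:
        url = f"http://127.0.0.1:{server.server_port}/"
        # An empty ProxyHandler makes sure the request goes straight to localhost
        opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))
        with opener.open(url, timeout=5) as response:
            content_type = response.headers.get("Content-Type")
            return response.status, content_type, response.read().decode("utf-8")
    finally:
        server.shutdown()
        server.server_close()


status, content_type, body = fetch_demo_page()
print(status)        # the status code from the response
print(content_type)  # the type of content the response carries
print(body)          # the HTML itself
```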

Other Types of Responses

Additionally, servers can return data objects as a response to a GET request, instead of just HTML for the web page to render. A website's Application Programming Interface (or API) typically utilizes this type of exchange.

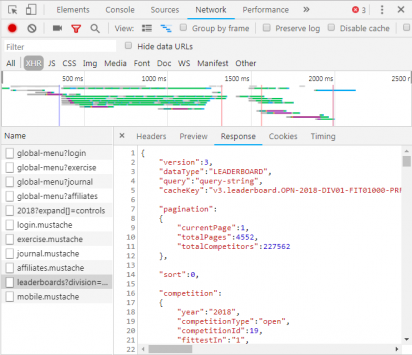

Perusing the Network tab as shown above, you can see whether this type of exchange is taking place. On the CrossFit Open Leaderboard, for example, the request that fills the table with data shows up in the list.

Clicking over to the response shows the JSON data instead of the HTML code for rendering the website. Data in JSON is a series of labels and values, in a layered, outlined list.
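Here is a small sketch of what those layered labels and values look like once Python has hold of them. The payload is entirely made up (it is not the real leaderboard format), and athlete_names is a hypothetical helper; the point is only that json.loads turns the text into nested dictionaries and lists you can walk through.

```python
import json

# Hypothetical payload, invented for this example
raw_response = """
{
  "leaderboard": [
    {"rank": 1, "athlete": {"name": "Athlete A", "country": "US"}, "points": 45},
    {"rank": 2, "athlete": {"name": "Athlete B", "country": "CA"}, "points": 52}
  ]
}
"""


def athlete_names(payload: str) -> list[str]:
    """Pull every athlete's name out of the layered JSON text."""
    data = json.loads(payload)  # text in, dictionaries and lists out
    return [row["athlete"]["name"] for row in data["leaderboard"]]


print(athlete_names(raw_response))
```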

Manually parsing HTML code or going through thousands of key/value pairs of JSON is a lot like reading the Matrix. At first glance, it looks like gibberish. There may be too much information to manually decode it.

Web Scrapers to the Rescue!

Now before you go asking for the blue pill to get the heck out of here, you should know that we don't have to manually decode HTML code! Ignorance is not bliss, and this steak is delicious.

A web scraper can perform these difficult tasks for you. Scraping frameworks are available in Python, JavaScript (including Node.js), and other languages. One of the easiest ways to begin scraping is by using Python and Beautiful Soup.

Scraping a Website With Python

Getting started only takes a few lines of code, as long as you have Python, Beautiful Soup, and the requests library installed. Here is a small script to get a website's source and let Beautiful Soup evaluate it.

```python
import requests
from bs4 import BeautifulSoup

url = "http://www.athleticvolume.com/programming/"

# Fetch the page with an HTTP GET request
content = requests.get(url)

# Parse the HTML with Python's built-in parser
soup = BeautifulSoup(content.text, "html.parser")

print(soup)
```

Very simply, we are making a GET request to a URL and then putting the response into an object. Printing the object displays the HTML source code of the URL. The process is just as if we manually went to the website and clicked View Source.

Specifically, this is a website that posts CrossFit-style workouts every day, but only one per day. We can build our scraper to get the workout each day, and then add it to an aggregating list of workouts. Essentially, we can create a text-based historical database of workouts we can easily search through.

The magic of Beautiful Soup is the ability to search through all the HTML code using the built-in find_all() function (also available under its older name, findAll()). In this specific case, the website uses several <div> elements with the class "sqs-block-content". Therefore, the script needs to loop through all of those elements and find the one interesting to us.

Additionally, there are a number of <p> tags in the section. The script can add all the text from each of these tags to a local variable. To do this, add a simple loop to the script:

```python
program = ""  # collects the workout text

for div_class in soup.find_all('div', {'class': 'sqs-block-content'}):
    recordThis = False
    for p in div_class.find_all('p'):
        # Start recording at the first paragraph that mentions "PROGRAM"
        if 'PROGRAM' in p.text.upper():
            recordThis = True
        if recordThis:
            program += p.text
            program += '\n'
```

Voilà! A web scraper is born.
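To turn each day's scrape into the searchable history described earlier, the text has to land somewhere. Below is one minimal way to do it with a plain text file. The file layout, the dated header lines, and the names append_workout and search_workouts are all assumptions made for this sketch, and the sample workouts are invented.

```python
import tempfile
from datetime import date
from pathlib import Path


def append_workout(log_path: Path, program: str, day: date) -> None:
    """Append one day's scraped text to the log under a dated header line."""
    with log_path.open("a", encoding="utf-8") as log:
        log.write(f"=== {day.isoformat()} ===\n{program.strip()}\n\n")


def search_workouts(log_path: Path, term: str) -> list[str]:
    """Return the dates of all logged workouts whose text contains `term`."""
    matches = []
    current_day = None
    for line in log_path.read_text(encoding="utf-8").splitlines():
        if line.startswith("=== ") and line.endswith(" ==="):
            current_day = line[4:-4]  # strip the header markers, keep the date
        elif current_day and term.lower() in line.lower() and current_day not in matches:
            matches.append(current_day)
    return matches


# Demo in a temporary folder so nothing is left behind on disk
with tempfile.TemporaryDirectory() as tmp:
    log_file = Path(tmp) / "workouts.txt"
    append_workout(log_file, "PROGRAM\n5 rounds: 10 pull-ups", date(2024, 1, 1))
    append_workout(log_file, "PROGRAM\nBack squat 5x5", date(2024, 1, 2))
    print(search_workouts(log_file, "squat"))
```

In a real run you would pass the program variable built by the loop, along with date.today(), and point log_file at a permanent location.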

Scaling Up Scraping

Two paths exist to move forward.

One way to explore web scraping is to use tools already built. Web Scraper (great name!) has 200,000 users and is simple to use. Also, ParseHub allows users to export scraped data into Excel and Google Sheets.

Additionally, Web Scraper provides a Chrome plug-in that helps visualize how a website is built. Best of all, judging by name, is Octoparse, a powerful scraper with an intuitive interface.

The other path is to build your own. Now that you know the background of web scraping, raising your own little web scraper to crawl and run on its own is a fun endeavor.