You may not have heard of HTTP/2 yet, but it's the most recent update to HTTP. The new protocol standard introduces some new concepts and makes communication between servers and applications faster and more efficient.

What Is HTTP/2?

HyperText Transfer Protocol Version 2, or HTTP/2, is the first major update to HTTP in more than 15 years.

The previous protocol standard, HTTP/1.1, has been in use since 1997, and over the years developers have relied on a mix of clunky workarounds to get around its limitations.

HTTP/2 is based on SPDY (pronounced "speedy"), an open-source experimental protocol started by Google to address some of the issues and limitations of HTTP/1.1.

The Internet Engineering Task Force (IETF) describes the changes this way in Hypertext Transfer Protocol version 2, Draft 17:

"HTTP/2 enables a more efficient use of network resources and a reduced perception of latency by introducing header field compression and allowing multiple concurrent exchanges on the same connection [...]"

"It also allows prioritization of requests, letting more important requests complete more quickly, further improving performance."

"HTTP/2 also enables more efficient processing of messages through use of binary message framing."

"This specification is an alternative to, but does not obsolete, the HTTP/1.1 message syntax. HTTP's existing semantics remain unchanged."

HTTP/2 Is Based on SPDY

By 2012, most modern browsers and many popular sites (Google, Twitter, Facebook, etc.) already supported SPDY. As SPDY's popularity grew, the HTTP Working Group (HTTP-WG) started work on updating the HTTP standard.

From this point onward, SPDY became the foundation and experimental branch for new features in HTTP/2. At the time, we examined how SPDY could improve browsing. Since then, the version 2 standard has been drafted, approved, and published as RFC 7540 (May 2015).

Many of SPDY's features were incorporated into HTTP/2, and Google eventually stopped supporting SPDY in early 2016.

Most other browsers dropped SPDY as well, and with no competing alternative, HTTP/2 is becoming the de facto standard.

While HTTP/2 is not strictly backward compatible with HTTP/1.1, compatibility can be achieved through translation. An HTTP/1.1-only client won't understand an HTTP/2-only server, and vice versa, which is why the new protocol is called HTTP/2 and not HTTP/1.2.

That said, an important part of the HTTP-WG's work was making sure that HTTP/1.1 and HTTP/2 messages can be translated back and forth without any loss of information.

Any new mechanisms or features introduced will also be version-independent and backward compatible with the existing web.
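HTTP semantics (methods, status codes, header fields) are unchanged, so translation mostly means expressing the same message in a different form. The sketch below assumes headers arrive as a plain list of `(name, value)` pairs and uses a hypothetical helper, `h1_to_h2_headers`. It shows how an HTTP/1.1 request line and headers could map onto an HTTP/2 header list:

- The request line becomes the `:method`, `:scheme`, and `:path` pseudo-header fields.
- `Host` becomes `:authority`.
- Header names are lowercased.
- Connection-specific headers, which RFC 7540 forbids in HTTP/2, are dropped.

The default `scheme="https"` is also an assumption.

```python
from typing import Iterable, List, Tuple

Header = Tuple[str, str]

# Connection-specific fields that RFC 7540 (Section 8.1.2.2) does not allow in HTTP/2.
# (TE is a special case, permitted only as "te: trailers"; it is not handled here.)
CONNECTION_SPECIFIC = {"connection", "keep-alive", "proxy-connection",
                       "transfer-encoding", "upgrade"}

def h1_to_h2_headers(method: str, target: str, headers: Iterable[Header],
                     scheme: str = "https") -> List[Header]:
    """Re-express an HTTP/1.1 request line and headers as an HTTP/2 header list."""
    # The request line becomes pseudo-header fields, which must come first.
    pseudo: List[Header] = [(":method", method), (":scheme", scheme), (":path", target)]
    regular: List[Header] = []
    for name, value in headers:
        lname = name.lower()  # HTTP/2 header field names must be lowercase
        if lname == "host":
            pseudo.append((":authority", value))  # Host maps to :authority
        elif lname not in CONNECTION_SPECIFIC:
            regular.append((lname, value))
    return pseudo + regular
```

For example, `GET /index.html` with `Host: example.com`, `Connection: keep-alive`, and `Accept: text/html` becomes four pseudo-header fields followed by a single `accept` field.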


The Benefits and Features of HTTP/2

HTTP/2 comes with some great updates to the HTTP standard. Some of the more important ones are binary framing, multiplexing, stream prioritization, flow control, and server push.

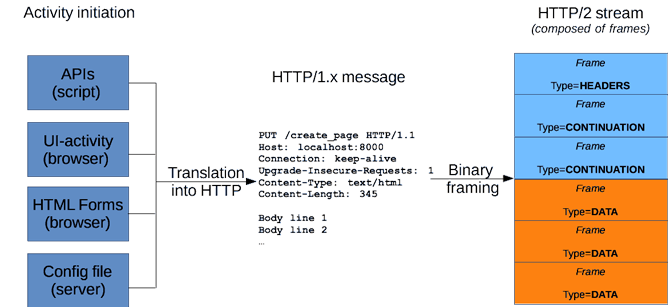

Binary Framing

In HTTP/2, protocol communication is split into an exchange of binary-encoded frames. These frames are mapped to messages that belong to a particular stream, and the streams are then multiplexed (woven together, in a sense) within a single TCP connection.

The new binary framing layer introduces some new terminology: streams, messages, and frames.

- Streams are bidirectional flows of bytes within an established connection, and each one can carry one or more messages. Every stream has a unique identifier and optional priority information.

- Messages are complete sequences of frames that map to a logical HTTP message, such as a request or a response.

- Frames are the smallest unit of communication in HTTP/2, and each one carries a specific type of data (HTTP headers, message payload, etc.). A frame's header identifies, at minimum, the stream the frame belongs to.

Together, these let a single TCP connection carry traffic that previously required several.
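To make the framing concrete: RFC 7540 defines a fixed 9-octet header at the start of every frame. It holds a 24-bit payload length, an 8-bit type, an 8-bit flags field, and a 31-bit stream identifier preceded by one reserved bit. The sketch below packs and unpacks that header with Python's `struct` module. `FrameHeader`, `encode_frame_header`, and `decode_frame_header` are illustrative names, not part of any HTTP/2 library, and payload handling is left out.

```python
import struct
from typing import NamedTuple

# A few frame type codes and one flag from RFC 7540, Section 6.
DATA = 0x0
HEADERS = 0x1
END_STREAM = 0x1

class FrameHeader(NamedTuple):
    length: int      # payload length, 24 bits
    frame_type: int  # 8 bits
    flags: int       # 8 bits, meaning depends on the frame type
    stream_id: int   # 31 bits (one reserved bit precedes it)

def encode_frame_header(h: FrameHeader) -> bytes:
    """Pack a header into its fixed 9-octet wire form (big-endian)."""
    if not 0 <= h.length < 2**24:
        raise ValueError("length must fit in 24 bits")
    if not 0 <= h.stream_id < 2**31:
        raise ValueError("stream ID must fit in 31 bits")
    # struct has no 24-bit code, so the length goes out as 16 high bits + 8 low bits.
    return struct.pack(">HBBBI", h.length >> 8, h.length & 0xFF,
                       h.frame_type, h.flags, h.stream_id)

def decode_frame_header(data: bytes) -> FrameHeader:
    """Unpack the first 9 octets of a frame."""
    high, low, frame_type, flags, stream_id = struct.unpack(">HBBBI", data[:9])
    # Ignore the reserved high bit of the stream identifier, as receivers must.
    return FrameHeader((high << 8) | low, frame_type, flags, stream_id & 0x7FFFFFFF)
```

For example, the header of a 5-byte DATA frame that ends stream 1 encodes to the octets `00 00 05 00 01 00 00 00 01`.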

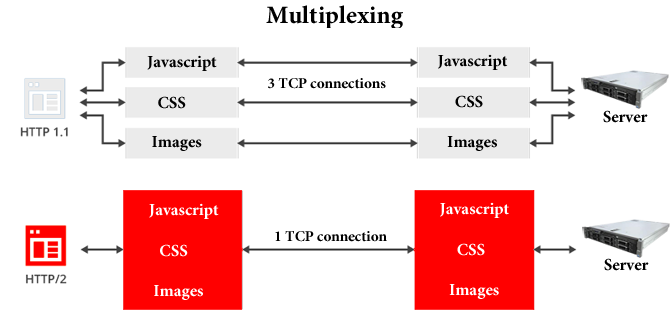

Multiplexing

With HTTP/1.1, only one response can be delivered at a time on each connection, so a browser that wants to make multiple parallel requests has to open additional TCP connections.

HTTP/2 removes this limitation and enables full request and response multiplexing. The client and server can break an HTTP message into independent frames, interleave them, and reassemble them at the other end.
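A toy model can show the idea without any networking. In the sketch below, each message is cut into frames tagged with its stream ID. Frames from different streams are then interleaved round-robin onto one "connection", and the receiver rebuilds each message by stream ID. All function names are hypothetical, and real implementations schedule frames by priority and flow-control windows rather than strict round-robin.

```python
from collections import defaultdict
from itertools import zip_longest
from typing import Dict, List, Tuple

Frame = Tuple[int, bytes]  # (stream ID, chunk of payload)

def split_into_frames(stream_id: int, payload: bytes, max_size: int) -> List[Frame]:
    """Cut one message into frames of at most max_size bytes (empty bodies yield none)."""
    return [(stream_id, payload[i:i + max_size])
            for i in range(0, len(payload), max_size)]

def interleave(messages: Dict[int, bytes], max_size: int = 4) -> List[Frame]:
    """Sender side: take one frame from each stream in turn onto a single connection."""
    per_stream = [split_into_frames(sid, body, max_size) for sid, body in messages.items()]
    wire: List[Frame] = []
    for round_ in zip_longest(*per_stream):
        wire.extend(frame for frame in round_ if frame is not None)
    return wire

def reassemble(wire: List[Frame]) -> Dict[int, bytes]:
    """Receiver side: append each chunk to its stream's buffer, in arrival order."""
    streams: Dict[int, bytes] = defaultdict(bytes)
    for stream_id, chunk in wire:
        streams[stream_id] += chunk
    return dict(streams)
```

Take messages of 8, 4, and 6 bytes on streams 1, 3, and 5 with `max_size=4`. The frames leave in the stream order 1, 3, 5, 1, 5, yet `reassemble` still recovers each message intact.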

Overall, this is the most important enhancement in HTTP/2, as it removes much of the need for multiple connections. That, in turn, brings performance benefits across the whole web stack.

The reduced number of connections means fewer Transport Layer Security (TLS) handshakes, better session reuse, and an overall reduction in client and server resource requirements. This makes applications faster, simpler and cheaper to deploy.

Websites with many external assets (images or scripts) will see the largest performance gains from HTTP/2 multiplexing.

Stream Prioritization and Dependency

HTTP/2 refines multiplexed streams further with stream weights and dependencies. Each stream can be given a weight (an integer between 1 and 256) and can be made explicitly dependent on another stream.

This dependency and weight combination leads to the creation of a prioritization tree, which tells the server how the client would prefer to receive responses.

The server can use the information in the prioritization tree to allocate CPU, memory, and other resources, as well as bandwidth, so that high-priority responses are delivered to the client as efficiently as possible.
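The RFC 7540 prioritization model has two rules. First, streams that share a parent split resources in proportion to their weights. Second, a dependent stream only gets resources once the stream it depends on has finished or cannot make progress. Below is a minimal sketch of that allocation. It assumes the tree is a dict mapping each parent stream ID to `{child_id: weight}`, with stream 0 as the root, and the function names are hypothetical.

```python
from fractions import Fraction
from typing import Dict, Set

Tree = Dict[int, Dict[int, int]]  # parent stream ID -> {child stream ID: weight}

def _has_work(tree: Tree, active: Set[int], sid: int) -> bool:
    """True if the stream or anything that depends on it has data to send."""
    return sid in active or any(_has_work(tree, active, c) for c in tree.get(sid, {}))

def allocate(tree: Tree, active: Set[int], parent: int = 0,
             share: Fraction = Fraction(1)) -> Dict[int, Fraction]:
    """Return each sending stream's fraction of the available bandwidth."""
    # Only siblings with pending data somewhere in their subtree compete.
    children = {c: w for c, w in tree.get(parent, {}).items()
                if _has_work(tree, active, c)}
    total = sum(children.values())
    result: Dict[int, Fraction] = {}
    for child, weight in children.items():
        if not 1 <= weight <= 256:
            raise ValueError(f"stream {child}: weight must be between 1 and 256")
        child_share = share * Fraction(weight, total)
        if child in active:
            # An active stream takes its whole share; its dependents wait.
            result[child] = child_share
        else:
            # A finished stream passes its share down to the streams that depend on it.
            result.update(allocate(tree, active, child, child_share))
    return result
```

Consider stream 1 (weight 12) and stream 3 (weight 4) under the root, with streams 5 and 7 (weight 8 each) depending on stream 1. Here `allocate` gives stream 1 three quarters of the bandwidth and stream 3 one quarter. Once stream 1 finishes, its three quarters are split evenly between streams 5 and 7.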

Flow Control

Flow control in HTTP/2 addresses a problem similar to the one TCP flow control solves for HTTP/1.1 connections. However, HTTP/2 streams are multiplexed within a single TCP connection, so TCP's connection-level flow control is not granular enough to regulate individual streams.

In short, flow control stops streams from interfering with one another, so that one stream cannot block the others on the shared connection. This is what makes multiplexing work in practice. HTTP/2 also allows a variety of flow-control algorithms to be used without requiring protocol changes.

No flow-control algorithm is specified in HTTP/2. Instead, the protocol provides a set of building blocks that clients and servers use to implement their own flow control.

You can find the specifics of these building blocks in the "Flow Control" section (Section 5.2) of the HTTP/2 specification, RFC 7540.
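The key building blocks work like this:

- Flow control is credit-based: each receiver advertises a window for every stream and for the connection as a whole.
- Only DATA frames count against the window.
- The default initial window is 65,535 bytes.
- The receiver grants more credit with WINDOW_UPDATE frames.

The sketch below models only the sender's bookkeeping with hypothetical `Window` and `Sender` classes. It ignores SETTINGS changes to the initial window size and the actual framing.

```python
from typing import Dict

INITIAL_WINDOW = 65_535   # default initial window size in RFC 7540
MAX_WINDOW = 2**31 - 1    # largest window the protocol allows

class Window:
    """Credit a receiver has granted: how many DATA bytes may still be sent."""

    def __init__(self, size: int = INITIAL_WINDOW) -> None:
        self.size = size

    def consume(self, n: int) -> None:
        if n > self.size:
            raise ValueError("flow-control window exceeded")
        self.size -= n

    def update(self, increment: int) -> None:
        """Apply a WINDOW_UPDATE: increments must be 1..2**31-1 and must not overflow."""
        if not 1 <= increment <= MAX_WINDOW:
            raise ValueError("invalid window increment")
        if self.size + increment > MAX_WINDOW:
            raise ValueError("window would exceed 2**31 - 1")
        self.size += increment

class Sender:
    """Tracks one connection-level window plus one window per stream."""

    def __init__(self) -> None:
        self.connection = Window()
        self.streams: Dict[int, Window] = {}

    def window_for(self, stream_id: int) -> Window:
        return self.streams.setdefault(stream_id, Window())

    def send(self, stream_id: int, wanted: int) -> int:
        """Send as many DATA bytes as both windows allow; return the count sent."""
        stream = self.window_for(stream_id)
        n = min(wanted, stream.size, self.connection.size)
        stream.consume(n)
        self.connection.consume(n)
        return n
```

Suppose a sender pushes a 100,000-byte body on stream 1. It can send only 65,535 bytes before both windows are used up. Stream 3 is then blocked by the connection window until a WINDOW_UPDATE arrives, even though its own stream window is untouched.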

Server Push

Your browser will normally request and receive an HTML document from a server when first visiting a page. The server then needs to wait for the browser to parse the HTML document and send a request for the embedded assets (CSS, JavaScript, images, etc.).

In HTTP/1.1, the server cannot send these assets until the browser requests them, and each asset requires a separate request (often spread across several connections, each with its own handshake).

Server push reduces latency by letting the server send these resources before the browser asks for them, since the server already knows the client will need them. In the example above, the server would push the CSS, JavaScript, and images to the browser along with the HTML, so the page displays sooner.

Basically, server push allows a server to send multiple responses for a single client request.

Inlining CSS or JavaScript into an HTML document achieves a similar effect manually: the inlined resource reaches the client without the client having to request it.

This is a big step away from HTTP/1.1's strict one-to-one request-response workflow.
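On the wire, the server announces each pushed resource with a PUSH_PROMISE frame sent on the client's request stream. It then delivers the resource on a new, server-initiated stream, and these use even-numbered IDs. The sketch below models that sequence as a list of events rather than real frames. The `manifest` of which assets each page needs, and the `Event` and `respond_with_push` names, are hypothetical.

```python
from typing import Dict, List, NamedTuple, Optional

class Event(NamedTuple):
    kind: str                          # "PUSH_PROMISE" or "RESPONSE"
    stream_id: int                     # stream the frame is sent on
    path: str                          # resource being promised or delivered
    promised_id: Optional[int] = None  # new stream reserved by a PUSH_PROMISE

def respond_with_push(path: str, request_stream: int,
                      manifest: Dict[str, List[str]],
                      first_push_stream: int = 2) -> List[Event]:
    """Answer one request with promises, the main response, then the pushed responses."""
    events: List[Event] = []
    pushed: List[Event] = []
    push_stream = first_push_stream
    for asset in manifest.get(path, []):
        # Promise each asset on the client's own stream, before the HTML itself,
        # so the browser knows not to request it separately.
        events.append(Event("PUSH_PROMISE", request_stream, asset, push_stream))
        pushed.append(Event("RESPONSE", push_stream, asset))
        push_stream += 2  # server-initiated streams use even identifiers
    events.append(Event("RESPONSE", request_stream, path))
    # The pushed responses then travel on their own reserved streams.
    return events + pushed
```

A single request for `/index.html` thus produces several responses, one per stream. Starting the pushed stream IDs at 2 is a simplification: on a real connection, each new stream ID must be higher than any the server has already used.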

The Limitations of HTTP/2

SPDY had a stricter security policy and required TLS (SSL) encryption for all connections. HTTP/2 does not require encryption, but many servers and services will not serve HTTP/2 without it.

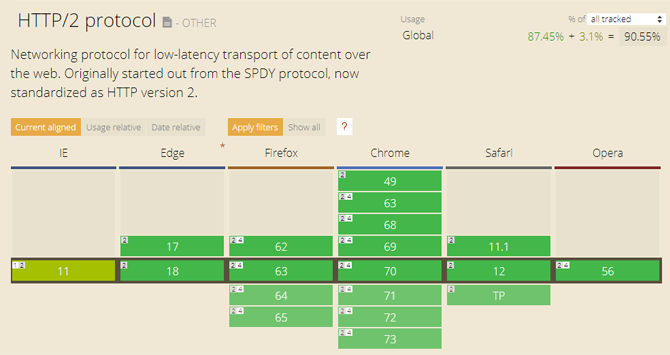

All major browsers support HTTP/2, but none of them support it without encryption. The Can I Use website has a good overview table of current browser support for HTTP/2, as seen above.

Backward compatibility also has a cost: translating between HTTP/1.1 and HTTP/2 adds overhead that can slow down page loads.

There is no real reason why encryption shouldn't be a default or mandatory setup by now. If you already have an SSL certificate on your site, you can improve the security of your HTTPS website by enabling HTTP Strict Transport Security (HSTS).
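HSTS is enabled by sending a `Strict-Transport-Security` response header over HTTPS. As one possible sketch, assuming a Python WSGI application, a small middleware could add that header. The `hsts_middleware` name and its one-year default `max-age` are illustrative choices, not requirements. Many sites set this header in their web server or CDN configuration instead.

```python
def hsts_middleware(app, max_age: int = 31_536_000, include_subdomains: bool = True):
    """Wrap a WSGI app so HTTPS responses carry a Strict-Transport-Security header."""
    value = f"max-age={max_age}"
    if include_subdomains:
        value += "; includeSubDomains"

    def wrapped(environ, start_response):
        def start_with_hsts(status, headers, exc_info=None):
            # Browsers ignore HSTS received over plain HTTP, so only add it to HTTPS responses.
            if environ.get("wsgi.url_scheme") == "https":
                headers = list(headers) + [("Strict-Transport-Security", value)]
            return start_response(status, headers, exc_info)
        return app(environ, start_with_hsts)

    return wrapped
```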

Is HTTP/2 the Next Big Thing?

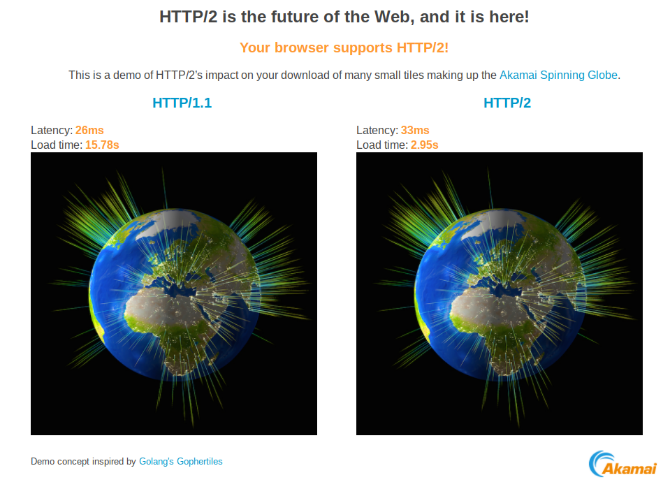

HTTP/2 was proposed as a standard in mid-2015, and most browsers added support for it by the end of that year. HTTP/2 already affects the way the internet works and how applications and servers talk to each other.

Nothing forces anyone to adopt HTTP/2, but so far its benefits have clearly outweighed its drawbacks. From a user's perspective it is also a fairly minor change, one that most people won't even notice.

According to W3Techs, 31.7% of the top 10 million websites currently support HTTP/2. For most site owners, the quickest way to enable HTTP/2 is to use Cloudflare's CDN.

The next proposed standard, HTTP/3, is already in the works and is based on QUIC, another experimental project started by Google. In October of this year, the IETF's HTTP and QUIC Working Groups proposed renaming HTTP-over-QUIC to HTTP/3, making it the candidate for the next version of the standard.

If you are curious, Akamai.com has a quick tool to check if your browser supports HTTP/2. If it doesn't, perhaps consider switching your browser.
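Browsers use HTTP/2 only over TLS, where client and server agree on the protocol during the handshake through the ALPN extension. In ALPN, HTTP/2 is identified as "h2". Below is a rough way to check whether a particular server offers HTTP/2, sketched with Python's standard `ssl` and `socket` modules (the function names are illustrative).

```python
import socket
import ssl
from typing import Optional

def make_h2_context() -> ssl.SSLContext:
    """TLS context that offers HTTP/2 ("h2") first and HTTP/1.1 as a fallback."""
    ctx = ssl.create_default_context()
    ctx.set_alpn_protocols(["h2", "http/1.1"])
    return ctx

def negotiated_protocol(host: str, port: int = 443, timeout: float = 5.0) -> Optional[str]:
    """Connect to host and return the ALPN protocol the server picked, if any."""
    ctx = make_h2_context()
    with socket.create_connection((host, port), timeout=timeout) as sock:
        with ctx.wrap_socket(sock, server_hostname=host) as tls:
            return tls.selected_alpn_protocol()
```

Calling `negotiated_protocol("example.com")` requires network access. It returns `"h2"` if the server agreed to HTTP/2, `"http/1.1"` if it fell back, or `None` if no protocol was negotiated through ALPN.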