The Internet is amazing; I think we can all agree on that. But have you ever sat down and thought about how on earth a website actually makes its way to your computer? What technologies are behind MakeUseOf, for example? It's a lot more than just a simple collection of HTML files and images.

Read on to find out exactly what goes into running, hosting, and serving up a website for your consumption, dear readers.

Hardware

Let's start at the most basic component of hosting a website - the hardware. Essentially, machines used to host a website are really no different to the desktop PCs you or I have at home. They have more memory, backup drives, and often fibre-optic networking connections - but basically they're the same. In fact, any old machine can host a website - it's just a case of how fast it will be able to send pages out to users.

You can read more here about the various kinds of hosting available, from a single machine shared between thousands of websites each paying $5/month, to a full dedicated server capable of running something like MakeUseOf - which costs thousands of dollars a month.

Operating System

Most webserver machines run an optimized flavour of Linux - though there are a good number of servers out there running Windows, generally in corporate environments where web applications are built on ASP or .NET. As of January this year, the most popular Linux distro for web hosting is Debian, followed closely by CentOS (based on Red Hat), both freely available for you to download and try out yourself - and each said to host about 30% of all websites. Google runs its own custom Linux of course, as well as its own custom filesystem.

Webserver Software

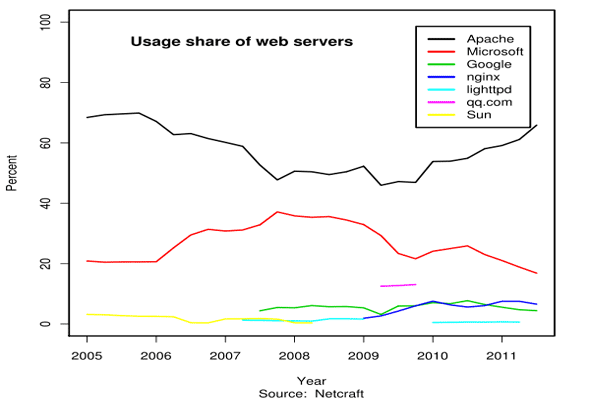

This is where things really start to differentiate. The webserver software is the application that receives incoming requests, and serves up the pages or files. The webserver software itself is largely unconcerned with the language of the webpage it is serving - an Apache server is quite capable of serving pages written in Python, PHP, Ruby, or any number of different languages, though not every webserver is this flexible. Current market share indicates Apache runs around 65% of the top websites, Microsoft IIS 15%, and nginx 10%. nginx is considered to be better at handling high-concurrency sites - that is, where many thousands of users may be on the site at any one time - and is in fact used here at MakeUseOf.
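To make "receives incoming requests, and serves up the pages" a little more concrete, here is a minimal sketch of a toy webserver using Python's standard library. It is nothing like Apache or nginx in capability; the `PAGES` dictionary, `respond` and `make_server` are names I've made up purely for illustration.

```python
from http.server import BaseHTTPRequestHandler, HTTPServer

# Hypothetical in-memory "site": request path -> page body.
PAGES = {"/": b"<h1>Hello from a tiny webserver</h1>"}


def respond(path):
    """Decide what to send back for a request path: (status code, body)."""
    body = PAGES.get(path)
    if body is None:
        return 404, b"<h1>Not Found</h1>"
    return 200, body


class TinyHandler(BaseHTTPRequestHandler):
    """Handles each incoming GET request by looking the path up in PAGES."""

    def do_GET(self):
        status, body = respond(self.path)
        self.send_response(status)
        self.send_header("Content-Type", "text/html; charset=utf-8")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # keep the example quiet instead of logging every request


def make_server(port=0):
    """Bind to localhost only; port 0 asks the OS for any free port."""
    return HTTPServer(("127.0.0.1", port), TinyHandler)

# To try it locally: make_server(8000).serve_forever()
# then open http://127.0.0.1:8000/ in your browser.
```

A real webserver does the same job at heart, but adds concurrency, security, logging, and hooks for handing requests over to a programming language.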

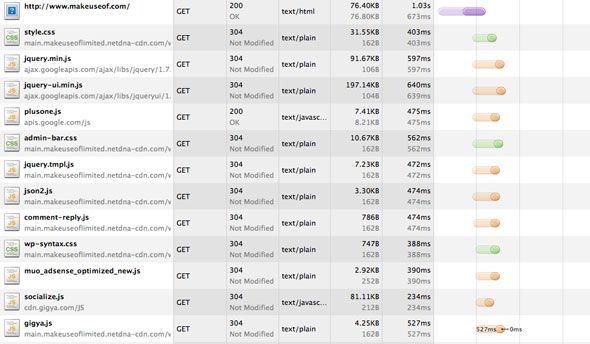

When you load up a website, you open up a socket - a connection - between your computer and the website server. An elaborate and lengthy dance to the HTTP tune then begins with the back and forth of requests, data, and status codes. As you requested this page, our server responded with a 200 - OK, meaning "sure, here you go"; if you visited before, your browser might also ask "hey, I've got a copy of this graphic already in my browser cache, do I really need it again?", to which our server responded 304 - Not modified, or "no, that's cool, we haven't changed it or anything, just use that one".

Occasionally, you'll find the dreaded 404 - Not Found, but I needn't explain that error code to you. If you've ever opened up Firebug or the developer mode of your browser, you'll have seen just how much back and forth goes on - it's not a simple "give me that page" - "OK, here", but actually hundreds of smaller interactions.
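The three status codes above can be sketched as one small decision. This is a simplified illustration, assuming the browser proves it has a cached copy by sending back an ETag (a fingerprint of the file) in an `If-None-Match` header, which is one of the validation mechanisms HTTP offers. The function names and the way the fingerprint is computed are my own choices for the example.

```python
import hashlib


def etag_for(content: bytes) -> str:
    """Fingerprint a file's contents; any change produces a different tag."""
    return '"' + hashlib.sha256(content).hexdigest()[:16] + '"'


def status_for(files, path, if_none_match=None):
    """Return (status code, body) for a request.

    files          -- hypothetical mapping of path -> file contents
    if_none_match  -- the ETag the browser cached earlier, if any
    """
    content = files.get(path)
    if content is None:
        return 404, b""       # Not Found
    if if_none_match == etag_for(content):
        return 304, b""       # Not Modified: browser can reuse its copy
    return 200, content       # OK: send the whole file


files = {"/logo.png": b"pretend image bytes"}
first_visit = status_for(files, "/logo.png")
cached_tag = etag_for(files["/logo.png"])
second_visit = status_for(files, "/logo.png", if_none_match=cached_tag)
```

Note that the 304 response carries no body at all, which is exactly why it saves time and bandwidth.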

Static Files & Content Delivery Networks

On all websites, there are some files that hardly ever change: things like JavaScript files, CSS, images, PDFs or MP3s. These are called static files, and to serve these to you, the webserver software simply has to grab the file and send it. Easy, right? Not so fast.

Unfortunately, sending out large numbers of static files is quite a laborious task due to the size of the files. If you've ever visited a webpage where you can actually sit there watching the images load, it's because the webserver is fetching those files for you itself - and general-purpose webservers simply aren't optimized for that kind of work. Instead, large websites offload all these static files onto what is called a Content Delivery Network - separate servers that are optimized to serve up static files ridiculously fast.

They also achieve this by physically placing servers around the world that mirror each other, so the data has less distance to travel to reach you. Right now, even though the MakeUseOf article you are reading is actually hosted in the United States, the images and JavaScript all come from somewhere much closer to you via a local CDN.
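The "closest mirror wins" idea can be sketched in a few lines. Treat this as an illustration only: real CDNs usually steer you to a nearby server through DNS or network routing rather than by measuring distance like this, and the mirror names and coordinates below are invented for the example.

```python
from math import asin, cos, radians, sin, sqrt

# Hypothetical mirror locations as (latitude, longitude) in degrees.
MIRRORS = {
    "new-york": (40.71, -74.01),
    "london": (51.51, -0.13),
    "tokyo": (35.68, 139.69),
}


def distance_km(a, b):
    """Great-circle distance between two (lat, lon) points (haversine formula)."""
    lat1, lon1, lat2, lon2 = map(radians, (*a, *b))
    h = (sin((lat2 - lat1) / 2) ** 2
         + cos(lat1) * cos(lat2) * sin((lon2 - lon1) / 2) ** 2)
    return 2 * 6371 * asin(sqrt(h))  # 6371 km is roughly Earth's mean radius


def nearest_mirror(visitor):
    """Pick the mirror with the shortest distance to the visitor."""
    return min(MIRRORS, key=lambda name: distance_km(visitor, MIRRORS[name]))


paris = (48.86, 2.35)
choice = nearest_mirror(paris)  # a visitor in Paris gets the London mirror
```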

Dynamic Content - Web Programming Languages

Nearly all modern websites have dynamic content of some sort, whether that means Wordpress adding comments to a blog post, or Google serving up search results. To make a webpage dynamic like this, web programming languages are needed. I wrote before about the various languages available to you (and got into some heated debates for suggesting PHP was the best). Whichever language you choose though, it works in conjunction with the webserver software layer to first dynamically generate the page contents, then serve it up to you.
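As a rough sketch of what "dynamically generate the page contents" means, here is a tiny page built on request from a title and a list of comments. I've used Python for the example rather than PHP; the template and the `render_post` function are made up for illustration and are not how any particular blogging platform does it.

```python
from html import escape
from string import Template

# Hypothetical page template; $title and $comments are filled in per request.
PAGE = Template("<h1>$title</h1>\n<ul>\n$comments\n</ul>")


def render_post(title, comments):
    """Build the HTML for one post from whatever data exists right now."""
    # escape() stops a comment containing HTML from being treated as markup.
    items = "\n".join(f"  <li>{escape(c)}</li>" for c in comments)
    return PAGE.substitute(title=escape(title), comments=items)


html = render_post("How websites work", ["Great read", "What about <b>CDNs</b>?"])
```

Add a comment and the very next request produces a different page, with no HTML file ever being edited by hand.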

Databases

Behind all dynamic websites are databases - massive stores for raw data that allow us to access that data in a variety of ways. For this, a separate database programming language is required, the most popular being SQL (Structured Query Language) and its many variants. Databases contain different tables of data to represent different data structures - one might be a list of articles; another might hold the comments on those articles. Using SQL, we can sort, combine, and present that data in a variety of ways.

In Wordpress for example, a 'post' consists of at least a title and a date, and probably some actual content. A separate table is used to store the comments on that article, with yet another table to store a list of categories, and then yet another to store a list of which categories have been assigned to which article. By cross-referencing and pulling data from all of these, Wordpress gathers together all the information it needs for a particular page of your blog, before applying the theme and presenting it to you, via the webserver software.
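Here is a small sketch of that cross-referencing, using SQLite from Python's standard library. The table and column names are simplified stand-ins I've invented to mirror the description above; they are not Wordpress's actual schema, and the rows are dummy data.

```python
import sqlite3


def build_demo_db():
    """Create an in-memory database with four hypothetical tables."""
    db = sqlite3.connect(":memory:")
    db.executescript("""
        CREATE TABLE posts (id INTEGER PRIMARY KEY, title TEXT, published TEXT);
        CREATE TABLE comments (id INTEGER PRIMARY KEY, post_id INTEGER, body TEXT);
        CREATE TABLE categories (id INTEGER PRIMARY KEY, name TEXT);
        -- Link table: which categories are assigned to which post.
        CREATE TABLE post_categories (post_id INTEGER, category_id INTEGER);

        INSERT INTO posts VALUES (1, 'How websites work', '2000-01-01');
        INSERT INTO comments VALUES (1, 1, 'Great read'), (2, 1, 'Thanks!');
        INSERT INTO categories VALUES (1, 'Internet'), (2, 'Hosting'), (3, 'Unused');
        INSERT INTO post_categories VALUES (1, 1), (1, 2);
    """)
    return db


def load_post(db, post_id):
    """Gather everything needed to display one post."""
    title, published = db.execute(
        "SELECT title, published FROM posts WHERE id = ?", (post_id,)
    ).fetchone()
    comments = [row[0] for row in db.execute(
        "SELECT body FROM comments WHERE post_id = ? ORDER BY id", (post_id,)
    )]
    # JOIN cross-references the link table against the category names.
    categories = [row[0] for row in db.execute(
        """SELECT c.name FROM categories AS c
           JOIN post_categories AS pc ON pc.category_id = c.id
           WHERE pc.post_id = ? ORDER BY c.name""", (post_id,)
    )]
    return {"title": title, "published": published,
            "comments": comments, "categories": categories}


post = load_post(build_demo_db(), 1)
```

That is three queries just to show one post, which gives you a feel for why busy dynamic sites lean so heavily on their database.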

Caching Systems

Serving up static HTML files is pretty easy in terms of computation - the server just has to fetch the file. Dynamic content, on the other hand, requires a lot of work to put the page together, between querying the database and processing the data that comes back. A caching system brings us full circle by creating these dynamic pages, and then basically saving them as static HTML files. When the exact same page is requested again, it needn't be re-computed, thereby speeding up the site.
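The idea can be sketched as follows. To keep it short, this version keeps the finished pages in memory rather than writing them out as HTML files, and the `PageCache` class and `slow_render` function are hypothetical names for the sake of the example.

```python
class PageCache:
    """Remember each rendered page so it is only built once."""

    def __init__(self, render):
        self._render = render   # the expensive "build the page" function
        self._pages = {}        # path -> finished HTML
        self.renders = 0        # how many times we actually did the work

    def get(self, path):
        if path not in self._pages:
            self.renders += 1
            self._pages[path] = self._render(path)
        return self._pages[path]

    def invalidate(self, path):
        """Forget a page, e.g. after a new comment is posted on it."""
        self._pages.pop(path, None)


def slow_render(path):
    # Stand-in for database queries plus templating.
    return f"<h1>Page for {path}</h1>"


cache = PageCache(slow_render)
cache.get("/about")
cache.get("/about")        # served from the cache, no re-render
cache.invalidate("/about")
cache.get("/about")        # content may have changed, so render again
```

The hard part in practice is the `invalidate` step: knowing exactly when a saved page has gone stale.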

Caching is a broad term that can mean many things though - CDNs are a type of cache; there are also database caches for frequently run queries (think of Wordpress asking the database for the title of your blog every single time someone looks at your post - because that's actually what happens). I wrote before about how to set up the popular W3 Total Cache system for Wordpress, also used here at MakeUseOf. Your browser has a cache too - pretty much anything can be cached.

So as you can see, there is in fact an immense amount of work and many technologies involved with hosting a website. However, that's not to say you can't have your own blog set up and running in less than an hour. Scaling it to many thousands of users is where the problems start.

Any questions? Ask away, and I'll do my best to answer. Are you surprised by how much effort can go into a website?