A couple of months ago, HTC launched the U11. Apart from touting high-end specifications, HTC centered the smartphone's marketing on a unique feature called Edge Sense.

The HTC U11 has grip sensors on both sides that recognize when the phone is squeezed. This gesture is used for launching apps and performing actions. Rumors now suggest that Google's second-generation Pixel phone will have a similar feature too.

So, is the squeeze feature a gimmick or is it actually a groundbreaking feature? Let's find out.

A History of Gesture-Based Features in Smartphones

Ever since smartphones gained motion sensors like accelerometers and gyroscopes, several phones have come with gesture-based features.

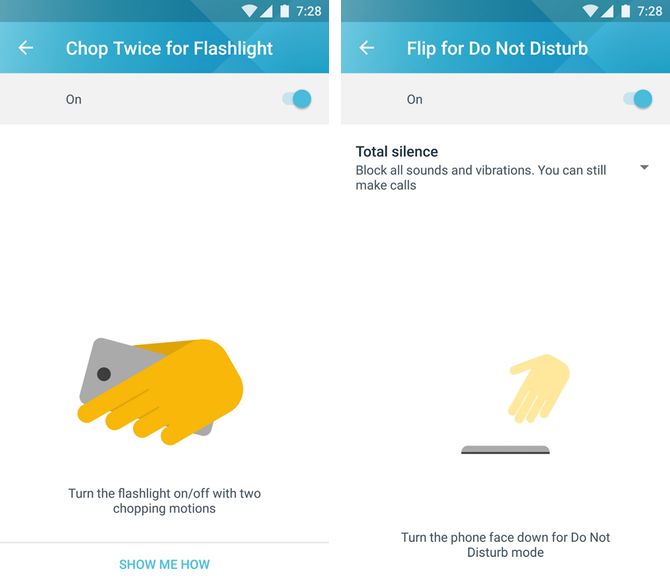

You could flip the Nokia Lumia 900 from 2012 face down to mute incoming phone calls. The first Moto X in 2013 had a signature double-twist gesture to turn on the camera. It was an instant hit and is now available on almost every Motorola smartphone.

The company built on its popularity with a double-chop gesture to toggle the flashlight. Motorola has even put IR sensors on the faces of its flagships since 2014 that detect a waving hand movement to show the time and notifications.

Motorola phones also show the time and notifications when you pick the phone up, thanks to an always-on motion co-processor. Sound familiar? That's because, since iOS 10, recent iPhones have had the "Raise to Wake" feature that does the same thing.

Google's Pixel phones have "Ambient Display", which works in a similar fashion too. Ambient Display on Google Pixel is part of a Settings menu called "Moves", which also has other gestures like swiping on the fingerprint scanner to drop the notification shade.

Not All Gestures Are Revered

As you saw in the examples above, some gestures were popular enough that the manufacturer decided to keep them in future products. Others got so popular that competing manufacturers wanted the same thing on their phones.

But not every gesture gets the limelight. Microsoft's Gestures app for Windows Phone had a rather interesting feature: during a phone call, the speakerphone would turn on automatically if you put the phone on a flat surface. But alas, it never caught on.

The fundamental problem with gestures is that people need to remember they exist. Most of them, including the HTC U11's squeeze gesture, offer no visual cues. So, while something like "Raise to Wake" is pretty intuitive, others like answering calls by putting the phone to your ear may not be. There's trial and error involved, and the fear of consequences may put people off.

For example, that Microsoft Gestures app could put the call on mute if you put the phone face down. But what if the software doesn't put the call on mute? You can't tell for sure if the call is on mute if the phone is facing down. Would you risk using a gesture to do this action, or simply prefer hitting the mute button?

3D Touch is a classic example of how a lack of visual cues can impair usability. To use 3D Touch, the user has to keep hard-pressing different areas of the user interface to find out whether a 3D Touch action is available. This is probably why many iPhone users I've personally polled over the years eventually forget to use 3D Touch at all.

Another example is Google's Now on Tap feature, which provided contextual information about what was shown on the screen.

For example, if someone was talking about a restaurant in a chat, a press-and-hold of the home button would ideally show contextual info like the menu, the distance to the place, and shortcuts to call, etc. But low user response is probably why the feature faded away a year later (it exists as a menu option in Google Assistant).

So What Does This Squeeze Feature Do?

On the HTC U11, the squeeze feature is highly customizable. You can set it to launch apps like the camera, Google Assistant, or any other app of your choice. You can also set it to perform actions like taking a screenshot, toggling the flashlight, starting a voice recording, turning a Wi-Fi hotspot on or off, etc.

An advanced mode lets you set a total of two actions: one for a short squeeze and another for squeeze-and-hold. Also, you can choose from 10 squeeze force levels.
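To make the two-action setup concrete, here is a minimal Python sketch of how a short squeeze could be told apart from a squeeze-and-hold. It is not HTC's implementation: the `classify_squeeze` and `threshold_for_level` functions, the normalized pressure readings, the linear mapping from force level to threshold, and the 0.5-second hold cutoff are all assumptions made for illustration.

```python
from typing import List, Optional, Tuple

FORCE_LEVELS = 10     # the number of selectable force levels mentioned above
HOLD_SECONDS = 0.5    # hypothetical cutoff between a short squeeze and a hold


def threshold_for_level(level: int) -> float:
    """Map a force level (1..10) to a normalized pressure threshold (0..1).

    The linear mapping is an assumption, not HTC's actual calibration.
    """
    if not 1 <= level <= FORCE_LEVELS:
        raise ValueError("level must be between 1 and 10")
    return level / FORCE_LEVELS


def classify_squeeze(samples: List[Tuple[float, float]], level: int) -> Optional[str]:
    """Classify (timestamp_seconds, pressure) samples as 'short', 'hold', or None.

    Only the first continuous stretch at or above the threshold is considered.
    """
    threshold = threshold_for_level(level)
    start = None
    end = None
    for timestamp, pressure in samples:
        if pressure >= threshold:
            if start is None:
                start = timestamp
            end = timestamp
        elif start is not None:
            break  # the squeeze was released
    if start is None:
        return None  # pressure never reached the chosen force level
    return "hold" if end - start >= HOLD_SECONDS else "short"
```

With the same pressure readings, picking a higher force level can turn a registered squeeze into no squeeze at all, which is why the choice of level matters so much in practice.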

A recent update lets Edge Sense perform the pinch-to-zoom action in apps like Google Maps, Photos, and Calendar, as well as answer and end phone calls, dismiss alarms, and play or pause videos. These features are contextual, meaning that if you've set your squeeze action to turn on the flashlight, it won't do that when you're in the Maps app (it'll pinch to zoom instead).
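The contextual behavior described above amounts to a lookup with a fallback, which the following Python sketch illustrates. The app identifiers, action names, and the `resolve_squeeze_action` function are hypothetical and only loosely modeled on the behaviors listed in this article; HTC's actual logic is not public here.

```python
from typing import Dict

# Hypothetical per-context overrides, loosely based on the behaviors listed above.
CONTEXTUAL_ACTIONS: Dict[str, str] = {
    "maps": "zoom",
    "photos": "zoom",
    "calendar": "zoom",
    "incoming_call": "answer_call",
    "alarm": "dismiss_alarm",
    "video_player": "toggle_playback",
}


def resolve_squeeze_action(foreground_app: str, preset_action: str) -> str:
    """Return the contextual action for the current app if one exists,
    otherwise fall back to the action the user has preset."""
    return CONTEXTUAL_ACTIONS.get(foreground_app, preset_action)
```

For instance, `resolve_squeeze_action("maps", "flashlight")` yields the zoom action, while the same squeeze on a hypothetical `"home_screen"` yields the flashlight. The user never sees this table, so the same physical gesture silently changes meaning from app to app.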

You can already see how the squeeze feature is going to face the exact same problem we discussed with other gestures above. In fact, it's worse, because users will have to bear in mind that squeezing the phone in some apps does one thing, while elsewhere it does the action you've preset.

You will also need to fine-tune the pressure sensitivity. As the YouTube video below explains (starting at 2:59), setting the force level too low will cause accidental triggers, while setting it too high will require a really hard press for it to work. And different people will have different preferences for how hard they want to squeeze. So you not only have to tune the grip level to your liking, you also have to use the feature enough to learn what the optimal pressure level is.

Reviewers of the HTC U11 have had mixed reactions to the squeeze feature. While some aren't mincing words in calling it a gimmick, others are intrigued and find it useful.

Rumors around the Google Pixel 2's reported squeeze feature suggest it will be similar to the HTC U11's implementation, and that squeezing will supposedly launch Google Assistant. This sounds similar to the Galaxy S8 and Galaxy S8+, which have dedicated buttons for Samsung's own Bixby virtual assistant.

If this is the case, then it's a little redundant too, because even on current Pixel and Nexus phones, you can summon the Google Assistant by saying, "Ok Google" -- even when the phones are on standby. Why have a dedicated function?

So It's a Gimmick?

At least when it comes to its implementation in the HTC U11, I believe it's a gimmick. Although some users may find it useful, due to the lack of consistent behavior (a side-effect of its ultra-customizability), I fear the average user will forget about it over time. But that doesn't mean the idea of having sensors on the sides of the phone is entirely bad.

Last year, we got to see a Microsoft prototype Windows Phone codenamed "McLaren." Other than detecting fingers before they touched the screen, the accompanying sensors also did something very interesting: they told the phone how it was being held.

This meant the operating system could accurately change the interface orientation without a manual orientation lock. So, if you gripped the phone by its sides, the interface would remain in portrait mode even if the phone itself was horizontal (say, when you're lying in bed). Only when the phone was held by its top and bottom would the interface switch to landscape mode.
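The grip-based orientation rule can be sketched in a few lines of Python. This is an illustration of the idea as described here, not Microsoft's code: the `Grip` enum, the `choose_orientation` function, and the fallback to the motion-sensor reading when no grip is detected are all assumptions.

```python
from enum import Enum


class Grip(Enum):
    SIDES = "sides"                    # held by the long edges
    TOP_AND_BOTTOM = "top_and_bottom"  # held by the short edges
    NONE = "none"                      # no grip detected


def choose_orientation(grip: Grip, sensor_orientation: str) -> str:
    """Pick the interface orientation, letting the detected grip override
    the orientation reported by the motion sensors.

    Falling back to the sensor reading when there is no grip is an
    assumption made for this sketch.
    """
    if grip is Grip.SIDES:
        return "portrait"
    if grip is Grip.TOP_AND_BOTTOM:
        return "landscape"
    return sensor_orientation
```

In the lying-in-bed case, the motion sensors would report landscape, but `choose_orientation(Grip.SIDES, "landscape")` still returns portrait because the grip takes priority.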

Another feature born out of these side sensors is keeping the phone display on for as long as it's held, disregarding the preset screen timeout. Samsung phones have had a similar feature, but it was unreliable in low light since it used the front-facing camera to detect the face.

I think that there is potential for having sensors on the side of the phone. But as for squeeze shortcuts, the past suggests that gestures have to be highly intuitive and superbly easy for them to stick.

What do you think? Do you agree or disagree with our observation? What other useful gestures have you come across? Let it flow in the comments below.