Simultaneous localization and mapping (SLAM) is likely not a phrase you use every day. However, several of the latest cool technological marvels use this process every millisecond of their lifespan.

What is SLAM? Why do we need it? And what are these cool technologies you speak of?

From Acronym to Abstract Idea

Here's a quick game for you. Which one of these does not belong?

- Self-driving cars

- Augmented reality apps

- Autonomous aerial and underwater vehicles

- Mixed reality wearables

- The Roomba

You may think the answer is easily the last item in the list. In a way, you are right. In another way, this was a trick game as all of those items are related.

The real question of the (very cool) game is this: What makes all these technologies feasible? The answer: simultaneous localization and mapping, or SLAM! as the cool kids say it.

In a general sense, the purpose of SLAM algorithms is easy enough to state. A robot uses simultaneous localization and mapping to estimate its position and orientation (together called its pose) in space while creating a map of its environment. This allows the robot to identify where it is and how to move through some unknown space.

So, yes, at its core all this fancy-schmancy algorithm does is estimate position. Another popular technology, the Global Positioning System (or GPS), has been estimating position since the first Gulf War in the early 1990s.

Differentiating Between SLAM and GPS

So then why the need for a new algorithm? GPS has two inherent problems. First, while GPS is accurate on a global scale, both precision and accuracy diminish at the scale of a room, a table, or a small intersection. GPS is accurate down to about a meter, but what about the centimeter? The millimeter?

Second, GPS doesn't work well underwater. By "not well" I mean not at all. Similarly, performance is spotty inside buildings with thick concrete walls. Or in basements. You get the idea. GPS is a satellite-based system, so it suffers from physical limitations: its radio signals struggle to get through water and thick concrete.

So SLAM algorithms aim to give an improved sense of position for our most advanced gadgets and machines.

These devices already have a litany of sensors and peripherals. SLAM algorithms utilize the data from as many of these as possible by using some math and statistics.

Chicken or Egg? Position or Map?

Math and statistics are needed to answer a complex quandary: is position used to create the map of the surroundings or is the map of the surroundings used to calculate position?

Thought experiment time! You are inter-dimensionally warped to an unfamiliar place. What is the first thing you do? Panic? OK, well calm down, take a breath. Take another. Now, what is the second thing you do? Look around and try to find something familiar. A chair is to your left. A plant is to your right. A coffee table is in front of you.

Next, once the paralyzing fear of "Where the hell am I?" wears off, you start to move. Wait, how does movement work in this dimension? Take a step forward. The chair and plant are getting smaller and the table is getting larger. Now, you can confirm that you are in fact moving forward.

Observations are key to improving the accuracy of the SLAM estimation. In the video below, as the robot moves from marker to marker, it builds a better map of the environment.

Back in the other dimension, the more you walk around, the more you orient yourself. Stepping in all directions confirms that movement in this dimension is similar to your home dimension. As you go to the right, the plant looms larger. Helpfully, you see other things that you identify as landmarks in this new world, which allow you to wander more confidently.

This is essentially the process of SLAM.

Inputs to the Process

To make these estimations, the algorithms use several pieces of data that can be categorized as internal or external. For your inter-dimensional transport example (admit it, you had a fun trip), the internal measurements are the size and direction of your steps.

The external measurements come in the form of images. Identifying landmarks such as the plant, chair, and table is an easy task for the eyes and brain. The most powerful processor known, the human brain, is able to take these images and not just identify objects, but also estimate the distance to each of them.

Unfortunately (or fortunately, depending on your fear of SkyNet), robots do not have a human brain as a processor. Machines rely on silicon chips, with human-written code as a brain.

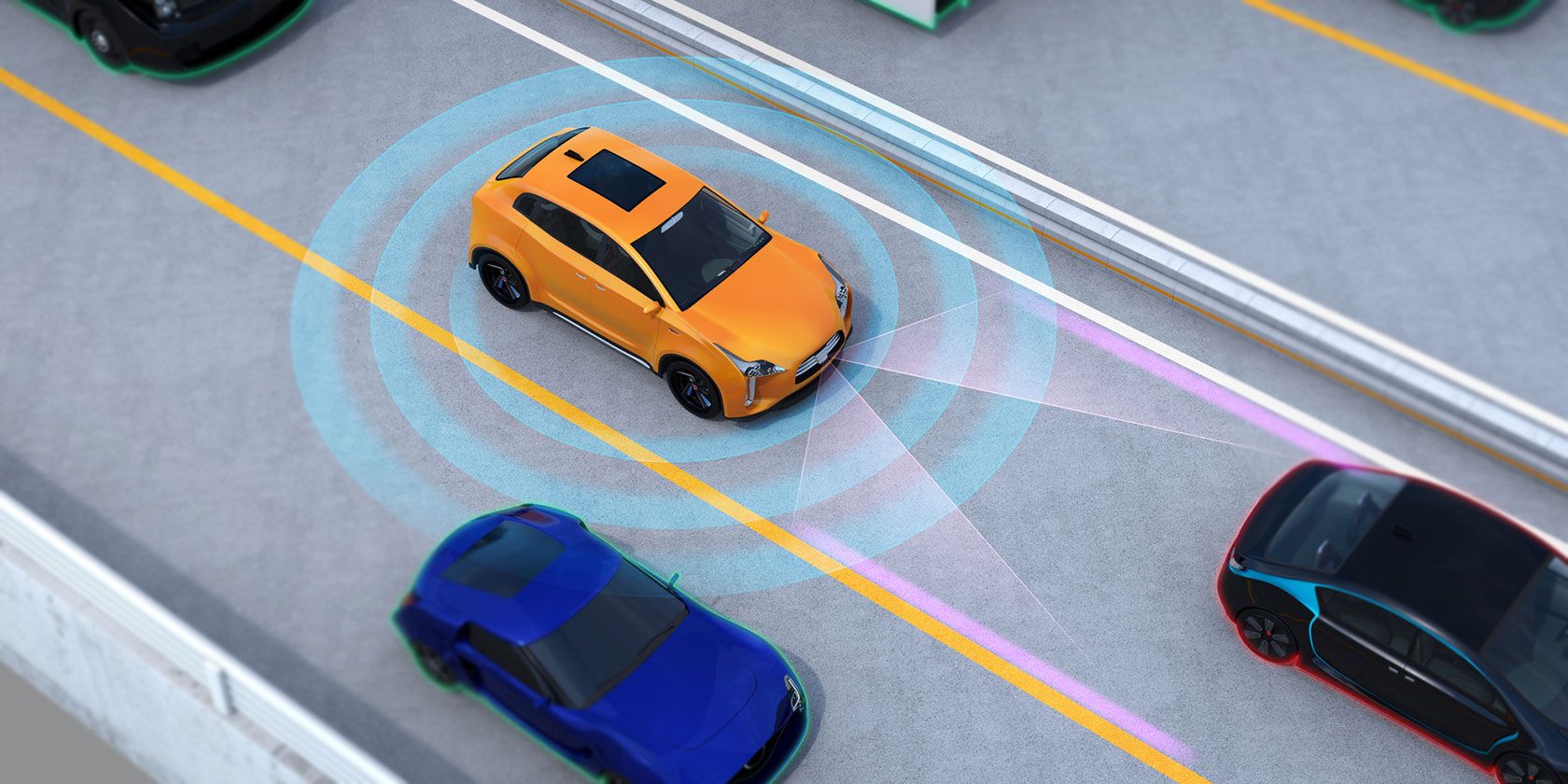

Other pieces of machinery make the internal measurements. Peripherals such as gyroscopes or a full inertial measurement unit (IMU) are helpful in doing this. Robots such as self-driving cars also use wheel odometry (how far each wheel has turned) as an internal measurement.
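
To make the idea of internal measurements a little more concrete, here is a minimal sketch of dead reckoning: updating a pose using nothing but "I turned this much, then rolled this far." The function name `dead_reckon` and the turn-then-drive motion model are assumptions made for illustration, not something taken from a particular robot.

```python
import math

def dead_reckon(pose, distance, turn):
    """Advance a 2D pose (x, y, heading in radians) by one odometry step.

    Assumed motion model: the robot turns by `turn` radians first, then
    drives `distance` meters along its new heading.
    """
    x, y, heading = pose
    heading = (heading + turn) % (2 * math.pi)
    x += distance * math.cos(heading)
    y += distance * math.sin(heading)
    return (x, y, heading)

# Drive 1 m forward, turn left 90 degrees and drive 1 m, then 1 m more.
pose = (0.0, 0.0, 0.0)
for distance, turn in [(1.0, 0.0), (1.0, math.pi / 2), (1.0, 0.0)]:
    pose = dead_reckon(pose, distance, turn)

print(pose)  # roughly x = 1, y = 2, heading = pi / 2
```

On its own, this kind of estimate drifts: every small error in `distance` or `turn` is carried into all later poses, which is why external measurements are needed as well.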

Externally, self-driving cars and other robots use LIDAR. Similar to how radar uses radio waves, LIDAR measures reflected light pulses to determine distance. Like an infrared depth sensor, LIDAR typically uses near-infrared light, although some systems use ultraviolet or visible light.

LIDAR sends out tens of thousands of pulses per second to create an extremely high-definition three-dimensional point cloud map. So, yes, the next time a LIDAR-equipped self-driving car rolls by, it will shoot you with a laser. Lots of times.
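
The distance part of this is simple arithmetic: time how long a pulse takes to come back, then halve the round trip. The sketch below shows that calculation and how one reading could become one point in a point cloud. The function names and the angle convention (azimuth around the vertical axis, elevation above the horizon) are assumptions for illustration, not any vendor's API.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # meters per second

def range_from_echo(round_trip_seconds):
    """Distance to the reflecting surface; the pulse travels out and back."""
    return SPEED_OF_LIGHT * round_trip_seconds / 2.0

def to_point(distance, azimuth, elevation):
    """Convert one range reading plus beam angles (radians) into x, y, z."""
    horizontal = distance * math.cos(elevation)
    return (horizontal * math.cos(azimuth),
            horizontal * math.sin(azimuth),
            distance * math.sin(elevation))

distance = range_from_echo(100e-9)  # a 100 nanosecond round trip
point = to_point(distance, azimuth=math.radians(90), elevation=0.0)

print(f"{distance:.2f} m")  # about 14.99 m
```

Repeat that for every pulse as the beam sweeps around, and the collected points form the point cloud.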

Additionally, SLAM algorithms use still images and computer vision techniques as an external measurement. This can be done with a single camera, but it can be made even more accurate with a stereo pair.
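
Why does a second camera help? With two cameras a known distance apart, the same object lands at slightly different horizontal positions in the two images, and that shift (the disparity) gives depth directly. Here is a minimal sketch, assuming an idealized, rectified pair of pinhole cameras. The function name and the example numbers are made up for illustration.

```python
def stereo_depth(focal_length_px, baseline_m, disparity_px):
    """Depth in meters from the standard relation Z = f * B / d.

    focal_length_px: focal length in pixels (assumed equal for both cameras)
    baseline_m:      distance between the two camera centers, in meters
    disparity_px:    horizontal shift of the same feature between the images
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a point in front of the cameras")
    return focal_length_px * baseline_m / disparity_px

# Hypothetical rig: 700 px focal length, cameras 12 cm apart.
near = stereo_depth(700.0, 0.12, 42.0)  # large shift, close object
far = stereo_depth(700.0, 0.12, 8.4)    # small shift, distant object

print(f"near: {near:.1f} m, far: {far:.1f} m")  # near: 2.0 m, far: 10.0 m
```

The smaller the disparity, the farther away the object, which is the same reason distant scenery barely seems to move when you shift your head.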

Inside the Black Box

Internal measurements will update the estimated position, which can be used to update the external map. External measurements will update the estimated map, which can be used to update the position. You can think of it as an inference problem, and the idea is to find the optimal solution.

A common way to do this is through probability. Techniques such as a particle filter approximate position and mapping using Bayesian statistical inference.

A particle filter uses a set number of particles spread out according to a Gaussian distribution. Each particle "predicts" the robot's current position. A probability is assigned to each particle. All particles start with the same probability.

When measurements are made that confirm each other (such as step forward = table getting bigger), the particles that are "correct" in their position are incrementally given higher probabilities. Particles that are way off are assigned lower probabilities.
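
Here is a toy version of that loop, for a robot that can only move along a straight line toward one landmark (the coffee table). To keep it short, the sketch assumes the table's position is already known, so it only covers the "where am I?" half of SLAM. The function names, noise values, and resampling step are illustrative assumptions, not a reference implementation.

```python
import math
import random

def likelihood(measured, expected, sigma):
    """Unnormalized Gaussian score: how well a measurement fits a prediction."""
    return math.exp(-0.5 * ((measured - expected) / sigma) ** 2)

def particle_filter_step(particles, step, measured_range, landmark,
                         motion_sigma, sensor_sigma, rng):
    """One predict / update / resample cycle for a robot on a 1D line."""
    # Predict: apply the internal measurement (the step) to every particle,
    # plus some noise, because odometry is never perfect.
    moved = [p + step + rng.gauss(0.0, motion_sigma) for p in particles]

    # Update: particles enter the cycle equally probable; score each one by
    # how well it explains the measured range to the landmark.
    weights = [likelihood(measured_range, landmark - p, sensor_sigma)
               for p in moved]
    if sum(weights) == 0.0:
        # Every particle is way off; fall back to equal weights.
        weights = [1.0] * len(moved)

    # Resample: likely particles get copied, unlikely ones tend to die out.
    return rng.choices(moved, weights=weights, k=len(moved))

rng = random.Random(0)
landmark = 10.0      # the coffee table, at a known spot on the line
true_position = 2.0  # where the robot really is (hidden from the filter)
particles = [rng.gauss(0.0, 2.0) for _ in range(500)]  # initial Gaussian guess

for _ in range(5):
    true_position += 1.0  # take a step forward
    measured_range = landmark - true_position + rng.gauss(0.0, 0.2)
    particles = particle_filter_step(particles, 1.0, measured_range, landmark,
                                     motion_sigma=0.05, sensor_sigma=0.2, rng=rng)

estimate = sum(particles) / len(particles)
print(f"true position: {true_position:.2f}, estimate: {estimate:.2f}")
```

The initial guess is centered two meters away from the truth, yet after a few steps the surviving particles cluster around the robot's real position, because only those particles agree with both the steps taken and the shrinking distance to the table.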

The more landmarks a robot can identify, the better. Landmarks provide feedback to the algorithm and allow for more precise calculations.
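
A quick way to see why more landmarks help is to simulate it. The sketch below assumes a one-dimensional world, landmarks at known positions, and independent Gaussian noise on each range reading. It averages the position implied by each landmark and measures the typical error over many trials. All names and numbers are illustrative assumptions.

```python
import math
import random

def estimate_position(true_position, landmarks, sensor_sigma, rng):
    """Average the position implied by a noisy range to each known landmark."""
    guesses = []
    for landmark in landmarks:
        measured_range = (landmark - true_position) + rng.gauss(0.0, sensor_sigma)
        guesses.append(landmark - measured_range)
    return sum(guesses) / len(guesses)

def rms_error(num_landmarks, trials=2000, sensor_sigma=0.5, seed=1):
    """Root-mean-square position error over many simulated trials."""
    rng = random.Random(seed)
    landmarks = [5.0 + 2.0 * i for i in range(num_landmarks)]
    true_position = 1.0
    squared = 0.0
    for _ in range(trials):
        estimate = estimate_position(true_position, landmarks, sensor_sigma, rng)
        squared += (estimate - true_position) ** 2
    return math.sqrt(squared / trials)

errors = {n: rms_error(n) for n in (1, 4, 16)}
for n, error in errors.items():
    print(f"{n:2d} landmarks: typical error {error:.3f} m")
```

Under these assumptions the error shrinks roughly with the square root of the number of landmarks, so going from 1 landmark to 16 cuts it to about a quarter.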

Current Applications Using SLAM Algorithms

Let's break this down, cool piece of technology by cool piece of technology.

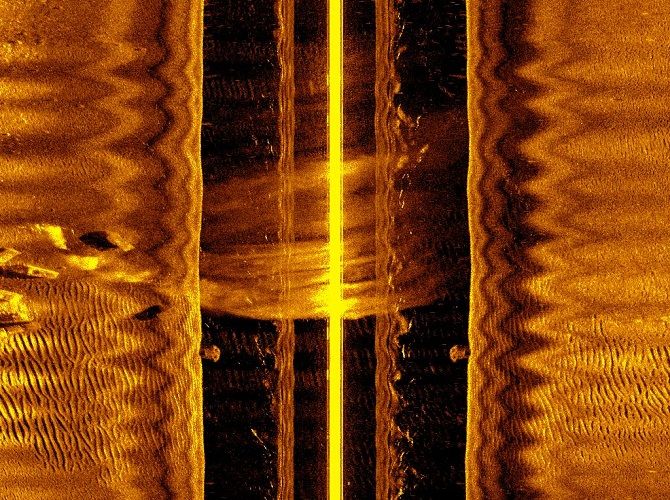

Autonomous Underwater Vehicles (AUVs)

Unmanned submarines can operate autonomously using SLAM techniques. An internal IMU provides acceleration and motion data along three axes. Additionally, AUVs use bottom-facing sonar for depth estimations. Side-scan sonar creates images of the sea floor, with a range of a couple hundred meters.

Mixed Reality Wearables

Microsoft and Magic Leap have produced wearable glasses that introduce Mixed Reality applications. Estimating position and creating a map is crucial for these wearables. The devices use the map to place virtual objects on top of real objects and have them interact with each other.

Since these wearables are small, they cannot use large peripherals such as LIDAR or sonar. Instead, smaller infrared depth sensors and outward-facing cameras are used to map an environment.

Self-Driving Cars

Autonomous cars have a little bit of an advantage over wearables. With a much bigger physical size, cars can hold bigger computers and have more peripherals to make internal and external measurements. In many ways, self-driving cars represent the future of technology, both in terms of software and hardware.

SLAM Technology Is Improving

With SLAM technology being used in so many different ways, it should keep maturing quickly. Once self-driving cars (and other vehicles) are seen on a daily basis, you'll know that simultaneous localization and mapping is ready for everyone to use.

Self-driving technology is improving every day.

Image Credit: chesky_w/Depositphotos