We talk all the time about computers understanding us. We say that Google "knew" what we were searching for, or that Cortana "got" what we were saying, but "understanding" is a very difficult concept to pin down, especially when it comes to computers.

One field of computational linguistics, called natural language processing (NLP), is working on this particularly tough problem. It's a fascinating field right now, and once you have an idea of how it works, you'll start to see its effects everywhere.

A quick note: This article has a few examples of a computer responding to speech, like when you ask Siri for something. Converting audible speech into a format a computer can work with is called speech recognition. NLP isn't concerned with that step (at least in the sense we're discussing here); it only comes into play once the text is ready. Many applications need both, but they're two very different problems.

Defining Understanding

Before we get into how computers deal with natural language, we need to define a few things.

First of all, we need to define natural language. This is an easy one: it's any language that developed naturally through everyday use by people. It doesn't include constructed languages (Klingon, Esperanto) or computer programming languages. You use natural language when you talk to your friends. You also probably use it to talk to your digital personal assistant.

So what do we mean when we say understanding? Well, it's complex. What does it mean to understand a sentence? Maybe you'd say that it means you now have the intended content of the message in your brain. Understanding a concept might mean you can apply that concept to other thoughts.

Dictionary definitions are nebulous. There's no intuitive answer. Philosophers have argued over things like this for centuries.

For our purposes, we're going to say that understanding is the ability to accurately extract meaning from natural language. For a computer to understand, it needs to accurately process an incoming stream of language, convert that stream into units of meaning, and be able to respond to the input with something useful.

Obviously this is all very vague. But it's the best we can do with limited space (and without a neurophilosophy degree). If a computer can offer a human-like, or at least useful, response to a stream of natural language input, we can say it understands. This is the definition we'll use going forward.

A Complex Problem

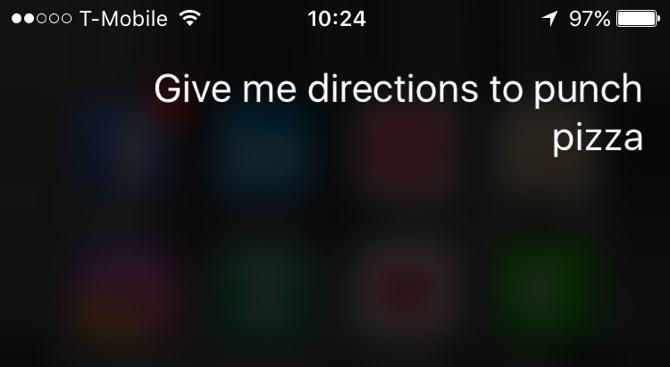

Natural language is very difficult for a computer to deal with. You might say, "Siri, give me directions to Punch Pizza," whereas I might say, "Siri, Punch Pizza route, please."

In your statement, Siri might pick out the keyphrase "give me directions," then run a command related to the search term "Punch Pizza." In mine, however, Siri needs to pick out "route" as the keyword and know that "Punch Pizza" is where I want to go, not "please." And that's just a simple example.
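To make the difference concrete, here is a minimal Python sketch of the keyword approach described above. It is not how Siri works: the `extract_destination` function and its two hand-written patterns are hypothetical, one keyed on "directions to" and one keyed on "route." Anchoring the second pattern on "route" is what keeps "please" out of the destination.

```python
import re

# Hypothetical trigger patterns for a "get directions" request.
# Each one captures the destination in a group named "place".
DIRECTION_PATTERNS = [
    # "give me directions to Punch Pizza" -> everything after "directions to"
    re.compile(r"\bdirections to (?P<place>.+?)\s*[.!?]?$", re.IGNORECASE),
    # "Siri, Punch Pizza route, please" -> everything before "route",
    # skipping an optional leading wake word such as "Siri,"
    re.compile(r"^(?:\w+,\s*)?(?P<place>.+?)\s+route\b", re.IGNORECASE),
]


def extract_destination(utterance):
    """Return the destination named in a directions request, or None."""
    for pattern in DIRECTION_PATTERNS:
        match = pattern.search(utterance)
        if match:
            return match.group("place").strip(" ,")
    return None  # no known phrasing matched


print(extract_destination("Siri, give me directions to Punch Pizza"))  # Punch Pizza
print(extract_destination("Siri, Punch Pizza route, please."))         # Punch Pizza
```

Rules like these fail the moment someone phrases the request a third way, which is a big part of why NLP systems learn from data rather than relying only on hand-written patterns.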

Think about an artificial intelligence that reads emails and decides whether or not they might be scams. Or one that monitors social media posts to gauge interest in a particular company. I once worked on a project where we had to teach a computer to read medical notes (which have all sorts of strange conventions) and glean information from them.

This meant the system had to deal with abbreviations, odd syntax, occasional misspellings, and a wide variety of other quirks in the notes. It's a highly complex task that can be difficult even for experienced humans, let alone machines.

Setting an Example

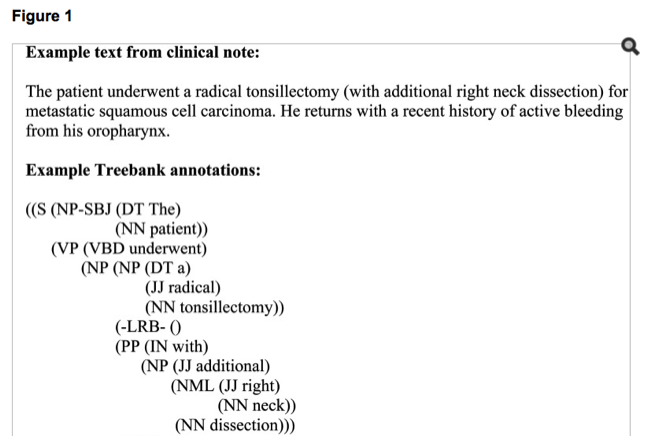

In this particular project, I was part of the team that was teaching the computer to recognize specific words and the relationships between words. The first step of the process was to show the computer the information that each note contained, so we annotated the notes.

There were a huge number of different categories of entities and relations. Take the sentence "Ms. Green's headache was treated with ibuprofen," for example. "Ms. Green" was tagged as PERSON, "headache" as SIGN OR SYMPTOM, and "ibuprofen" as MEDICATION. Then Ms. Green was linked to the headache with a PRESENTS relation. Finally, ibuprofen was linked to the headache with a TREATS relation.
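An annotation like this can be stored as a small data structure. The sketch below shows one hypothetical layout; the `Entity` and `Relation` classes and the use of character offsets are assumptions for illustration, not the project's actual format. Each entity records its text, label, and position in the sentence, and each relation points from one entity to another.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Entity:
    text: str    # the words in the note, e.g. "ibuprofen"
    label: str   # e.g. PERSON, SIGN OR SYMPTOM, MEDICATION
    start: int   # character offset where the mention begins
    end: int     # character offset just past the mention


@dataclass(frozen=True)
class Relation:
    label: str       # e.g. PRESENTS, TREATS
    source: Entity
    target: Entity


def make_entity(sentence, text, label):
    """Hypothetical helper: locate the first occurrence of text and wrap it as an Entity."""
    start = sentence.index(text)
    return Entity(text, label, start, start + len(text))


sentence = "Ms. Green's headache was treated with ibuprofen."
green = make_entity(sentence, "Ms. Green", "PERSON")
headache = make_entity(sentence, "headache", "SIGN OR SYMPTOM")
ibuprofen = make_entity(sentence, "ibuprofen", "MEDICATION")

relations = [
    Relation("PRESENTS", green, headache),   # Ms. Green presents with a headache
    Relation("TREATS", ibuprofen, headache),  # ibuprofen treats the headache
]

for rel in relations:
    print(f"{rel.source.text} --{rel.label}--> {rel.target.text}")
```

Storing offsets rather than just the words lets a later evaluation tell apart two mentions of the same word in one note.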

We tagged thousands of notes this way. We coded diagnoses, treatments, symptoms, underlying causes, co-morbidities, dosages, and everything else you could possibly think of related to medicine. Other annotation teams coded other information, like syntax. In the end, we had a corpus full of medical notes that the AI could "read."

Reading is just as hard to define as understanding. The computer can easily see that ibuprofen treats a headache, but when it learns that information, it's converted into meaningless (to us) ones and zeroes. It can certainly give back information that seems human-like and is useful, but does that constitute understanding? Again, it's largely a philosophical question.

The Real Learning

At this point, the team applied a number of machine-learning algorithms to the annotated notes. Programmers developed different routines for tagging parts of speech, analyzing dependency and constituency structure, and labeling semantic roles. In essence, the AI was learning to "read" the notes.
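The real routines were far more sophisticated, but the core idea of learning from annotations already shows up in the simplest possible part-of-speech tagger: give each word whatever tag human annotators assigned it most often. This sketch is that most-frequent-tag baseline, not the project's method; the three-sentence corpus, the tag names, and the NOUN fallback for unseen words are all assumptions.

```python
from collections import Counter, defaultdict


def train_most_frequent_tagger(tagged_sentences):
    """Learn, for each word, the tag annotators gave it most often."""
    counts = defaultdict(Counter)
    for sentence in tagged_sentences:
        for word, tag in sentence:
            counts[word.lower()][tag] += 1
    return {word: tag_counts.most_common(1)[0][0] for word, tag_counts in counts.items()}


def tag(model, words, default="NOUN"):
    """Tag each word with its most frequent training tag; unseen words get the default."""
    return [(w, model.get(w.lower(), default)) for w in words]


# Toy hand-annotated corpus (tag names in the style of Universal Dependencies).
corpus = [
    [("the", "DET"), ("headache", "NOUN"), ("was", "AUX"), ("treated", "VERB"),
     ("with", "ADP"), ("ibuprofen", "NOUN")],
    [("the", "DET"), ("patient", "NOUN"), ("was", "AUX"), ("given", "VERB"),
     ("aspirin", "NOUN")],
    [("pain", "NOUN"), ("treated", "VERB"), ("with", "ADP"), ("rest", "NOUN")],
]

model = train_most_frequent_tagger(corpus)
print(tag(model, ["The", "rash", "was", "treated", "with", "cream"]))
```

"Rash" and "cream" never appear in the training data, so they fall back to NOUN; handling unseen words well is one of the things more sophisticated taggers are built to do.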

Researchers could eventually test it by giving it a medical note and asking it to label each entity and relation. When the computer's labels matched the human annotations, you could say it had learned to read medical notes.
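One common way to score how well a system reproduces human annotations is precision (what fraction of the computer's labels were correct) and recall (what fraction of the human labels it found), often combined into an F1 score. This sketch compares hypothetical annotations as sets of (text, label) pairs; real evaluations usually also match positions in the text.

```python
def precision_recall_f1(gold, predicted):
    """Compare predicted annotations against human (gold) annotations."""
    gold, predicted = set(gold), set(predicted)
    true_positives = len(gold & predicted)  # labels that match exactly
    precision = true_positives / len(predicted) if predicted else 0.0
    recall = true_positives / len(gold) if gold else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


gold = {("Ms. Green", "PERSON"), ("headache", "SIGN OR SYMPTOM"), ("ibuprofen", "MEDICATION")}
# Hypothetical system output that mislabels ibuprofen.
predicted = {("Ms. Green", "PERSON"), ("headache", "SIGN OR SYMPTOM"), ("ibuprofen", "SIGN OR SYMPTOM")}

print(precision_recall_f1(gold, predicted))
```

Here two of the three predicted labels are right and two of the three human labels were found, so precision, recall, and F1 all come out to 2/3.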

After that, it was just a matter of gathering a huge amount of statistics on what it had read: which drugs are used to treat which disorders, which treatments are most effective, the underlying causes of specific sets of symptoms, and so on. At the end of the process, the AI would be able to answer medical questions based on evidence from actual medical notes, without having to rely on textbooks, pharmaceutical companies, or intuition.
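Once relations can be extracted automatically, the statistics step can start as simple counting. In this sketch the relation triples are hypothetical stand-ins for what a trained model might pull out of many notes. Keep in mind that a raw count tells you how often a drug is recorded for a condition, not how well it works; judging effectiveness would need more information, such as outcomes.

```python
from collections import Counter, defaultdict


def count_treatments(extracted_relations):
    """Tally how often each source entity is recorded as TREATING each condition."""
    tallies = defaultdict(Counter)
    for source, label, target in extracted_relations:
        if label == "TREATS":  # ignore other relation types, e.g. PRESENTS
            tallies[target][source] += 1
    return tallies


# Hypothetical (source, relation, target) triples extracted from many notes.
relations = [
    ("ibuprofen", "TREATS", "headache"),
    ("acetaminophen", "TREATS", "headache"),
    ("ibuprofen", "TREATS", "headache"),
    ("amoxicillin", "TREATS", "ear infection"),
    ("Ms. Green", "PRESENTS", "headache"),
]

tallies = count_treatments(relations)
print(tallies["headache"].most_common())  # [('ibuprofen', 2), ('acetaminophen', 1)]
```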

Deep Learning

Let's look at another example. A neural network from Google's DeepMind is learning to read news articles. Like the biomedical AI above, researchers wanted it to pull out relevant and useful information from larger pieces of text.

Training an AI on medical information was tough enough, so you can imagine how much annotated data you'd need to teach an AI to read general news articles. Hiring enough annotators and going through enough information would be prohibitively expensive and time-consuming.

So the DeepMind team turned to another source: news websites. Specifically, CNN and the Daily Mail.

Why these sites? Because they provide bullet-pointed summaries of their articles that don't simply pull sentences from the article itself. That means the AI has something to learn from. Researchers basically told the AI, "Here's an article and here's the most important information in it." Then they asked it to pull that same type of information from an article without bulleted highlights.
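One way to turn an article's bullet points into training questions, and roughly the approach DeepMind's published work on this data took, is to blank out an entity in a highlight and ask the model to fill it in by reading the article. The sketch below only builds such fill-in-the-blank (cloze) queries; the function name, the `@placeholder` token, and the example highlight are illustrative assumptions, and it skips refinements like anonymizing entity names.

```python
def make_cloze_queries(highlight, entities, placeholder="@placeholder"):
    """Turn one summary bullet into (query, answer) fill-in-the-blank pairs.

    Each entity that appears in the highlight is blanked out once; a model
    must recover the missing entity by reading the full article.
    """
    queries = []
    for entity in entities:
        if entity in highlight:
            queries.append((highlight.replace(entity, placeholder, 1), entity))
    return queries


# Hypothetical highlight and entity list.
highlight = "Ms. Green was treated with ibuprofen at City Hospital"
entities = ["Ms. Green", "ibuprofen", "City Hospital"]

for query, answer in make_cloze_queries(highlight, entities):
    print(f"{query!r} -> {answer!r}")
```

Because the answers come straight from the highlights, this produces large numbers of training questions without any hand annotation.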

This level of complexity can be handled by a deep neural network, a type of machine-learning system built from many stacked layers of artificial neurons. (The DeepMind team is doing some amazing things on this project. To get the specifics, check out this great overview from the MIT Technology Review.)

What Can a Reading AI Do?

We now have a general understanding of how computers learn to read. You take a huge amount of text, tell the computer what's important, and apply some machine-learning algorithms. But what can we do with an AI that pulls information from text?

We already know that you can pull specific actionable information from medical notes and summarize general news articles. There's an open-source program called P.A.N. that analyzes poetry by pulling out themes and imagery. Researchers often use machine learning to analyze large bodies of social media data, and companies use that analysis to understand user sentiment, see what people are talking about, and find useful patterns for marketing.

Researchers have used machine learning to gain insight into emailing behaviors and the effects of email overload. Email providers can use it to filter out spam from your inbox and classify some messages as high-priority. Reading AIs are critical in making effective customer service chatbots. Anywhere there's text, there's a researcher working on natural language processing.
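Spam filtering is a good example of the recipe from the start of this section: label some messages, then let a learning algorithm find the patterns. Below is a minimal naive Bayes classifier, a long-standing technique for spam filtering. The four training messages are made up, and real email providers combine far more data and signals than word counts.

```python
import math
from collections import Counter


class NaiveBayesSpamFilter:
    """Multinomial naive Bayes over lowercase, whitespace-split words."""

    def __init__(self):
        self.word_counts = {"spam": Counter(), "ham": Counter()}
        self.doc_counts = Counter()

    def train(self, text, label):
        """Record one labeled message ("spam" or "ham")."""
        self.doc_counts[label] += 1
        self.word_counts[label].update(text.lower().split())

    def classify(self, text):
        """Return the label with the highest log-probability for this text."""
        vocab_size = len(set(self.word_counts["spam"]) | set(self.word_counts["ham"]))
        total_docs = sum(self.doc_counts.values())
        best_label, best_score = None, -math.inf
        for label, counts in self.word_counts.items():
            if not self.doc_counts[label]:
                continue  # no training messages for this label yet
            # Start from the log prior: how common this label was in training.
            score = math.log(self.doc_counts[label] / total_docs)
            total_words = sum(counts.values())
            for word in text.lower().split():
                # Add-one (Laplace) smoothing keeps unseen words from zeroing the score.
                score += math.log((counts[word] + 1) / (total_words + vocab_size))
            if score > best_score:
                best_label, best_score = label, score
        return best_label


spam_filter = NaiveBayesSpamFilter()
spam_filter.train("win a free prize now", "spam")
spam_filter.train("claim your free money now", "spam")
spam_filter.train("meeting moved to monday", "ham")
spam_filter.train("lunch with the team on friday", "ham")

print(spam_filter.classify("free prize money"))        # spam
print(spam_filter.classify("team meeting on monday"))  # ham
```

Working with log-probabilities instead of multiplying raw probabilities avoids numeric underflow on long messages.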

And as this type of machine learning improves, the possibilities only increase. Computers already outplay humans at chess, Go, and a number of video games. Soon they may be better at reading and learning, too. Is this the first step toward strong AI? We'll have to wait and see, but it may be.

What kinds of uses do you see for a text-reading and learning AI? What sorts of machine learning do you think we'll see in the near future? Share your thoughts in the comments below!

Image Credits: Vasilyev Alexandr/Shutterstock