RAM, or computer memory, is the most common upgrade due to its relatively low cost and the immediate increases in performance it offers. Whether your computer is sluggish, or your favorite games and applications keep crashing, RAM typically offers the best bang for the buck. The problem is, we may be reaching the point of diminishing returns on adding more RAM to our computers.

Fear not, as it appears AMD could have the fix - High Bandwidth Memory (HBM) - and since the hype machine has already started, it's time to dive in and take a look at just what it is, what problem(s) it fixes, and if it's really needed.

Let's get started.

Current Memory Limitations

Building a better computer isn't often about finding mind-blowing technology to shove into a case. Instead, modern parts manufacturers spend most of their time identifying and attempting to solve bottlenecks within the system. Current memory technology is one of these bottlenecks.

There has long been talk of a so-called "memory wall" which would limit future processing breakthroughs. This "wall" was estimated to happen at around 16 CPU cores. On a desktop computer, lower latency is preferred over higher-bandwidth solutions, while GPU devices currently have a "the more, the merrier" approach to bandwidth.

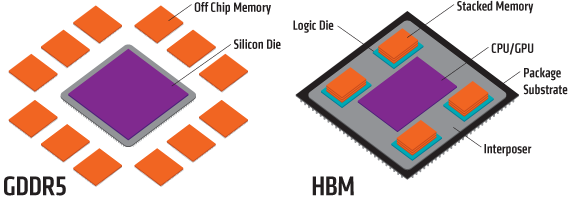

The limitations of GDDR5 (double data rate type five synchronous graphics random access memory) mostly surround the wide bus - which is a sort of communication system that transfers data from component to component. The fatal flaw rests in how the chips connect to the GPU - via contacts around its edge. To add bandwidth to memory we're continually adding more chips, each of which lies flat, side-by-side. It's not an ideal solution, as it results in a sort of sprawl effect which requires longer wires to maintain the GPU connection. Additional power is then required to drive signals along those longer wires.

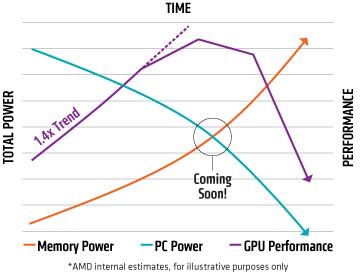

While it's technically possible to squeeze more performance out of existing memory technology, it comes at a cost, and in this case, it's efficiency. The efficiency problem revolves around memory pulling so much power from the GPU that overall performance suffers.

In the past, we'd address this problem by adding more GDDR5 and increasing the power supply. As the world shifts to mobile devices, space is at a premium, and it's becoming more and more difficult to cool these devices, especially with additional power requirements. This has led us to a point of diminishing returns. Any additional GDDR5 added requires longer wires, additional power - and increased cooling capacity - or a drop in GPU performance.

What is HBM?

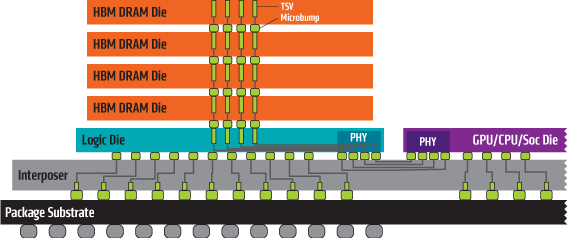

High bandwidth memory (HBM) is a different approach to the technology behind current GDDR5. As opposed to sprawling GDDR5, HBM uses stacked RAM which increases the height of the component, but not the width. This leads to significantly more memory capacity without the need for longer connections. While GDDR5 uses a GPU placed on a silicon die and surrounded by off-chip memory, HBM takes a different approach. HBM uses a GPU placed on an interposer - or, an electrical interface that routes connections between sockets - in addition to four pieces of stacked memory, each residing on top of its own logic die.

The design allows for stacking additional memory on top of each chip, thus reducing the need for longer connections, additional power, performance drops, or heating issues. In fact, HBM is so efficient the design allows for a theoretical stack of four times the chips of current RAM. This, of course, brings significant power savings in addition to faster and more efficient memory.

For example: A current GDDR5 chip supports a 32-bit wide memory bus running at up to 7Gbps per pin, which works out to about 28GB/s per chip.

HBM, on the other hand, supports a 1,024-bit bus and more than 125GB/s per stack. According to AMD Chief Technology Officer Joe Macri, the stacked HBM also increases efficiency. HBM delivers 35GB/s of memory bandwidth per watt of power consumed, while current GDDR5 technology is only about a third as efficient, clocking in at 10.5GB/s per watt of power consumed. For gamers, this also means bigger gains from overclocking.
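If you want to check the math yourself, here is a rough Python sketch of how bus width turns into bandwidth. It reads the 7Gbps GDDR5 figure as a per-pin rate, and it assumes a 1Gbps per-pin rate for first-generation HBM, which is not a number from this article. It also treats the per-watt figures as GB/s per watt. The function names and the 512GB/s target are made up for illustration.

```python
def peak_bandwidth_gb_per_s(bus_width_bits: int, pin_rate_gbps: float) -> float:
    """Peak bandwidth of one memory device, in gigabytes per second."""
    # Every pin on the bus moves pin_rate_gbps gigabits each second;
    # dividing by 8 converts gigabits to gigabytes.
    return bus_width_bits * pin_rate_gbps / 8


def watts_for_bandwidth(target_gb_per_s: float, gb_per_s_per_watt: float) -> float:
    """Power needed to sustain a target bandwidth at a given efficiency."""
    return target_gb_per_s / gb_per_s_per_watt


# One GDDR5 chip: 32-bit bus at 7Gbps per pin (figures from the article).
gddr5_chip = peak_bandwidth_gb_per_s(32, 7.0)

# One HBM stack: 1,024-bit bus at an ASSUMED 1Gbps per pin.
hbm_stack = peak_bandwidth_gb_per_s(1024, 1.0)

# Efficiency figures quoted in the article, read as GB/s per watt.
HBM_GB_PER_S_PER_WATT = 35.0
GDDR5_GB_PER_S_PER_WATT = 10.5
efficiency_ratio = HBM_GB_PER_S_PER_WATT / GDDR5_GB_PER_S_PER_WATT

# Hypothetical target: four HBM stacks' worth of bandwidth.
target = 4 * hbm_stack
hbm_watts = watts_for_bandwidth(target, HBM_GB_PER_S_PER_WATT)
gddr5_watts = watts_for_bandwidth(target, GDDR5_GB_PER_S_PER_WATT)

print(f"GDDR5 chip: {gddr5_chip:.1f} GB/s")
print(f"HBM stack:  {hbm_stack:.1f} GB/s")
print(f"HBM is about {efficiency_ratio:.1f}x as efficient per watt")
print(f"{target:.0f} GB/s costs ~{hbm_watts:.0f} W on HBM, ~{gddr5_watts:.0f} W on GDDR5")
```

The takeaway is that HBM gets its bandwidth from a very wide bus rather than a fast one. Each pin runs far slower than a GDDR5 pin, but there are 32 times as many of them per device.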

Do You Need It?

Probably not, at least for now. Apart from computer gamers building their own extremely high-end rigs, it's not an issue most of us currently need to address. Gamers stand to benefit the most from High Bandwidth Memory as gaming rigs continue to get more and more powerful.

The standard computer relies heavily on both the GPU and RAM in order to run some of the more graphics-intensive games at the highest settings. Freeing up additional space for better-functioning RAM allows game designers that much more freedom to experiment with textures and scale without worrying about users who can't run the game at the highest display settings.

That's not to say non-gamers can't benefit from High Bandwidth Memory. Resource-intensive jobs, such as those requiring video or photo editing, stand to benefit greatly from an increase in RAM efficiency. Since the first generation of HBM won't require a motherboard upgrade, the switch to higher-efficiency memory could make a lot of sense for those who are typically pushing their GPU to the limit.

For the rest of us, it's definitely not something we'll need to run out and get right away. However, that day is coming. High-performance computational tasks are increasingly being offloaded to GPUs in what's known as GPU-accelerated computing. GPU-accelerated computing is also making its way to more and more mobile devices, and as the trend continues it's looking like it'll make less sense in the future to separate CPU and GPU processes. So the future of computing - no matter what your level of computer nerd-dom - will require the additional efficiency afforded by HBM, but most of us aren't there yet.

Are you going to upgrade? Tell us why or why not in the comments below.

Image Credits: glowing square Via Shutterstock