On January 27, Google announced that AlphaGo, an artificial intelligence developed by its subsidiary DeepMind, had defeated European Go champion Fan Hui in a five-game match.

You may have heard the news, since it's making headlines around the world, but why do people care so much about it? What does it all mean? If you aren't familiar with the game of Go or its significance to artificial intelligence, you might be feeling a bit lost.

Don't worry, we have you covered. Here's everything you need to know about the breakthrough and how it affects regular people like you and me.

The Game of Go: Simple Yet Complex

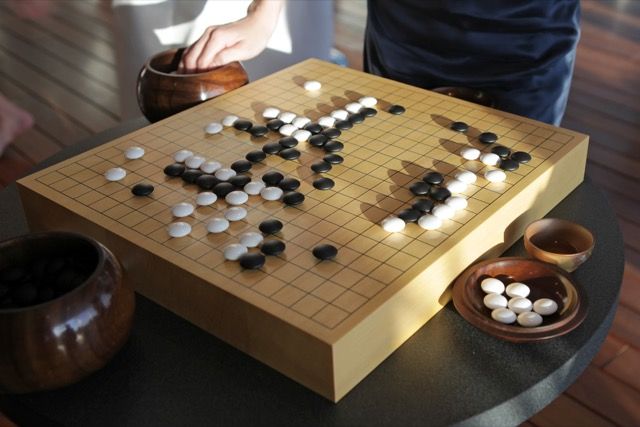

Go is an ancient Chinese strategy game where two players fight to capture territory. Turn by turn, each player -- one white, the other black -- places stones on the intersections of a 19 x 19 grid. When a group of stones is completely surrounded by the other player's stones, it is "captured" and removed from the board.

At the end of the game, each empty spot is "owned" by the player surrounding it. Each player's score is based on how much territory they own (i.e., how much empty space they have surrounded) plus the number of opposing stones they captured during play.
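If you like seeing rules as code, the capture rule can be sketched in a few lines of Python. This is only an illustration, not a full Go engine: the board representation (a dictionary mapping `(row, col)` to `"B"` or `"W"`) and the function names are assumptions made up for this example. The idea is that a group is captured once none of its stones touches an empty intersection.

```python
def group_and_liberties(board, start, size=19):
    """Return the connected group containing `start` and its empty neighbors.

    `board` is a hypothetical representation: {(row, col): "B" or "W"}.
    """
    color = board[start]
    group, liberties = set(), set()
    stack = [start]
    while stack:
        point = stack.pop()
        if point in group:
            continue
        group.add(point)
        row, col = point
        for nb in ((row - 1, col), (row + 1, col), (row, col - 1), (row, col + 1)):
            if not (0 <= nb[0] < size and 0 <= nb[1] < size):
                continue  # off the board: the edge does not count as breathing room
            if nb not in board:
                liberties.add(nb)  # empty neighbor keeps the group alive
            elif board[nb] == color:
                stack.append(nb)  # same-colored neighbor belongs to the group
    return group, liberties


def is_captured(board, start, size=19):
    """A group is captured when it has no empty neighboring intersections."""
    _, liberties = group_and_liberties(board, start, size)
    return not liberties


# One white stone with black stones on all four sides.
board = {(1, 1): "W", (0, 1): "B", (2, 1): "B", (1, 0): "B", (1, 2): "B"}
print(is_captured(board, (1, 1)))  # True
```

Remove any one of the black stones and the white stone has room to breathe again, so `is_captured` returns `False`.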

While most people probably think of Chess as the king of strategy games, Go is actually more complex. According to Wikipedia, there are 10^761 possible games of Go compared to 10^120 estimated possible games of Chess.
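To get a feel for how big that gap is, here is a quick back-of-the-envelope calculation. The two exponents are the figures quoted above; the second function is an extra illustration not taken from the article: since each of the 361 intersections is empty, black, or white, 3^361 is a loose upper bound on board arrangements (many of which are not legal positions). The function names are made up for this sketch.

```python
GO_GAMES_EXPONENT = 761     # possible games of Go: 10^761 (figure quoted above)
CHESS_GAMES_EXPONENT = 120  # possible games of Chess: 10^120 (figure quoted above)


def orders_of_magnitude_gap(go_exp, chess_exp):
    """Dividing powers of ten subtracts their exponents."""
    return go_exp - chess_exp


def board_arrangements_upper_bound(size=19):
    """Each intersection is empty, black, or white: 3 choices per point."""
    return 3 ** (size * size)


gap = orders_of_magnitude_gap(GO_GAMES_EXPONENT, CHESS_GAMES_EXPONENT)
print(f"Go has roughly 10^{gap} times as many possible games as Chess")

bound = board_arrangements_upper_bound()
print(f"A 19 x 19 board has at most a {len(str(bound))}-digit number of arrangements")
```

In other words, the difference is not "a few times bigger" but hundreds of orders of magnitude.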

This complexity, along with some esoteric rules and an emphasis on playing by instinct, makes Go an especially difficult game for computers to learn and play at a high level.

The Incredible World of Game-Playing AIs

In the grand scheme of things, designing an artificial intelligence that plays a game doesn't seem like a very worthwhile pursuit, especially when IBM's Watson AI is already working to help improve healthcare, an area that needs all the help it can get. So why did Google spend so many hours and dollars to create a Go-playing AI?

On one level, it helps AI researchers figure out the best way to teach computers to do things. If you can teach a computer to find the best moves in a game of Checkers or Tic-Tac-Toe, you could gain insight into teaching a different computer how to recommend movies on Netflix, instantly translate speech, or predict earthquakes.

Many of the uses for AI that we've seen so far would benefit from improved problem-solving and pattern-extracting abilities, which also happen to be important for effective game-playing AIs.

Deep Blue, the Chess champion AI, worked by using a huge amount of computational power and brute force techniques to evaluate all possible next moves -- up to 200,000,000 positions per second. And while this strategy was effective enough to beat a former World Chess Champion, it's not a particularly "human-like" way to play chess. It also requires programmers to "explain" the rules of the game to the AI.
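To make the brute force idea concrete, here is a minimal sketch on a much smaller game than Chess. It is not Deep Blue's actual algorithm, which this article doesn't describe in detail. The toy game is an assumption chosen for brevity: players alternate taking 1, 2, or 3 stones from a pile, and whoever takes the last stone wins. Notice that the programmer has to spell out the rules (the legal moves and the winning condition) by hand.

```python
from functools import lru_cache

MOVES = (1, 2, 3)  # rules of the toy game, written in by the programmer


@lru_cache(maxsize=None)
def can_win(stones):
    """True if the player about to move can force a win.

    Every legal move is tried. A move is winning if it leaves the
    opponent in a position from which they cannot force a win.
    With 0 stones left there are no moves, so the player to move has lost.
    """
    return any(not can_win(stones - take) for take in MOVES if take <= stones)


def best_move(stones):
    """Return a winning move found by exhaustive search, or None if there is none."""
    for take in MOVES:
        if take <= stones and not can_win(stones - take):
            return take
    return None


print(best_move(5))  # 1
print(best_move(4))  # None
```

This works because the toy game is tiny. The same "try everything" approach gets expensive very quickly as the number of possible moves and the length of the game grow.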

More recently, a technique called deep learning was developed. It essentially paved the way for computers to teach themselves, and that completely changed the race for artificial intelligence.

With deep learning, a computer can extract useful patterns from data -- instead of being told by programmers which patterns it should look for -- and use those patterns to optimize its own decisions. If deep learning is successful, an AI can even discover patterns that are more effective than what we can recognize as humans.

This type of learning was demonstrated last year, when Google-owned AI research firm DeepMind revealed an AI that taught itself to play 49 different Atari games after being given only raw input. (You can see it learning to play Breakout above.)

The process is the same as learning a video game without a tutorial or explanation. You watch for a while, then try pushing random buttons, then start to figure things out, develop strategies, and eventually go on to excel.

And excel it did. The DeepMind AI absolutely destroyed professional-level human opponents in some of those games, like Video Pinball. It fared significantly worse in other games, including Ms. Pac-Man, but had a very impressive record overall.

AlphaGo: The Next Level of AI

AlphaGo, the computer that defeated Fan Hui at Go, used this deep learning strategy to win all five games of the match.

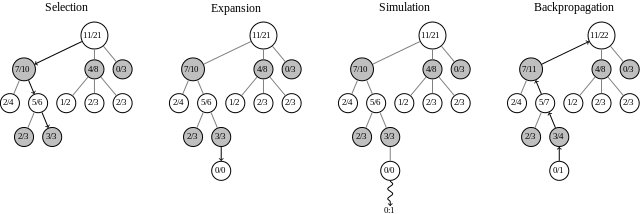

Instead of using brute force computation like Deep Blue, AlphaGo determined its next move by using what it had learned in training to limit the scope of potentially effective moves, then running simulations to see which moves were most likely to result in positive outcomes.

Two different neural networks, the policy network and the value network, worked together to evaluate moves and choose the best one each turn.
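The "narrow the options, then simulate" idea can be sketched on a toy game. To be clear, this is not AlphaGo's code and the details are assumptions: the game is a simple pile game (take 1, 2, or 3 stones; taking the last stone wins), `toy_policy` is a hand-written stand-in for a trained policy network, and random playouts stand in for the evaluation step. The value network is not modeled here at all.

```python
import random

MOVES = (1, 2, 3)


def legal_moves(stones):
    return [m for m in MOVES if m <= stones]


def toy_policy(stones):
    """Hypothetical stand-in for a policy network: a prior probability per move.

    A real policy network learns these numbers from data. Here they are
    hand-written, favoring moves that leave a multiple of four stones.
    """
    scores = {m: (3.0 if (stones - m) % 4 == 0 else 1.0) for m in legal_moves(stones)}
    total = sum(scores.values())
    return {m: s / total for m, s in scores.items()}


def rollout_wins(stones, rng):
    """We have just moved, leaving `stones`. Play random moves to the end.

    Returns True if we are the one who takes the last stone.
    """
    we_moved_last = True
    while stones > 0:
        stones -= rng.choice(legal_moves(stones))
        we_moved_last = not we_moved_last
    return we_moved_last


def choose_move(stones, top_k=2, rollouts=500, seed=0):
    """Keep the policy's top_k moves, then pick the one with the best simulated win rate."""
    rng = random.Random(seed)
    priors = toy_policy(stones)
    candidates = sorted(priors, key=priors.get, reverse=True)[:top_k]

    def win_rate(move):
        wins = sum(rollout_wins(stones - move, rng) for _ in range(rollouts))
        return wins / rollouts

    return max(candidates, key=win_rate)


print(choose_move(3))
```

The design point is that simulations are only run for the handful of moves the policy considers promising, rather than for every legal move. On a 19 x 19 Go board, that kind of pruning is what keeps the search manageable.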

Because of the complexity of Go, a brute force approach over all possible moves just isn't feasible the way it is in Chess. So AlphaGo drew on the knowledge it gained during the training phase, which consisted of watching 30 million moves made by human experts, learning to predict their moves, coming up with its own strategies, and playing against itself thousands of times.

Using reinforcement learning, its decision-making processes were developed and strengthened until AlphaGo became the best Go-playing AI in the world. In 500 games against the most advanced Go programs, it won 499 of them -- even after giving those programs a four-move head start.

And, of course, AlphaGo beat Fan Hui, the current European Go champion. The victory was actually achieved in October of 2015, but the announcement was delayed to coincide with the release of DeepMind's research paper in Nature. In March, AlphaGo will be taking on Lee Sedol, the most dominant player in the world over the past ten years.

Okay, So What Does It All Mean?

Why is this making headlines around the world? For several reasons, actually.

First, many people thought this was impossible with current technology. Most estimates said that an AI wouldn't beat a world-class Go player for at least another ten years. AlphaGo's value network can evaluate a Go game in progress and predict the eventual winner, a problem that Google says is "so hard it was believed to be impossible."

Second, the fact that deep and independent learning was used is very important. This shows that a current artificial intelligence can gather data, extract patterns, learn to predict such patterns, and eventually develop problem-solving strategies that are complex and effective enough to beat a world-class human.

And while winning at Go isn't going to change the world, the fact that a computer was able to come up with that level of strategy using its own learning algorithms is very impressive.

It's this deep learning that has AI researchers really excited about AlphaGo. Many believe that independent learning is the first step towards making a strong artificial intelligence. A strong AI refers to a computer that can solve intellectual tasks on par with humans (which is incredibly difficult, largely due to the complexity and efficiency of the human brain). This is the sort of AI you see in many science fiction movies.

It's for this reason that creating AIs that can behave in human-like ways is such a big deal. Extracting patterns and developing strategies is something that we do all the time, and we don't use brute force methods when making decisions.

It's very difficult to get a computer to do that without a lot of guidance, but thanks to AlphaGo, we now know that strong AI isn't just possible, but nearer than we thought.

Of course, a Go-playing AI is still a long way away from a generally intelligent AI. It only does one thing, which is about as simple as an artificial intelligence can get -- even the Atari-playing AI was able to play 49 different games -- but AlphaGo's effective independent learning could be the first step toward a major paradigm shift in AI.

What Do You Think?

There's no question that AlphaGo's victory over Fan Hui is important, but whether or not it's worthy of worldwide headlines is up for debate.

Do you think this is a big deal? Are we one step closer to the robot apocalypse? Or are you not impressed with an AI that can just play a game? Share your thoughts below and let's talk about it.

Image Credits: go game by vvoe via Shutterstock, Tatiana Belova via Shutterstock.com, Mciura via Wikimedia Commons, Zerbor via Shutterstock.com