It sounds like something from George Orwell's 1984: a man sends a private e-mail and finds himself arrested for it. The e-mail wasn't intercepted by an investigating police officer; the man wasn't even under suspicion before his arrest. Instead, the e-mail was analyzed by an automated system that few people know about, which brought it to the attention of the authorities.

Does this sound like a world you want to live in? That world is already here—and that system was used to catch a guy sending child pornography.

Caught Red-Handed

So how did it happen? John Skillern was sending indecent photos of children to a friend, and soon found himself under arrest. What he didn't know was that Google automatically scans images sent via Gmail and compares them against a database of previously identified child pornography; if a match is found, the authorities are notified. In this case, police then obtained a warrant to search his computer and tablet, where they found more pornographic images of children.

US law requires companies that discover evidence of child pornography on their services to report it to the authorities promptly. This has traditionally applied to photo developers, photo hosting services, and other photography-related businesses, but it also covers online service providers such as e-mail and search companies. Of course, this is good: the sexual exploitation of children is a heinous crime, and we should be throwing every resource we have at it. Companies are happy to comply with these laws, and in general, people are happy for them to do so.

But the existence of this technology has some people worried.

How Does It Work?

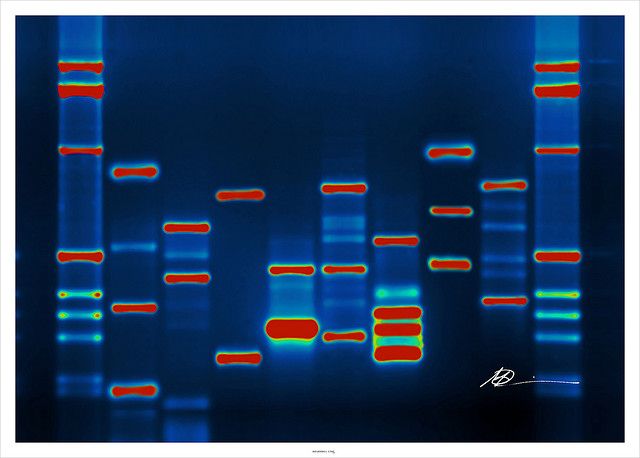

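The technology used for Gmail's child-porn scanning is a piece of software created by Microsoft called PhotoDNA. When an image is added to the PhotoDNA database, the software computes a mathematical hash of it: a compact signature that serves as an identifier for that image. Images passing through a service are hashed the same way and compared against the database. Unlike an ordinary cryptographic hash, which changes completely if even a single pixel changes, PhotoDNA's hash is designed to stay nearly the same when a photo is resized, recompressed, or otherwise slightly altered, so it can recognize known photos even after minor edits.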

Microsoft developed PhotoDNA in conjunction with the National Center for Missing and Exploited Children (NCMEC), so it's been destined for fighting child porn since the very beginning. And, obviously, it's working. It caught Skillern, and it's in use by Bing, OneDrive, Facebook, Twitter, and Gmail, and possibly other services as well.

Unfortunately, PhotoDNA can only identify photos that have already been added to the database, which means the trading of new images won't trigger an alert. But with the amount of illicit material that's already out there, it can be extremely useful in identifying potential child pornographers.
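PhotoDNA's exact algorithm is proprietary, so the sketch below does not reproduce it. It only illustrates the general idea of matching images by a robust ("perceptual") hash, using a much simpler, well-known technique called an average hash: each pixel of a small grayscale image becomes one bit, set if that pixel is brighter than the image's average, and two images count as a match if their hashes differ in only a few bits. The function names, the tiny 4x4 test image, and the 2-bit match threshold are illustrative assumptions, not anything Microsoft or Google actually uses.

```python
# Illustrative sketch of perceptual-hash matching.
# NOT PhotoDNA (whose algorithm is proprietary); this is a simple "average hash".
# All names and the threshold below are hypothetical.

def average_hash(pixels):
    """Hash a small grayscale image given as rows of 0-255 ints.

    Each bit is 1 if that pixel is brighter than the image's mean.
    A real system would first shrink every image to a fixed size.
    """
    flat = [p for row in pixels for p in row]
    mean = sum(flat) / len(flat)
    bits = 0
    for p in flat:
        bits = (bits << 1) | (1 if p > mean else 0)
    return bits

def hamming(a, b):
    """Count the bits that differ between two hashes."""
    return bin(a ^ b).count("1")

def find_match(image_hash, known_hashes, threshold=2):
    """Return the first known hash within `threshold` bits, else None."""
    for known in known_hashes:
        if hamming(image_hash, known) <= threshold:
            return known
    return None

original = [
    [10, 200, 30, 220],
    [15, 210, 25, 230],
    [12, 205, 35, 215],
    [18, 199, 28, 225],
]
# A uniformly brightened copy and a copy with one pixel edited.
brighter = [[p + 20 for p in row] for row in original]
edited = [row[:] for row in original]
edited[0][0] = 150

database = [average_hash(original)]
print(find_match(average_hash(brighter), database) is not None)  # True
print(find_match(average_hash(edited), database) is not None)    # True
```

Both altered copies still match because brightening every pixel shifts the average too, and editing one pixel flips only one bit. As the article notes, though, the lookup can only succeed for images whose hashes are already in the database; a never-before-seen image simply returns no match.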

Was It a Secret?

Since the announcement of Skillern's arrest, there's been a lot of discussion about whether or not the deployment of PhotoDNA by Gmail is a privacy concern. Gmail serves ads to users and scans the contents of personal e-mails to determine which ads to show. Google maintains that this scanning is done anonymously, and that student, business, and government accounts aren't scanned. Recent privacy and legal concerns have led Google to back off their scanning a bit, but the revelation of the use of PhotoDNA has many people questioning whether or not we're getting the whole story.

While Google never denied that they were scanning Gmail messages for child porn, they were very tight-lipped about it. Even though people are sure to support anti-child-pornography measures, the idea that Google is scanning the images in their e-mails might not have gone over so well. But the news is out now, and we have to ask ourselves whether Google is being forthcoming about what they're doing with our e-mails. We know that e-mail is inherently insecure, but finding evidence of surveillance by Google is unnerving.

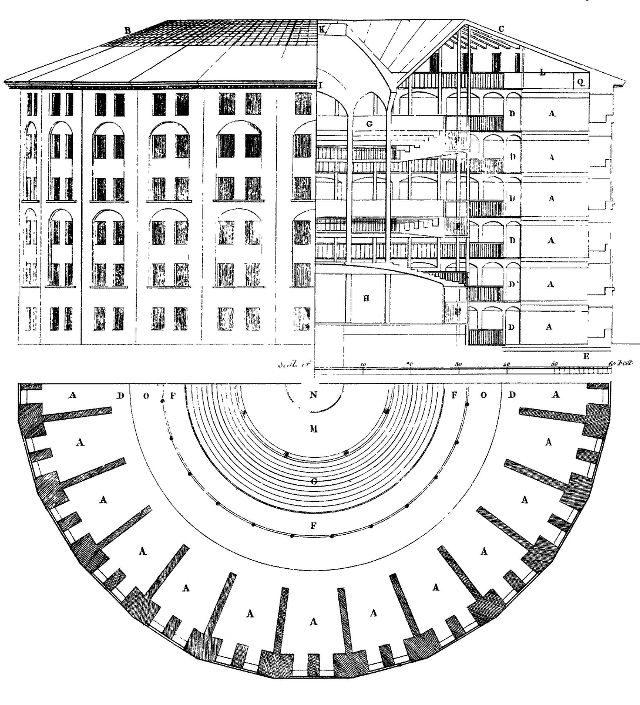

The Gmail Panopticon

In the late 18th century, Jeremy Bentham designed a building called a panopticon, in which all of its occupants (students, children, or, most applicably, prisoners) could be monitored by a single watchperson. Because the inmates can't tell whether they are being watched at any given moment, they must assume they're always under surveillance, which encourages them to moderate their behavior so as not to draw attention to themselves.

Is that what Gmail is leading us toward? Right now, they're scanning images for child pornography. But, as some journalists have noted, Google is bound by the laws of the countries in which it operates, which means that governments could require that they turn over other sorts of information that's found in their scans. This might sound like a stretch, but by accepting the terms and conditions of Gmail, we've given Google a lot of power to do what they want with our data.

And is it so hard to imagine this technology being used to pursue perpetrators of other crimes? As it stands, Google maintains that someone could blatantly plan another type of crime via Gmail without being at any risk from this scanning. But how long will that be the case? Google is now cooperating with law enforcement by scanning our private messages, and now that this technology has caught a criminal in full public view, that partnership is firmly established.

What will Google scan for next? Terrorist threats? Murder plots? Shoplifting? Opinions that differ from those supported by the political majority? Indications of intent to stop using Google services? We could see a big turning point in the near future driven by increasing demands from governments. And not just ours—what will more repressive governments ask Google to turn over? E-mails containing evidence of homosexuality? Political dissent? Humanitarian missions?

We just don't know. And it's tough to say how Google will respond.

And it's going to be hard for Google to say no after they've shown that their scanning can catch child pornographers. Who's going to ask them to stop using this technology, knowing they'll inevitably be asked, "Do you support child pornography, then?" in response? There might be no going back from this: public opinion is going to be tough to sway against a system that catches some of the most reviled criminals in the world.

Is This the Beginning of the End of Privacy?

If there's a single company that's synonymous with the Internet, it's Google. And if they're scanning our e-mails, online privacy could be coming to an end (at least in the major channels; there will always be other options). If you read my piece on the Don't Spy on Us Day of Action, you'll know that I'm afraid privacy is facing some serious attacks from a number of directions, both corporate and governmental. And this cooperation between the two could spell trouble for online privacy and security.

While I don't think we're seeing a calculated move by Google to improve public opinion of their scanning, I think they'll do their best to make this a positive thing. They might not have been hoping for this scanning to be brought to the attention of the public, but there's ample opportunity here to show the world what they can do for us in exchange for some of our privacy.

The next step that Google takes in response to this story will be very telling, and I'm looking forward to seeing what they say about it. What do you think? Is Google overstepping their bounds by scanning every image that we send? Or are you happy to sacrifice some of your privacy to fight child pornography? Would you feel the same way if Google started scanning for other crimes, or if governments started demanding that Google turn over certain e-mails? There's a lot of room for controversy and discussion here, so chime in below and let us know what you think!

Image credits: All seeing eye. Doodle style (edited) via Shutterstock, Man in black via Shutterstock, Micah Baldwin via flickr, DieBuche via Wikimedia Commons, Robert Scoble via flickr, Ben Roberts via flickr.