Facebook is ramping up its efforts to help people having suicidal thoughts, and the social network is using artificial intelligence to identify people at risk. While this does carry some privacy implications, it could help save countless lives every year, which has to be a good thing.

Depression and other forms of mental illness can be debilitating, and not everyone has the kind of support network needed to get through trying times. However, even if someone doesn't feel able to reach out to a friend in person, they may still post a cry for help on Facebook. That is why the social network is trying to help people expressing thoughts of suicide on its platform.

Facebook Helps People Expressing Thoughts of Suicide

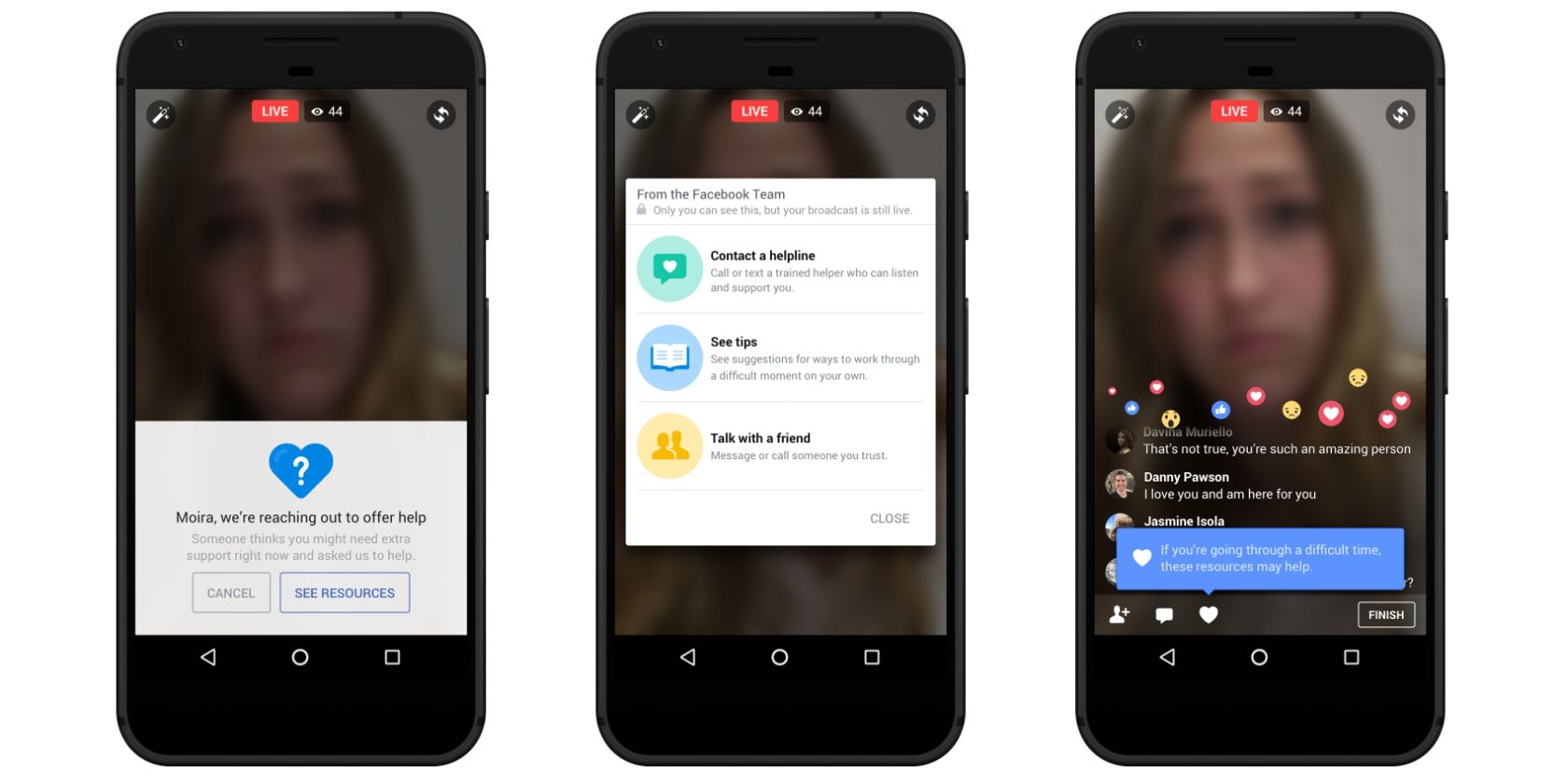

Facebook has detailed the steps it's taking to get help for people who need it. These involve using artificial intelligence to "detect posts or live videos where someone might be expressing thoughts of suicide," identifying appropriate first responders, and employing more people to "review reports of suicide or self harm."

The social network has been testing this system in the U.S. for the last month, and says it has "worked with first responders on over 100 wellness checks based on reports we received via our proactive detection efforts." In some cases, local authorities were notified so they could help.

Now, Facebook is expanding its use of AI, which it calls "pattern recognition technology," and the system will eventually be available worldwide, except in the EU. This is because the EU's General Data Protection Regulation restricts companies from using sensitive information to profile their users.

Unfortunately, for all of the initiative's good intentions, there is an unavoidable downside: it means Facebook is monitoring what you say on its platform. And while monitoring for suicidal thoughts clearly has the potential to do good, it's also an invasion of privacy, which is why Facebook cannot roll this out in the EU, where privacy laws are stronger.

Facebook Fights the Good Fight Against Depression

Depression is more common than you might think, and there's evidence to suggest technology might be making things worse. However, the internet can also act as a temporary support group, with a number of resources designed to help. Facebook is just employing another tool in this fight.

What do you think of Facebook's new efforts to help prevent suicides? Is it Facebook's duty to put such safeguards in place? Do you think it's born out of genuine concern for its users? Or is it just another way of invading people's privacy? Please let us know in the comments below!