Almost every email or messaging platform has a spam filter. The filter examines each message as it arrives and classifies it as either spam or ham (legitimate mail). Messages classified as ham appear in your inbox, while the filter rejects messages classified as spam or displays them separately.

You can create your own spam filter using NLTK, regex, and scikit-learn as the main libraries. You will also need a dataset to train your model.

Understanding Your Dataset

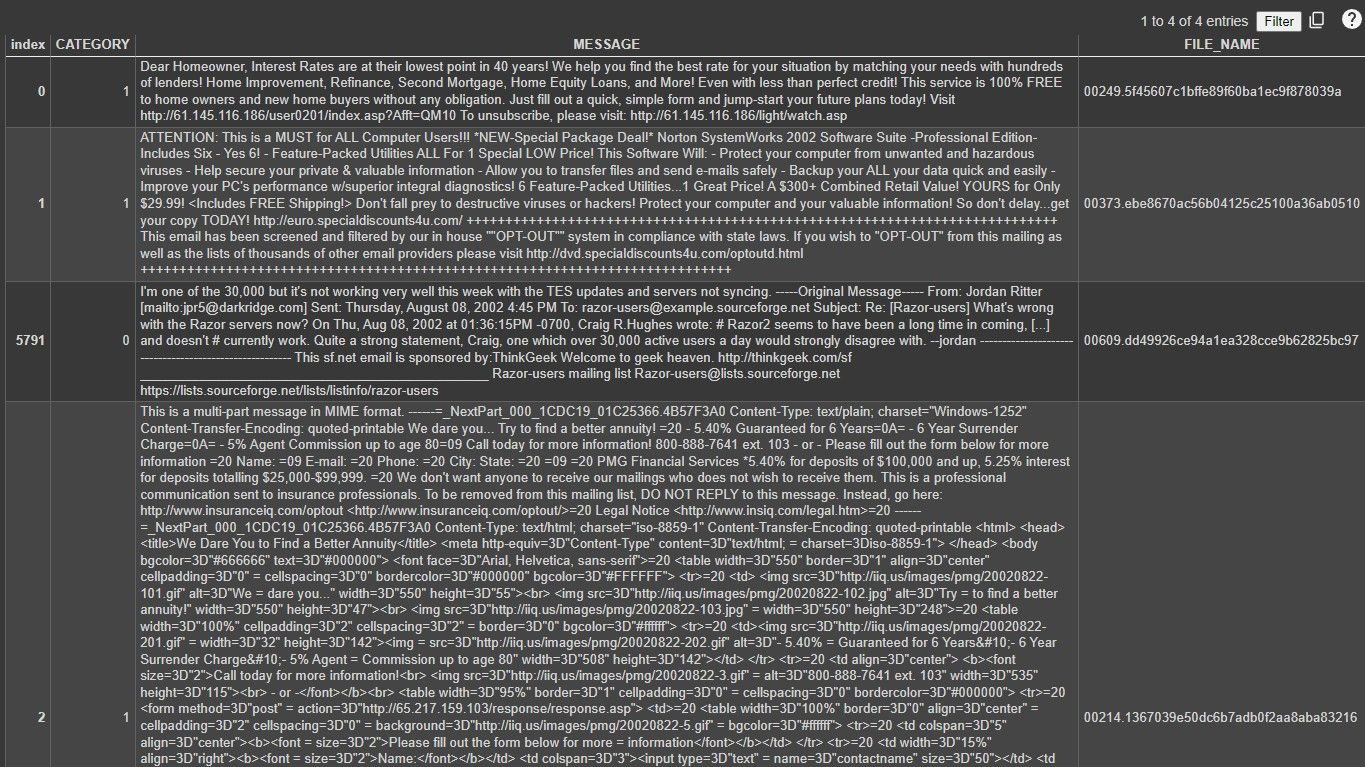

“Spam Classification for Basic NLP” is a freely available Kaggle dataset. It contains a mixture of spam and ham raw mail messages. It has 5,796 rows and 3 columns.

The CATEGORY column indicates whether a message is spam or ham: 1 represents spam and 0 represents ham. The MESSAGE column contains the raw email text, and the FILE_NAME column is a unique message identifier.

Preparing Your Environment

To follow along, you’ll need to have a basic understanding of Python and machine learning. You should also be comfortable working with Google Colab or Jupyter Notebook.

For Jupyter Notebook, navigate to the folder in which you want the project to reside, create a new virtual environment, and launch Jupyter Notebook from that folder. Google Colab does not need this step. Then create a new notebook in either Google Colab or Jupyter Notebook.

The full source code and the dataset are available in a GitHub repository.

Run the following magic command to install the required libraries.

```
!pip install nltk scikit-learn regex numpy pandas
```

You will use:

- NLTK for natural language processing (NLP).

- scikit-learn to create the machine learning model.

- regex for working with regular expressions.

- NumPy for working with arrays.

- Pandas to manipulate your dataset.

Import Libraries

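Import the libraries you installed in your environment. The code uses Python's built-in re module for regular expressions, and it imports individual classes and functions from scikit-learn (sklearn) rather than the whole package.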

```python
import pandas as pd
import numpy as np
import nltk
from nltk.stem import WordNetLemmatizer
from nltk.corpus import stopwords
import re
from sklearn.model_selection import train_test_split
from sklearn.metrics import classification_report
from sklearn.feature_extraction.text import CountVectorizer
from sklearn.feature_extraction.text import TfidfVectorizer
```

You will use the WordNetLemmatizer class and the stopwords corpus from NLTK to preprocess the raw messages in the dataset, and the imported scikit-learn classes and functions to build and evaluate the model.

Preprocessing the Data

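Call the pandas read_csv function to load the dataset, then display the first five rows to inspect its structure. The path below assumes Google Colab, where uploaded files land in /content/ by default. If you use Jupyter Notebook, store the dataset in your project directory and adjust the path accordingly.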

```python
df = pd.read_csv('/content/Spam Email raw text for NLP.csv')
df.head()
```

Drop the FILE_NAME column of the dataset. It is not a useful feature for spam classification.

```python
df.drop('FILE_NAME', axis=1, inplace=True)
```

Check the number of ham and spam messages in the dataset. Knowing the class balance helps you decide how to split the data for model training and testing.

```python
df.CATEGORY.value_counts()
```
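To see the class balance as proportions rather than raw counts, pandas' value_counts accepts normalize=True. The sketch below uses a small made-up DataFrame (toy) in place of the Kaggle data; on the real dataset the equivalent call would be df.CATEGORY.value_counts(normalize=True).

```python
import pandas as pd

# Hypothetical stand-in for the real dataset: 1 = spam, 0 = ham
toy = pd.DataFrame({"CATEGORY": [0, 0, 0, 1, 0, 1, 0, 0]})

# Absolute number of messages per class
counts = toy["CATEGORY"].value_counts()

# Share of each class; normalize=True divides each count by the total
shares = toy["CATEGORY"].value_counts(normalize=True)

print(counts.to_dict())
print(shares.to_dict())
```

A noticeably uneven split between 0 and 1 is a signal to preserve that ratio when you divide the data into training and test sets.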

Download the stopwords corpus from NLTK. Stopwords are commonly occurring words, such as “the” and “is”, that carry little meaning on their own, so preprocessing removes them from the messages. Load the English stopwords and store them in a variable named stopword.

```python
nltk.download('stopwords')
stopword = nltk.corpus.stopwords.words('english')
```

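Download the Open Multilingual WordNet (omw-1.4). It links WordNet, a lexical database of English words and their meanings, to wordnets in other languages, and some NLTK versions require it before the WordNet lemmatizer will load.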

```python
nltk.download('omw-1.4')
```

Download the WordNet corpus, which the lemmatizer relies on, and instantiate a WordNetLemmatizer object to use during lemmatization. Lemmatization is an NLP technique that reduces inflected forms of a word to its dictionary form, called a lemma.

For example, lemmatizing “cats” gives “cat”.

```python
nltk.download('wordnet')
lemmatizer = WordNetLemmatizer()
```

Create an empty list that you will use to store the preprocessed messages.

```python
corpus = []
```

Create a for loop to process every message in the MESSAGE column of the dataset. Remove all non-alphanumeric characters, convert the message to lowercase, and split the text into words. Remove the stopwords, lemmatize the remaining words, and join them back into a single string. Finally, append the preprocessed message to the corpus list.

```python
# Build the stopword set once; rebuilding it for every word slows the loop down
stop_set = set(stopword)

for i in range(len(df)):
    # Replace all non-alphanumeric characters with spaces
    message = re.sub('[^a-zA-Z0-9]', ' ', df['MESSAGE'][i])
    # Convert the message to lowercase
    message = message.lower()
    # Split the message into words for lemmatization
    message = message.split()
    # Remove stopwords and lemmatize the remaining words
    message = [lemmatizer.lemmatize(word) for word in message
               if word not in stop_set]
    # Join the words back into a single string
    message = ' '.join(message)
    # Add the preprocessed message to the corpus list
    corpus.append(message)
```

Depending on your machine, this loop may take a few minutes to run, because every word of every message goes through stopword filtering and lemmatization, the two steps that take most of the time. You have now preprocessed your data.
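The same steps can be packaged as a function that cleans one message at a time, which is handy when you later want to preprocess your own messages the same way as the training corpus. This is a sketch: the name clean_message is hypothetical, and it takes the stopword set and the lemmatizing function as parameters so it runs without downloading NLTK data. The default lemmatize argument leaves words unchanged.

```python
import re

def clean_message(text, stop_words, lemmatize=lambda word: word):
    """Apply the loop's preprocessing steps to a single message (hypothetical helper).

    stop_words: a set of words to drop, e.g. set(stopword) from the NLTK list.
    lemmatize: callable applied to each kept word, e.g. lemmatizer.lemmatize.
               The default returns words unchanged, so the sketch needs no NLTK data.
    """
    # Replace every non-alphanumeric character with a space
    text = re.sub('[^a-zA-Z0-9]', ' ', text)
    # Lowercase and split on whitespace
    words = text.lower().split()
    # Drop stopwords first, then lemmatize the words that remain
    kept = [lemmatize(word) for word in words if word not in stop_words]
    # Join the words back into a single string
    return ' '.join(kept)

sample = "Hello!! You WON $10000 now"
print(clean_message(sample, stop_words={"you", "now"}))
```

With the NLTK objects created earlier, calling clean_message(text, set(stopword), lemmatizer.lemmatize) should reproduce one iteration of the loop.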

Feature Engineering Using the Bag-of-Words Model vs TF-IDF Technique

Feature engineering is the process of converting raw data features into new features suited for machine learning models.

Bag-of-Words Model

The bag-of-words model represents each document as a frequency distribution of the words it contains. In other words, it counts how many times each vocabulary word occurs in a document and ignores word order.
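To make the idea concrete, here is a minimal bag-of-words sketch in plain Python on two made-up messages. It builds a sorted vocabulary from all documents and turns each document into a vector of word counts over that vocabulary. It is an illustration of the concept, not how CountVectorizer is implemented internally.

```python
from collections import Counter

docs = ["win cash win", "call me later"]

# Vocabulary: every distinct word across the documents, in sorted order
vocab = sorted({word for doc in docs for word in doc.split()})

# Each document becomes a vector of word counts over that vocabulary
vectors = []
for doc in docs:
    counts = Counter(doc.split())
    vectors.append([counts[word] for word in vocab])

print(vocab)
print(vectors)
```

Because “win” appears twice in the first message, its position in that vector holds 2, and words absent from a message contribute 0.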

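Use the CountVectorizer class from scikit-learn to convert the text data into numerical vectors. Fitting the vectorizer on the corpus of preprocessed messages builds the vocabulary, and transforming the corpus produces a sparse document-term matrix, which .toarray() converts into a dense NumPy array.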

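```python
# Keep the 2,500 most frequent terms, counting single words,
# two-word phrases, and three-word phrases (n-grams)
cv = CountVectorizer(max_features=2500, ngram_range=(1, 3))
# Learn the vocabulary and build the document-term matrix
X = cv.fit_transform(corpus).toarray()
# Target labels: 1 = spam, 0 = ham
y = df['CATEGORY']
```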

Split the transformed data into training and test sets. Use twenty percent of the data for testing and eighty percent for training.

```python
x_train, x_test, y_train, y_test = train_test_split(
    X, y, test_size=0.20, random_state=1, stratify=y)
```
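The stratify=y argument makes train_test_split preserve the ratio of spam to ham in both splits, which matters when one class is less common. A small sketch with hypothetical labels (16 ham, 4 spam) and placeholder features shows the effect: the 20% test split receives exactly one of the four spam messages.

```python
from sklearn.model_selection import train_test_split

# Hypothetical labels: 16 ham (0) and 4 spam (1), i.e. 20% spam
labels = [0] * 16 + [1] * 4
features = list(range(len(labels)))  # placeholder features, one per label

_, _, lab_train, lab_test = train_test_split(
    features, labels, test_size=0.20, random_state=1, stratify=labels)

# stratify keeps the spam share of each split close to the overall 20%
print(sum(lab_train) / len(lab_train), sum(lab_test) / len(lab_test))
```

Without stratify, a random split of a small or imbalanced dataset can leave the test set with too few spam messages to evaluate the model reliably.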

Bag-of-words features can work well for classifying the messages in this dataset. However, raw counts weight every occurrence of a word equally, so frequent but uninformative words can dominate the features, which may make the model generalize less well to your own messages. If you only need to classify the messages in the dataset, this technique is sufficient.

TF-IDF Technique

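Term Frequency-Inverse Document Frequency (TF-IDF) weights each word by how often it appears in a document (term frequency), scaled down by how many documents in the corpus contain it (inverse document frequency). Words that appear frequently in one message but rarely across the corpus receive the highest weights, while words that appear in almost every message receive low weights. This helps machine learning algorithms focus on the words that best distinguish one message from another.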
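The weighting can be computed by hand. The sketch below assumes scikit-learn's default TfidfVectorizer settings (raw counts as term frequency, smooth_idf=True, L2 row normalization) and uses simple whitespace tokenization instead of the vectorizer's token pattern. The tfidf helper and the three toy messages are hypothetical. Here “now” appears in all three documents, so its idf is ln(4/4) + 1 = 1, while “win” appears in only one, giving ln(4/2) + 1 ≈ 1.69.

```python
import math
from collections import Counter

def tfidf(docs):
    """Smoothed TF-IDF with L2-normalized rows (mirrors TfidfVectorizer defaults)."""
    tokenized = [doc.split() for doc in docs]
    vocab = sorted({word for tokens in tokenized for word in tokens})
    n_docs = len(docs)
    # Document frequency: how many documents contain each word
    doc_freq = {w: sum(1 for tokens in tokenized if w in tokens) for w in vocab}
    # Smoothed inverse document frequency: ln((1 + n) / (1 + df)) + 1
    idf = {w: math.log((1 + n_docs) / (1 + doc_freq[w])) + 1 for w in vocab}
    rows = []
    for tokens in tokenized:
        counts = Counter(tokens)
        # Term frequency (raw count) times inverse document frequency
        row = [counts[w] * idf[w] for w in vocab]
        # Scale the row to unit length (L2 normalization)
        norm = math.sqrt(sum(v * v for v in row)) or 1.0
        rows.append([v / norm for v in row])
    return vocab, rows

docs = ["win cash now", "call me now", "meeting now"]
vocab, rows = tfidf(docs)
for word, weight in zip(vocab, rows[0]):
    print(f"{word:8s} {weight:.3f}")
```

In the first message, “win” and “cash” end up with higher weights than “now”, even though each occurs once, because “now” appears in every document.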

```python
tf = TfidfVectorizer(ngram_range=(1, 3), max_features=2500)
X = tf.fit_transform(corpus).toarray()
x_train, x_test, y_train, y_test = train_test_split(
    X, y, test_size=0.20, random_state=1, stratify=y)
```

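Use TF-IDF if you want to classify your own messages. Like bag-of-words, it does not capture word order or meaning beyond the n-grams it counts, but its weighting emphasizes the words that distinguish one message from the others. This cell overwrites X and the train/test split, so the model in the next section is trained on TF-IDF features, which matches the tf vectorizer used later to classify new messages.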

Creating and Training Your Model

Start by creating a multinomial Naive Bayes model with scikit-learn's MultinomialNB class, which lives in the sklearn.naive_bayes module.

```python
from sklearn.naive_bayes import MultinomialNB

model = MultinomialNB()
```

Fit the model on the training set:

```python
model.fit(x_train, y_train)
```

Then make predictions on the training and testing sets using the predict method.

```python
train_pred = model.predict(x_train)
test_pred = model.predict(x_test)
```

These predictions will help you evaluate your model.
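If you prefer not to manage the vectorizer and the model separately, scikit-learn's Pipeline (not used in the original code) can bundle them so that fitting and predicting always go through the same fitted vectorizer. The sketch below trains on six made-up messages; the names spam_model, train_texts, and train_labels are hypothetical.

```python
from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.naive_bayes import MultinomialNB
from sklearn.pipeline import make_pipeline

# Tiny hypothetical training set: 1 = spam, 0 = ham
train_texts = [
    "win cash prize now", "claim your free prize", "free cash win",
    "meeting at noon tomorrow", "see you at lunch", "project meeting notes",
]
train_labels = [1, 1, 1, 0, 0, 0]

# fit() learns the TF-IDF vocabulary and trains Naive Bayes in one call;
# predict() reuses the fitted vectorizer before classifying
spam_model = make_pipeline(TfidfVectorizer(), MultinomialNB())
spam_model.fit(train_texts, train_labels)

print(spam_model.predict(["free cash prize", "lunch meeting tomorrow"]))
```

A pipeline accepts raw strings at prediction time, which removes the risk of accidentally calling fit_transform on new messages.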

Model Evaluation

Evaluate the performance of your model with scikit-learn's classification_report function. It expects the true labels first and the predictions second, so pass the actual training set labels followed by the training set predictions. Do the same for the test set.

```python
# classification_report(y_true, y_pred): true labels first, predictions second
print(classification_report(y_train, train_pred))
print(classification_report(y_test, test_pred))
```

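The higher the precision and recall for both classes, and the higher the overall accuracy, the better the model. Compare the training and test reports as well: a test score much lower than the training score suggests the model is overfitting.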

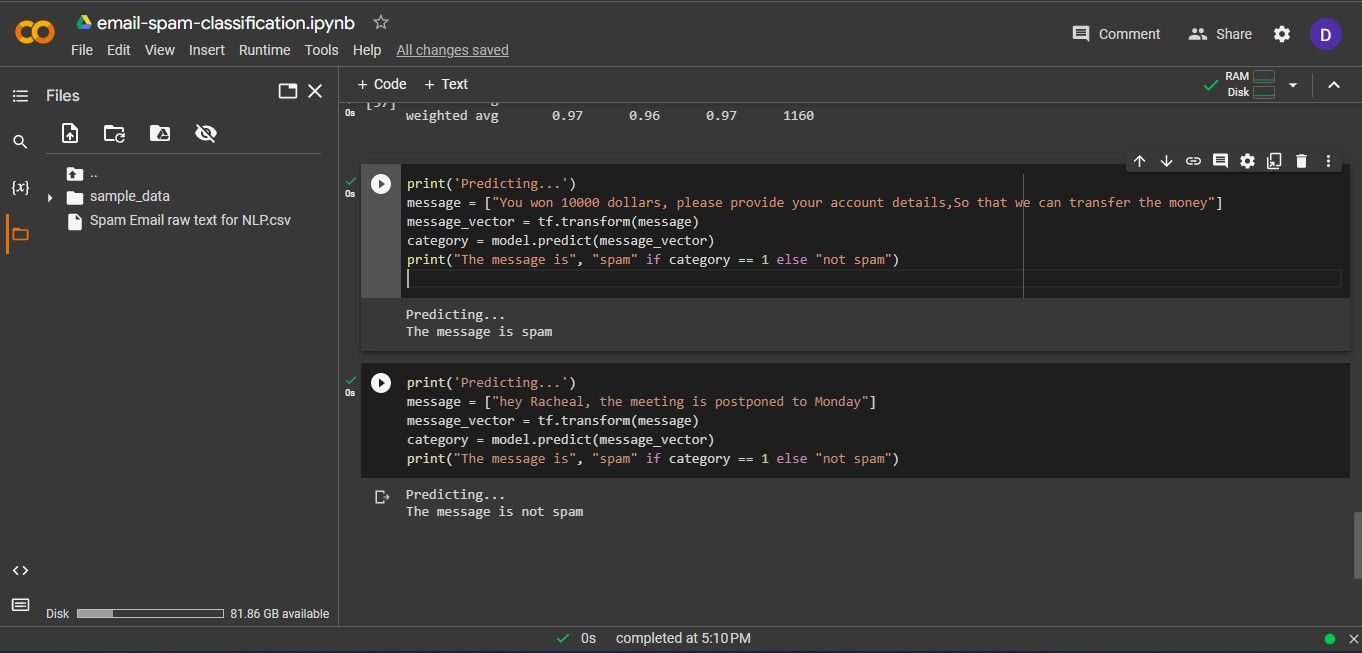
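The argument order matters because precision and recall are not symmetric: swapping the true labels and the predictions swaps the two metrics for each class. The sketch below uses made-up labels with precision_score and recall_score, which compute the same per-class values that classification_report prints for class 1.

```python
from sklearn.metrics import precision_score, recall_score

# Hypothetical labels and predictions: 1 = spam, 0 = ham
y_true = [1, 1, 1, 1, 0, 0, 0, 0]
y_pred = [1, 1, 0, 0, 1, 0, 0, 0]

# Correct order: true labels first, predictions second
p = precision_score(y_true, y_pred)  # 2 of the 3 messages predicted as spam are spam
r = recall_score(y_true, y_pred)     # 2 of the 4 actual spam messages are caught

# Swapping the arguments swaps the two metrics
p_swapped = precision_score(y_pred, y_true)
r_swapped = recall_score(y_pred, y_true)

print(p, r, p_swapped, r_swapped)
```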

Results of Classifying Your Own Messages

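Transform the message into a vector with the fitted TF-IDF vectorizer, tf. Call transform rather than fit_transform so that the message is mapped onto the vocabulary learned from the training corpus. Then use the model to predict whether the message is spam or ham and print the result.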

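```python
print('Predicting...')

message = ["You won 10000 dollars, please provide your account "
           "details, so that we can transfer the money"]

# transform (not fit_transform) reuses the vocabulary learned from the corpus
message_vector = tf.transform(message)

category = model.predict(message_vector)

# predict returns an array with one label per message
print("The message is", "spam" if category[0] == 1 else "not spam")
```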

Replace the message with your own.
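Because transform only knows the vocabulary learned during fitting, words the vectorizer never saw are silently dropped from a new message. The sketch below fits a separate toy vectorizer (toy_tf, hypothetical) on two made-up messages to show this. Note also that the training corpus was cleaned (stopwords removed and words lemmatized) before vectorizing, while the example message above is passed in raw; TfidfVectorizer lowercases text by default but does not apply those other steps, so running your message through the same cleaning first may give more consistent results.

```python
from sklearn.feature_extraction.text import TfidfVectorizer

toy_tf = TfidfVectorizer()
toy_tf.fit(["win free cash now", "team meeting at noon"])

# transform maps new text onto the vocabulary learned above
known = toy_tf.transform(["free cash"])
unknown = toy_tf.transform(["lottery jackpot"])

print(known.shape, unknown.shape)  # one row, one column per vocabulary term
print(known.nnz, unknown.nnz)      # number of non-zero entries in each row
```

A message made entirely of unseen words becomes an all-zero vector, so the model's prediction for it falls back on the class priors rather than on the message content.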

The model can classify new, unseen messages as spam or ham.

The Challenge Facing Spam Classification in Applications

The main challenge facing spam classification in applications is misclassification. Machine learning models are not always correct: they may classify spam as ham and vice versa. Classifying ham as spam is especially costly, because the filter may remove legitimate email from the user's inbox, causing them to miss important messages.