Web scraping is a popular technique for gathering large amounts of data from web pages quickly and efficiently. In the absence of an API, web scraping can be the next-best approach.

Rust’s speed and memory safety make the language well suited to building web scrapers. Rust is home to many powerful parsing and data extraction libraries, and its robust error handling helps keep web data collection efficient and reliable.

Web Scraping in Rust

Many popular libraries support web scraping in Rust, including reqwest, scraper, select, and html5ever. A common approach is to combine reqwest for fetching pages with scraper for parsing them.

The reqwest library provides functionality for making HTTP requests to web servers. Reqwest is built on top of the hyper HTTP crate and provides a high-level API for standard HTTP features.

Scraper is a powerful web scraping library that parses HTML documents (it builds on the html5ever parser) and extracts data using CSS selectors.

After creating a new Rust project with the cargo new command, add the reqwest and scraper crates to the dependencies section of your Cargo.toml file:

```toml
[dependencies]
reqwest = { version = "0.11", features = ["blocking"] }
scraper = "0.12.0"
```

You’ll use reqwest to send HTTP requests (the blocking feature enables the synchronous API used in this article) and scraper for parsing.

Retrieving Webpages With Reqwest

Before you can parse a webpage and extract specific data, you need to request its contents.

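You can send a GET request and retrieve the HTML source of a page by calling reqwest’s blocking get function and then the text method on the response:

```rust
use reqwest::blocking::get;

// Fetch the page and return its HTML source as a String.
// Each unwrap() panics if the request fails or the body can't be read.
fn retrieve_html() -> String {
    get("https://news.ycombinator.com").unwrap().text().unwrap()
}
```

The get function sends a GET request to the URL and returns a response, and the text method reads the response body into a String.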

Parsing HTML With Scraper

The retrieve_html function returns the text of the HTML, and you’ll need to parse the HTML text to retrieve the specific data you need.

Scraper provides the Html and Selector types for working with HTML. Html parses a document into a tree you can query, and Selector holds a compiled CSS selector that you use to pick out specific elements from that tree.

Here’s how you can retrieve all the titles on a page:

```rust
use scraper::{Html, Selector};

fn main() {
    // Fetch the page and read the body as text (panics on failure)
    let response = reqwest::blocking::get("https://news.ycombinator.com/")
        .unwrap()
        .text()
        .unwrap();

    // Parse the HTML document
    let doc_body = Html::parse_document(&response);

    // Compile a selector for elements with the titleline class
    let title_selector = Selector::parse(".titleline").unwrap();

    for title in doc_body.select(&title_selector) {
        // Collect the element's text nodes and print the first one
        let titles = title.text().collect::<Vec<_>>();
        if let Some(first) = titles.first() {
            println!("{}", first);
        }
    }
}
```

The parse_document function of the Html type parses the HTML text, and Selector::parse compiles a CSS selector (in this case, the titleline class). Calling select on the parsed document returns the elements that match that selector.

The for loop iterates over these elements and prints the first block of text from each.

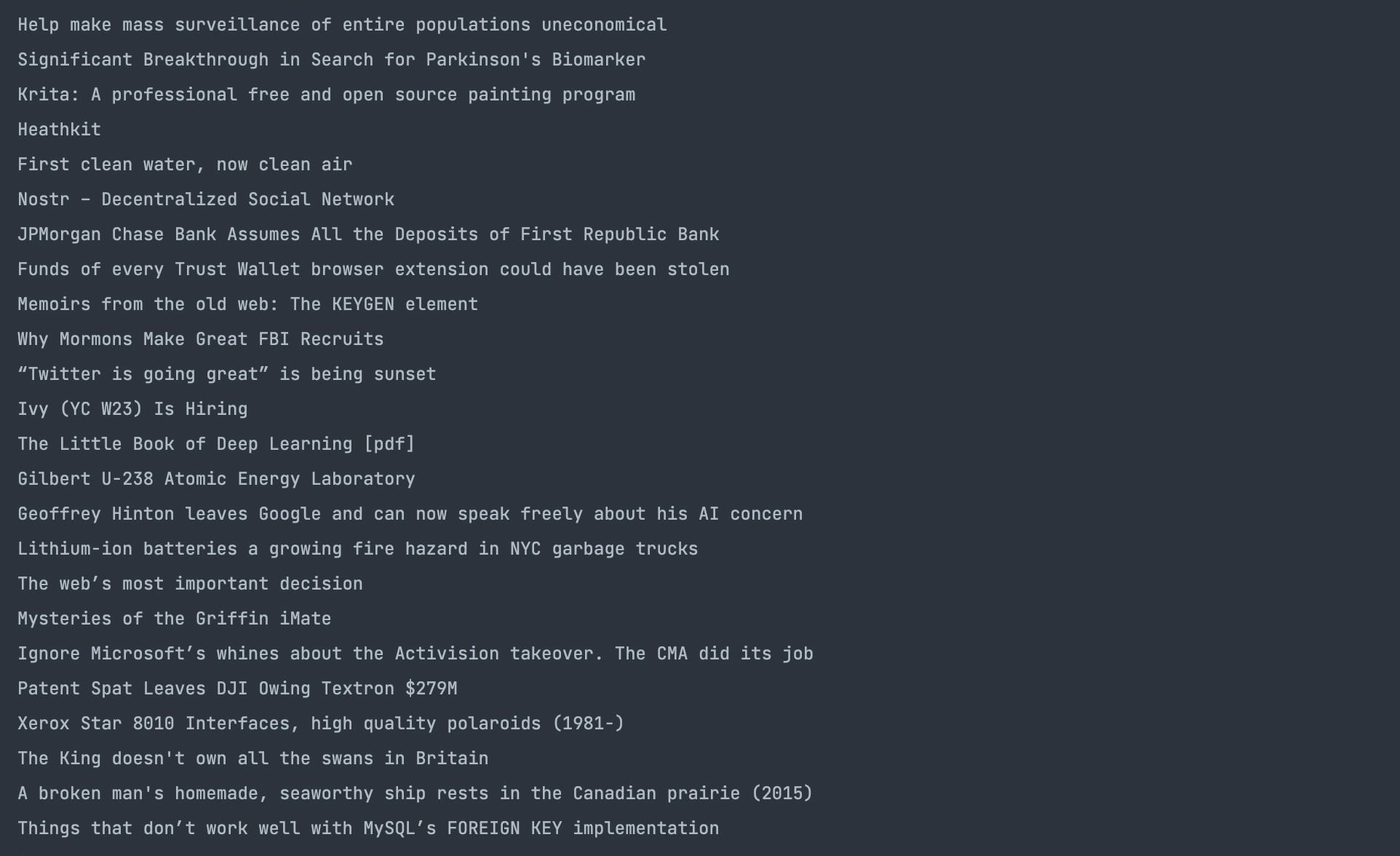

Here’s the result of the operation:

Selecting Attributes With Scraper

To select an attribute value, retrieve the required elements as before, then call value() on each element to get the underlying Element and use its attr method:

```rust
use reqwest::blocking::get;
use scraper::{Html, Selector};

fn main() {
    // Fetch and parse the page (panics on failure)
    let response = get("https://news.ycombinator.com").unwrap().text().unwrap();
    let html_doc = Html::parse_document(&response);

    // Compile both selectors once, outside the loops
    let class_selector = Selector::parse(".titleline").unwrap();
    let link_selector = Selector::parse("a").unwrap();

    for element in html_doc.select(&class_selector) {
        // Search for <a> tags inside each .titleline element
        for link in element.select(&link_selector) {
            // attr returns None if the tag has no href attribute
            if let Some(href) = link.value().attr("href") {
                println!("{}", href);
            }
        }
    }
}
```

After Selector::parse compiles the titleline selector and select retrieves the matching elements, the outer for loop iterates over them. Inside the loop, the code selects the a tags within each element and reads each tag’s href attribute with the attr method.

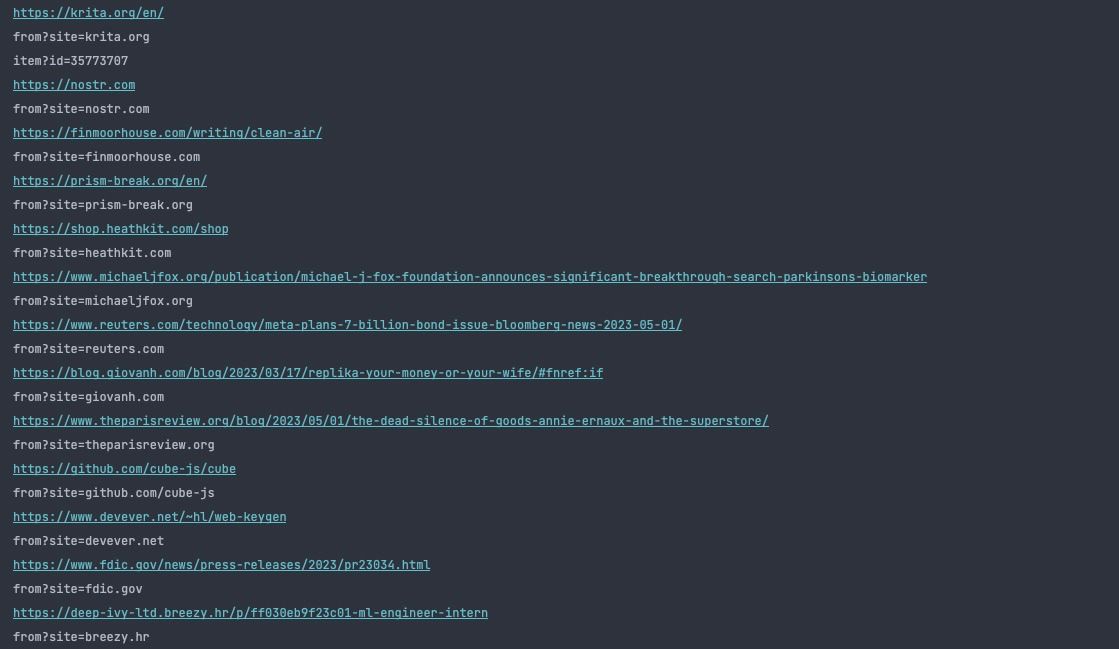

The main function prints these links, with a result like this one:

You Can Build Sophisticated Web Applications in Rust

Recently, Rust has been gaining adoption as a language for web development from front-end to server-side app development.

You can leverage WebAssembly to build full-stack web applications with libraries like Yew and Percy, or build server-side applications with Actix, Rocket, and a host of other libraries in the Rust ecosystem that provide functionality for building web applications.