Large language models, known generally (and inaccurately) as AIs, have been threatening to upend the publishing, art, and legal worlds for months. One downside is that using LLMs such as ChatGPT means creating an account and having someone else's computer do the work. But you can run a trained LLM on your Raspberry Pi to write poetry, answer questions, and more.

What Is a Large Language Model?

Large language models use machine learning algorithms to find relationships and patterns between words and phrases. Trained on vast quantities of data, they are able to predict what words are statistically likely to come next when given a prompt.

If you were to ask thousands of people how they were feeling today, the responses would be along the lines of, "I'm fine", "Could be worse", "OK, but my knees are playing up". The conversation would then turn in a different direction. Perhaps the person would ask about your own health, or follow up with "Sorry, I've got to run. I'm late for work".

Given this data and the initial prompt, a large language model should be able to come up with a convincing and original reply of its own, based on the likelihood of a certain word coming next in a sequence, combined with a preset degree of randomness, repetition penalties, and other parameters.
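To make the idea concrete, here is a minimal sketch of how a next word might be picked from a set of scores. It is a simplified illustration, not the code llama.cpp actually runs: the function name sample_next_word, the toy scores, and the default values for temperature and repeat_penalty are all assumptions made for this example.

```python
import math
import random

def sample_next_word(scores, history, temperature=0.8, repeat_penalty=1.3, rng=random):
    """Pick the next word from raw scores (higher means more likely)."""
    adjusted = {}
    for word, score in scores.items():
        # Make words that were already used less attractive, so the reply does not loop.
        if word in history:
            score = score / repeat_penalty if score > 0 else score * repeat_penalty
        # A low temperature sharpens the distribution; a high one flattens it.
        adjusted[word] = score / temperature
    # Softmax: turn the adjusted scores into probabilities that sum to 1.
    top = max(adjusted.values())
    weights = {w: math.exp(s - top) for w, s in adjusted.items()}
    total = sum(weights.values())
    probs = {w: v / total for w, v in weights.items()}
    # Draw one word at random, weighted by its probability.
    words = list(probs)
    choice = rng.choices(words, weights=[probs[w] for w in words], k=1)[0]
    return choice, probs

# Invented scores for the word that follows "I'm"; "OK" has already been said.
toy_scores = {"fine": 2.0, "OK": 1.5, "late": 0.5, "terrible": -1.0}
word, probs = sample_next_word(toy_scores, history=["OK"])
print(word, probs)
```

Run it a few times and the chosen word changes, which is the "preset degree of randomness" at work. A real model does the same kind of thing over a vocabulary of tens of thousands of tokens rather than four words.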

The large language models in use today aren't trained on a vox pop of a few thousand people. Instead, they're given an unimaginable amount of data, scraped from publicly available collections, social media platforms, web pages, archives, and occasional custom datasets.

LLMs are trained by human researchers who will reinforce certain patterns and feed them back to the algorithm. When you ask a large language model "what is the best kind of dog?", it'll be able to spin an answer telling you that a Jack Russell terrier is the best kind of dog, and give you reasons why.

But regardless of how intelligent or convincingly and humanly dumb the answer, neither the model nor the machine it runs on has a mind, and they are incapable of understanding either the question or the words which make up the response. It's just math and a lot of data.

Why Run a Large Language Model on Raspberry Pi?

Large language models are everywhere, and are being adopted by large search companies to assist in answering queries.

While it's tempting to throw a natural language question at a corporate black box, sometimes you want to search for inspiration or ask a question without feeding yet more data into the maw of surveillance capitalism.

As an experimental board for tinkerers, the Raspberry Pi single-board computer is philosophically, if not physically, suited to the endeavor.

Meta's LLaMA Is an LLM for Devices

In February 2023, Meta (the company formerly known as Facebook) announced LLaMA, a new LLM boasting language models of between 7 billion and 65 billion parameters. LLaMA was trained using publicly available datasets.

The LLaMA code is open source, meaning that anyone can use and adapt it, and the 'weights' or parameters were posted as torrents and magnet links in a thread on the project's GitHub page.

In March 2023, developer Georgi Gerganov released llama.cpp, which can run on a huge range of hardware, including Raspberry Pi. The code runs locally, and no data is sent to Meta.

Install llama.cpp on Raspberry Pi

There are no published hardware guidelines for llama.cpp, but it is extremely processor, RAM, and storage hungry. Make sure that you're running it on a Raspberry Pi 4B or 400 with as much memory, virtual memory, and SSD space available as possible. An SD card isn't going to cut it, and a case with decent cooling is a must.
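Before you start, it's worth checking how much RAM and swap (virtual memory) your Pi actually has. The sketch below reads /proc/meminfo, which exists on Linux systems such as Raspberry Pi OS. The helper names parse_meminfo and to_gib are made up for this example.

```python
import os

def parse_meminfo(text):
    """Return {field name: value} from /proc/meminfo-style text (sizes are in kB)."""
    values = {}
    for line in text.splitlines():
        name, _, rest = line.partition(":")
        parts = rest.split()
        if parts and parts[0].isdigit():
            values[name.strip()] = int(parts[0])
    return values

def to_gib(kib):
    """Convert the kB figures reported by /proc/meminfo to GiB."""
    return kib / (1024 * 1024)

# Only read the file where it exists, so the script is harmless elsewhere.
if os.path.exists("/proc/meminfo"):
    with open("/proc/meminfo") as f:
        info = parse_meminfo(f.read())
    print(f"RAM:  {to_gib(info.get('MemTotal', 0)):.1f} GiB")
    print(f"Swap: {to_gib(info.get('SwapTotal', 0)):.1f} GiB")
```

If the swap figure is tiny, increase it before going any further.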

We're going to be using the 7 billion parameter model, so visit this LLaMA GitHub thread, and download the 7B torrent using a client such as qBittorrent or aria2.

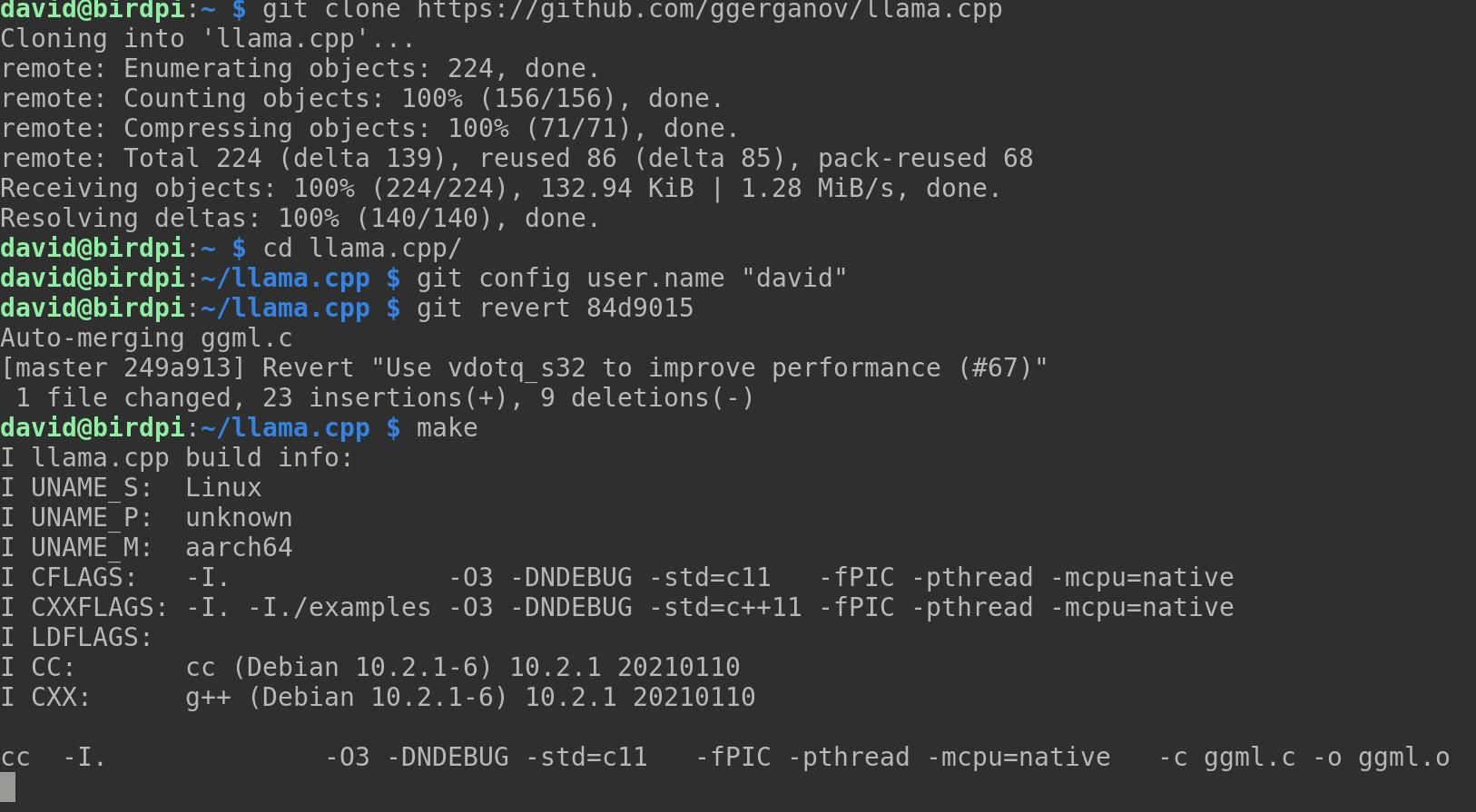

Clone the llama.cpp repository and then use the cd command to move into the new directory:

git clone https://github.com/ggerganov/llama.cpp

cd llama.cpp

If you don't have a compiler installed, install one now with:

sudo apt-get install g++

Now compile the project with this command:

make

There's a chance that llama.cpp will fail to compile, and you'll see a bunch of error messages relating to "vdotq_s32". If this happens, you need to revert a commit. First, set your local git user:

git config user.name "david"

Now you can revert a previous commit:

git revert 84d9015

A git commit message will open in the nano text editor. Press Ctrl + O to save, then Ctrl + X to exit nano. llama.cpp should now compile without errors when you enter:

make

You'll need to create a directory for the weighted models you intend to use:

mkdir models

Now transfer the weighted models from the LLaMA directory:

mv ~/Downloads/LLaMA/* ~/llama.cpp/models/

Make sure you have Python 3 installed on your Pi, and install the llama.cpp dependencies:

python3 -m pip install torch numpy sentencepiece

The NumPy version may cause issues. Upgrade it:

python3 -m pip install --upgrade numpy

Now convert the 7B model to ggml FP16 format:

python3 convert-pth-to-ggml.py models/7B/ 1

The previous step is extremely memory intensive and, by our reckoning, uses at least 16GB RAM. It's also super slow and prone to failure.

You will get better results by following these instructions in parallel on a desktop PC, including the quantization step described next, then copying the file models/7B/ggml-model-q4_0.bin to the same location inside the llama.cpp directory on your Raspberry Pi.
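A quick back-of-the-envelope calculation shows why the FP16 conversion is such a struggle and why the 4-bit file is the one you want on the Pi. This sketch assumes a round 7 billion parameters and counts only the raw weights. The function name weights_size_gb is invented for the example.

```python
PARAMS = 7_000_000_000  # assumed round figure for the "7B" model

def weights_size_gb(params, bits_per_weight):
    """Size of the raw weights in gigabytes (10^9 bytes)."""
    return params * bits_per_weight / 8 / 1e9

fp16_gb = weights_size_gb(PARAMS, 16)  # 16 bits = 2 bytes per weight
q4_gb = weights_size_gb(PARAMS, 4)     # 4 bits = half a byte per weight

print(f"FP16:  {fp16_gb:.1f} GB")
print(f"4-bit: {q4_gb:.1f} GB")
```

Roughly 14GB of FP16 weights won't fit in the 8GB of RAM on the largest Raspberry Pi 4B, while around 3.5GB of 4-bit weights will. Expect the real quantized file to be somewhat larger than this estimate, because the format stores extra scaling information alongside the weights.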

Quantize the model to 4 bits:

./quantize.sh 7B

That's it. LLaMA LLM is now installed on your Raspberry Pi, and ready to use!

Using llama.cpp on Raspberry Pi

To get started with llama.cpp, make sure you're in the project directory and enter the following command:

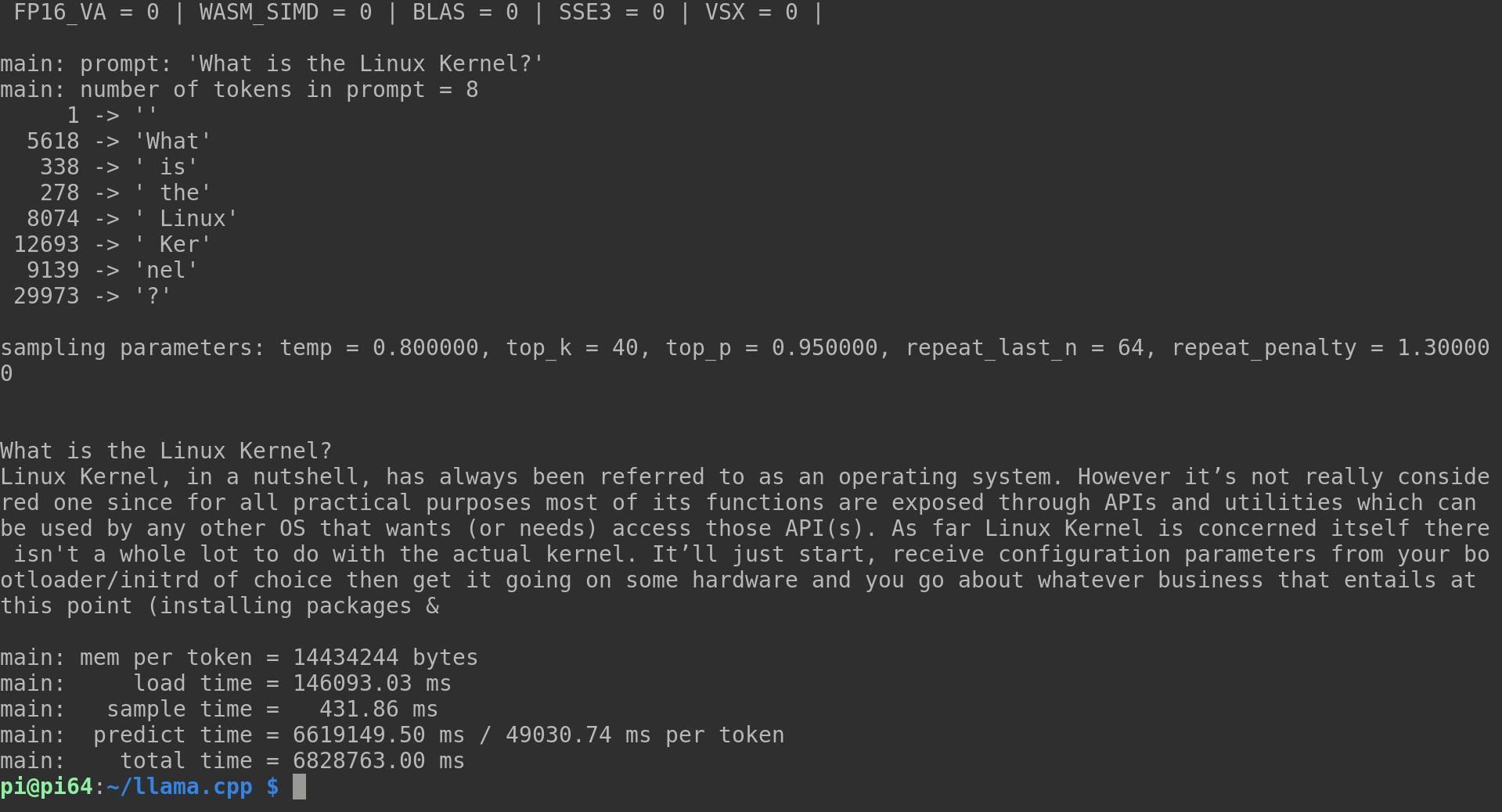

./main -m ./models/7B/ggml-model-q4_0.bin -t 4 -n 128 -p "What is the Linux Kernel?"

The -m option is to direct llama.cpp to the model you want it to use; -t indicates the number of threads you want it to use; -n is the number of tokens to predict; -p is your prompt. You can customize any and all of these variables.
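If you plan to try lots of prompts, you may prefer to build the command in a small script instead of retyping it. The following is only a sketch: build_command is a hypothetical helper, and it uses nothing beyond the four options described above.

```python
import shlex

def build_command(model, prompt, threads=4, n_predict=128, binary="./main"):
    """Assemble the llama.cpp command line as a list of arguments."""
    return [
        binary,
        "-m", model,           # path to the model file
        "-t", str(threads),    # number of threads
        "-n", str(n_predict),  # number of tokens to predict
        "-p", prompt,          # the prompt itself
    ]

cmd = build_command("./models/7B/ggml-model-q4_0.bin", "What is the Linux Kernel?")

# Print the command as you would type it in a terminal.
print(shlex.join(cmd))

# To actually run it from the llama.cpp directory:
#   import subprocess
#   subprocess.run(cmd, check=True)
```

Passing the arguments as a list means you don't have to worry about quoting prompts that contain spaces or punctuation.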

Settle in for a long wait, because while the Raspberry Pi is excellent at what it does, it wasn't designed for this kind of CPU activity. In our example prompt, llama broke the text down into eight individual tokens, before giving the following response:

Linux Kernel, in a nutshell, has always been referred to as an operating system. However it’s not really considered one since for all practical purposes most of its functions are exposed through APIs and utilities which can be used by any other OS that wants (or needs) access those API(s). As far Linux Kernel is concerned itself there isn't a whole lot to do with the actual kernel. It’ll just start, receive configuration parameters from your bootloader/initrd of choice then get it going on some hardware and you go about whatever business that entails at this point

While this was a fairly coherent answer, a second attempt resulted in something resembling roughly chopped word salad:

Most of you have probably heard about it but not really know what they are talking about. We will be discussing this in details because understanding them fully helps us to use our computers more efficiently and also make better decisions when buying new hardware or software for your PCs at home, offices etc.. The Linux Kernel is the backbone of most operating systems that runs on a computer system such as android which is an open source Operating System based in part from this kernel. But what exactly do they mean by saying linux kernal?

The Linus Torvalds has written down his thoughts (Knowledge)

It's worth mentioning at this point that each of these responses took close to two hours to produce, and had all four CPU cores working at 100% throughout. Work on the project is moving fast, however, and you can expect upcoming ARM optimizations to bring the time down.
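To get a feel for what that means per token, here is a rough calculation. It assumes a run took exactly two hours and produced the full 128 tokens requested with -n, and it ignores the time spent loading the model and reading the prompt, so treat the result as a ballpark figure.

```python
def seconds_per_token(total_seconds, tokens):
    """Average generation time per token."""
    return total_seconds / tokens

def estimate_minutes(tokens, rate):
    """Rough wait in minutes for a given number of tokens."""
    return tokens * rate / 60

rate = seconds_per_token(2 * 60 * 60, 128)
print(f"{rate:.2f} seconds per token")                      # 56.25 seconds per token
print(f"{estimate_minutes(32, rate):.0f} minutes for -n 32")  # 30 minutes for -n 32
```

Lowering -n is the easiest way to shorten the wait while you experiment.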

You can also simulate entire conversations with your LLM instead of feeding it prompts one at a time. Adding the -i option will start llama in interactive mode, while --interactive-start will ask you for input at the start. For a full list of available options, run:

./main -h

Be aware that LLaMA doesn't have restrictive rules. It will, on occasion, be sexist, racist, homophobic, and very wrong.

A Large Language Model Is No Substitute for Real Knowledge

Running Meta's LLaMA on Raspberry Pi is insanely cool, and you may be tempted to turn to your virtual guru for technical questions, life advice, friendship, or as a real source of knowledge. Don't be fooled. Large language models know nothing, feel nothing, and understand nothing. If you need help with something, it's better to talk to a human being or to read something written by a human being.

If you're short of time, you could speed-read it in your Linux terminal!