HDR technology is now so widespread that popular streaming services such as Amazon Prime, Disney+, and Netflix support HDR content. In fact, if you were to shop for a new TV or monitor today, you'd be surprised at how many products list HDR on their spec sheets.

This raises the question: what exactly is HDR? How does it work, and how does it compare to regular SDR?

What Is SDR?

Standard Dynamic Range (SDR) is a video standard that has been in use since the days of CRT monitors. Despite the market success of HDR screen technology, SDR is still the default format for TVs, monitors, and projectors. Although it dates back to old CRT monitors (and is actually hampered by the limitations of CRT technology), SDR is still an acceptable format today. In fact, the vast majority of video content, whether games, movies, or YouTube videos, still uses SDR. Basically, if a device or piece of content is not rated as HDR, you're probably using SDR.

What Is HDR?

High Dynamic Range (HDR) is the newer standard for images and videos. HDR first became popular among photographers who wanted to properly expose a composition containing two subjects with a 13-stop difference in exposure value. Such a wide dynamic range allows proper exposure of real-life scenes that previously couldn't be captured in SDR.
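Because each photographic stop represents a doubling of light, a difference measured in stops translates directly into a contrast ratio of 2 to the power of the stop count. The minimal Python sketch below shows that conversion; the function names are illustrative, and the 1,000:1 display in the usage lines is a hypothetical example, not a figure from this article.

```python
import math


def stops_to_contrast_ratio(stops: float) -> float:
    """Brightest-to-darkest light ratio spanned by `stops` stops (each stop doubles the light)."""
    return 2.0 ** stops


def contrast_ratio_to_stops(ratio: float) -> float:
    """Inverse conversion: how many stops a given contrast ratio spans."""
    return math.log2(ratio)


if __name__ == "__main__":
    # The 13-stop scene described above
    print(f"13 stops -> {stops_to_contrast_ratio(13):,.0f}:1")
    # Hypothetical display with a 1,000:1 contrast ratio
    print(f"1,000:1 -> {contrast_ratio_to_stops(1000):.1f} stops")
```

A 13-stop scene therefore spans a brightest-to-darkest ratio of 8,192:1, which is why capturing both subjects correctly was out of reach for SDR.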

More recently, HDR was introduced to movies, videos, and even games. Where SDR content suffered from blown-out skies, indistinguishable blacks, and banding in high-contrast scenes, HDR portrays these scenes realistically thanks to a wider color space, greater color depth, and higher luminance.

Broader color space, bigger color depth, and higher luminance make HDR better than SDR—but by how much?

Comparing HDR vs. SDR

If you've ever shopped for a monitor, you've probably noticed specs such as sRGB, nits or cd/m², and 10-bit color. These describe color space, luminance, and color depth, respectively. Together, they determine how vibrant, smoothly blended, and well exposed the subjects in an image look.

To better understand the difference between HDR and SDR, let us compare the two through their color gamut, brightness, and color depth. Let's start with the color gamut.

Color Gamut

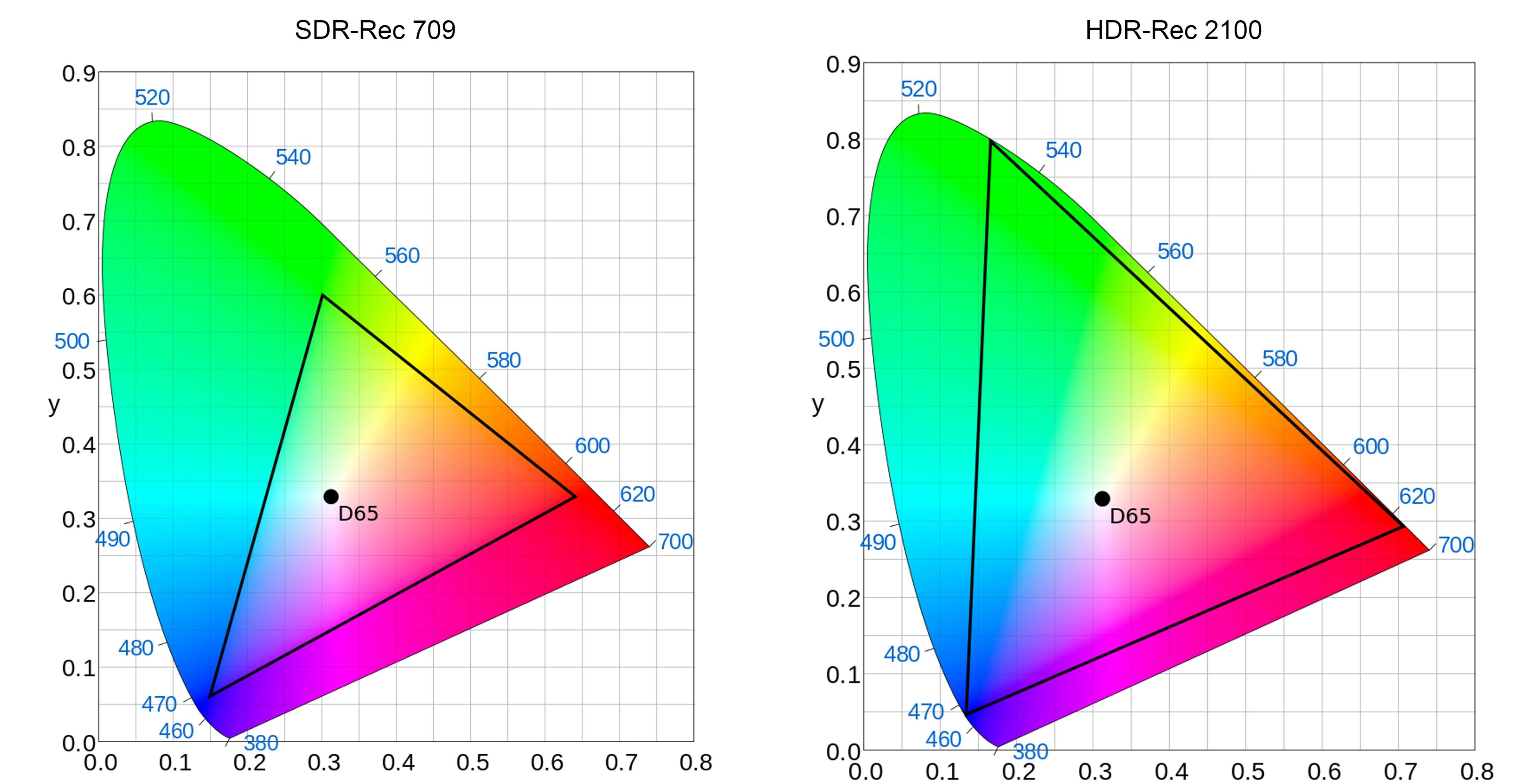

A color gamut is the range of colors that a display or color space can reproduce. To represent all the colors the human eye can see, the industry uses the CIE 1931 chromaticity diagram, which serves as the standard against which different color spaces are compared. SDR uses a color space called Rec 709, while HDR uses Rec 2100. The triangles in the illustration above show how much of the diagram each color space covers.

As you can see, the color space used by HDR's Rec 2100 is significantly larger than that of SDR's Rec 709.
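The size difference can be quantified by treating each color space as a triangle on the CIE 1931 xy diagram and computing its area with the shoelace formula. The sketch below uses the published xy coordinates of the Rec 709 primaries and of the Rec 2020 primaries, which Rec 2100 reuses; the constant and function names are illustrative. Keep in mind that xy areas are only a rough indicator, since the diagram is not perceptually uniform.

```python
# CIE 1931 xy chromaticity coordinates of the red, green, and blue primaries
REC709 = [(0.640, 0.330), (0.300, 0.600), (0.150, 0.060)]   # SDR
REC2100 = [(0.708, 0.292), (0.170, 0.797), (0.131, 0.046)]  # HDR (Rec 2020 primaries)


def triangle_area(points):
    """Area of a triangle given its three (x, y) vertices, via the shoelace formula."""
    (x1, y1), (x2, y2), (x3, y3) = points
    return abs(x1 * (y2 - y3) + x2 * (y3 - y1) + x3 * (y1 - y2)) / 2


if __name__ == "__main__":
    a709 = triangle_area(REC709)
    a2100 = triangle_area(REC2100)
    print(f"Rec 709 area:  {a709:.4f}")
    print(f"Rec 2100 area: {a2100:.4f}")
    print(f"Rec 2100 / Rec 709: {a2100 / a709:.2f}x")
```

On this diagram, the Rec 2100 triangle comes out roughly 1.9 times the area of the Rec 709 triangle.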

With HDR's larger color space, filmmakers and other content creators have a much wider spectrum of greens, reds, and yellows with which to portray their work accurately and artistically. As a result, viewers watching HDR see more vibrant colors, especially in greens, yellows, reds, and everything in between.

As for SDR, its color space still covers a balanced share of each primary color, so colorists can still produce beautiful work, albeit within significant limitations.

Brightness

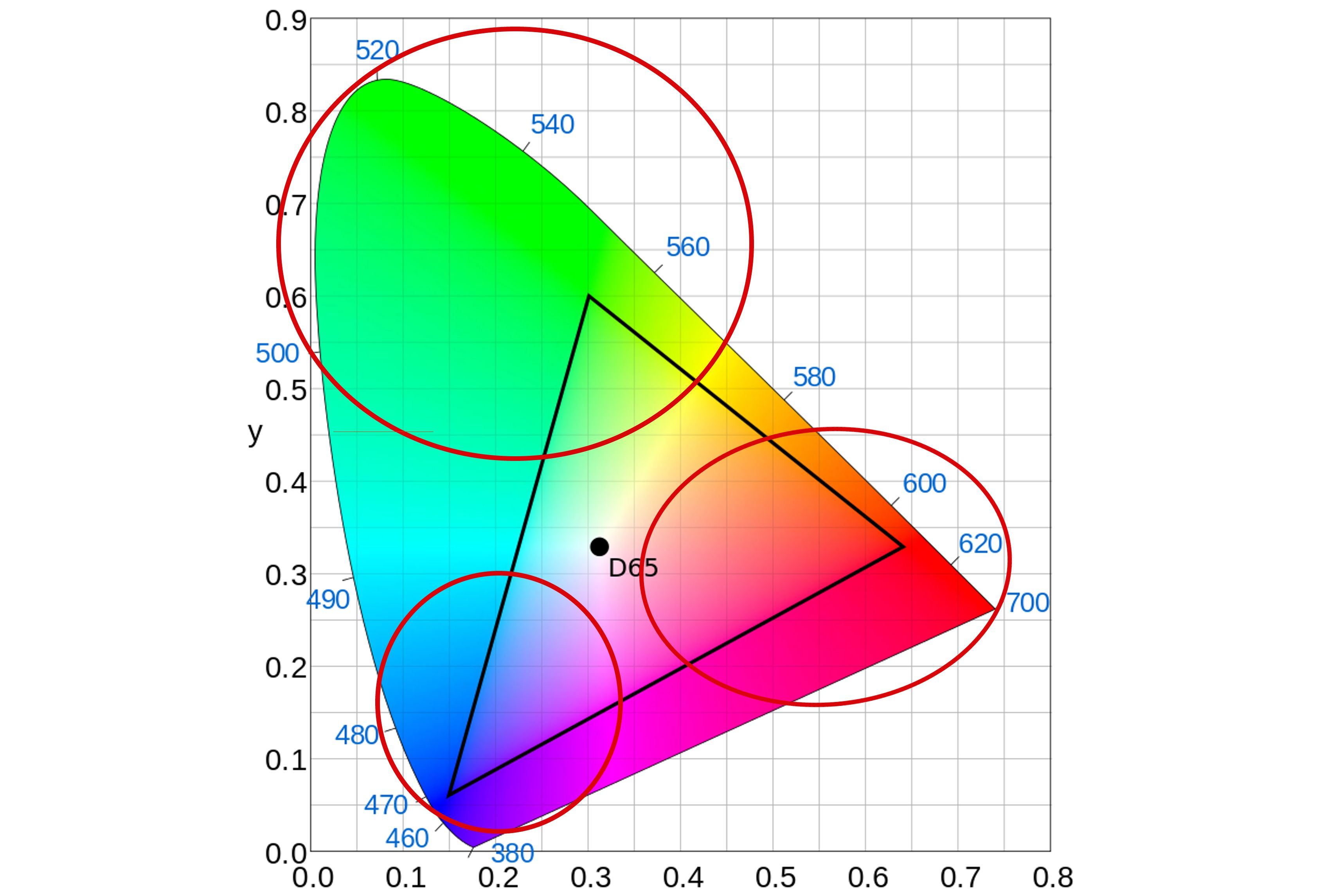

You've seen the color gamut portrayed in 2D, like the diagram used earlier. However, the full CIE 1931 color space is actually three-dimensional: the third dimension represents the perceived brightness (luminance) of a color. Brightness and saturation together shape how humans perceive a given color.

A display that can output more luminance can render every hue in the 2D diagram across a wider range of brightness levels, and thus can display more of the colors visible to the human eye. Luminance is measured in nits, or candelas per square meter (cd/m²); one nit equals 1 cd/m².
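The third dimension can be made concrete with the standard CIE conversion from xyY, a point on the 2D chromaticity diagram (x, y) plus a luminance Y, to XYZ tristimulus values. In this minimal Python sketch the function name is illustrative; the D65 white point coordinates used in the usage lines are the standard ones.

```python
def xyY_to_XYZ(x: float, y: float, Y: float) -> tuple[float, float, float]:
    """Convert a CIE xyY color to CIE XYZ tristimulus values.

    (x, y) locate the color on the 2D chromaticity diagram; Y is the
    luminance, the third dimension that the flat diagram leaves out.
    """
    if y == 0:
        # Degenerate chromaticity: treat as black by convention
        return (0.0, 0.0, 0.0)
    X = x * Y / y
    Z = (1.0 - x - y) * Y / y
    return (X, Y, Z)


if __name__ == "__main__":
    # Same chromaticity (D65 white), two different luminance levels
    print(xyY_to_XYZ(0.3127, 0.3290, 1.0))
    print(xyY_to_XYZ(0.3127, 0.3290, 10.0))
```

Both calls sit at exactly the same point on the 2D diagram; only Y changes. A brighter display can reach larger Y values for each chromaticity, so it covers more of the 3D volume.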

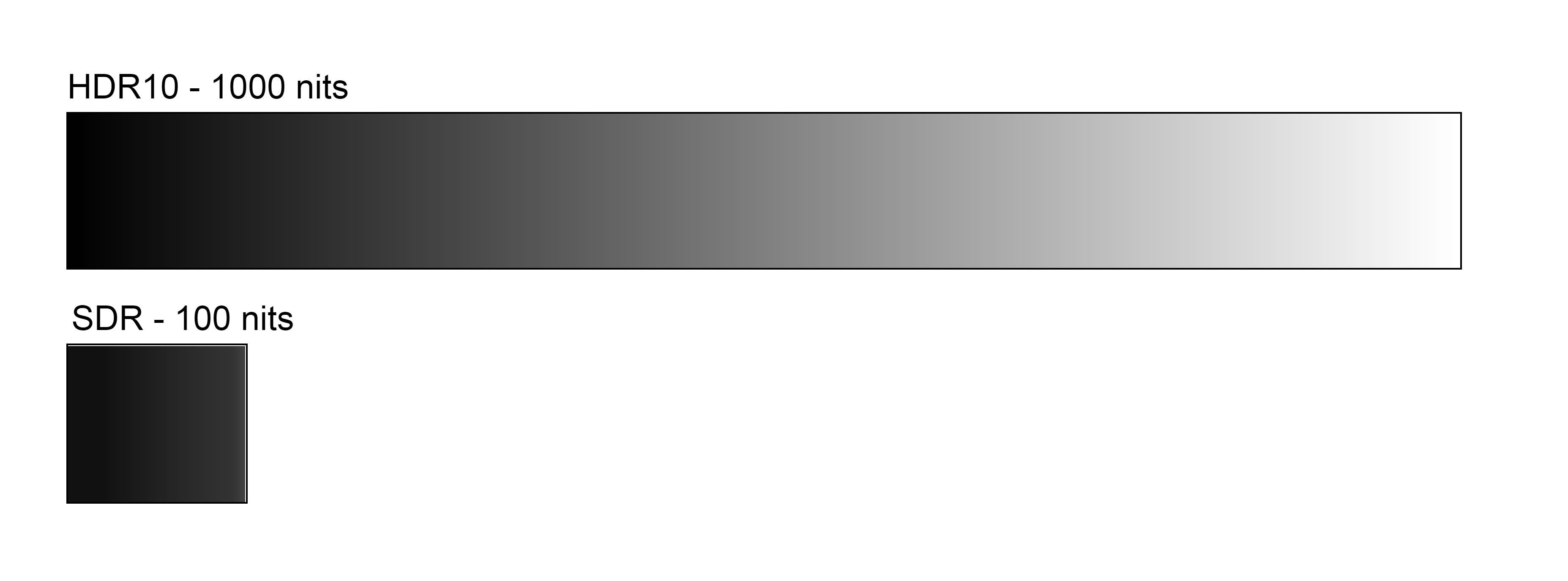

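SDR content is typically mastered for a peak brightness of around 100 nits (100 cd/m²). In contrast, HDR10 (the most common HDR standard) is commonly mastered at 1,000 nits or more, and the format itself can describe brightness levels of up to 10,000 nits. This extra headroom lets viewers watching in HDR10 see a wider variety of primary and secondary colors.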
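HDR10 describes brightness in absolute terms using the PQ transfer function (SMPTE ST 2084), whose signal range tops out at 10,000 nits. The sketch below implements that curve and its inverse in Python using the constants from the standard; the function and constant names are illustrative, and it covers only the transfer function, not how a particular display handles content brighter than its own peak.

```python
# SMPTE ST 2084 (PQ) constants, as used by HDR10
M1 = 2610 / 16384
M2 = 2523 / 4096 * 128
C1 = 3424 / 4096
C2 = 2413 / 4096 * 32
C3 = 2392 / 4096 * 32
PEAK_NITS = 10_000.0  # absolute ceiling of the PQ signal range


def pq_to_nits(signal: float) -> float:
    """Decode a normalized PQ signal value (0..1) to luminance in nits."""
    p = signal ** (1 / M2)
    return PEAK_NITS * (max(p - C1, 0.0) / (C2 - C3 * p)) ** (1 / M1)


def nits_to_pq(nits: float) -> float:
    """Encode a luminance in nits (0..10,000) as a normalized PQ signal value."""
    y = (nits / PEAK_NITS) ** M1
    return ((C1 + C2 * y) / (1 + C3 * y)) ** M2


if __name__ == "__main__":
    for nits in (100, 1_000, 10_000):
        print(f"{nits:>6} nits -> PQ signal {nits_to_pq(nits):.3f}")
```

Because the curve is perceptual rather than linear, SDR's 100-nit reference level already lands around the middle of the PQ signal range (about 0.51), leaving the upper half for highlights up to 10,000 nits.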

Color Depth

Although the human eye perceives light as a continuous, analog signal, digital displays must approximate it with discrete digital values that processors can recreate. The number of bits used to describe each color is known as color depth or bit depth.

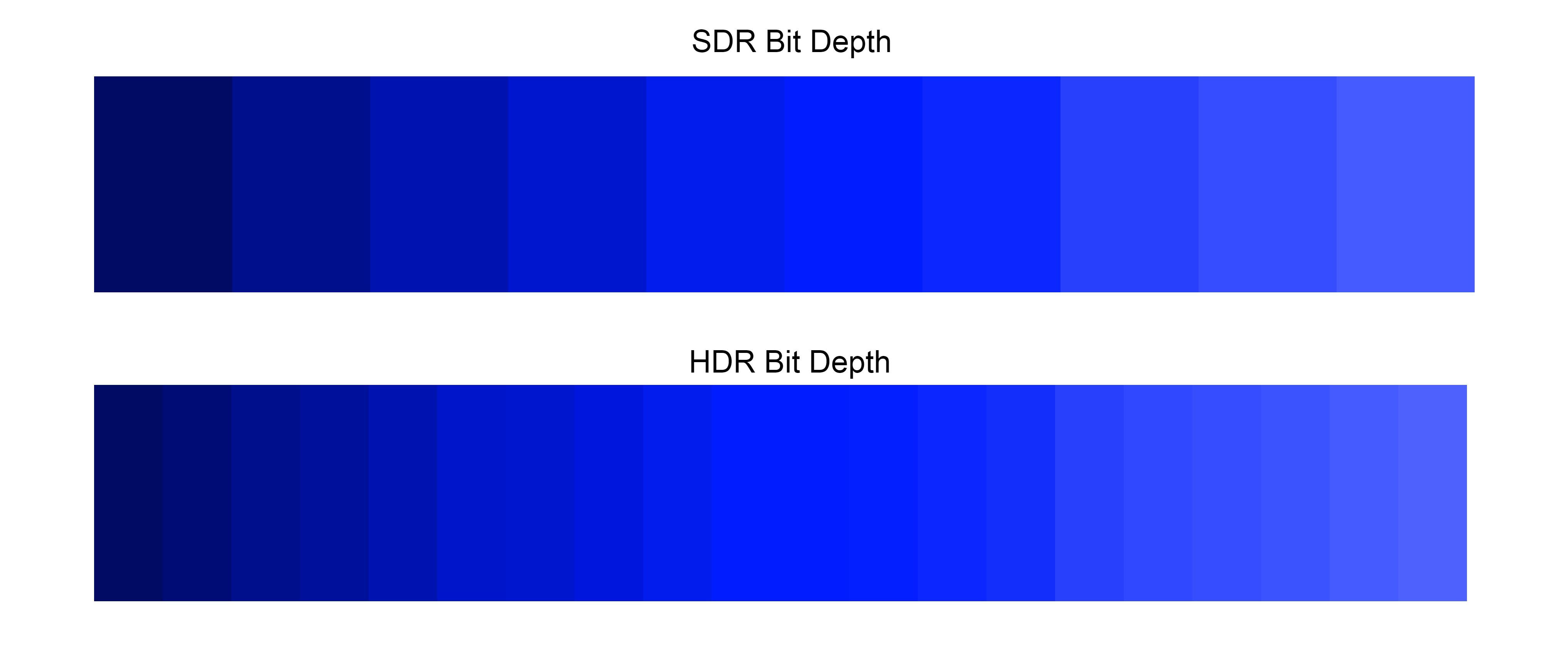

The human eye perceives color continuously, while digital displays use color depth to tell each pixel which color to show. The more bits available per pixel, the more colors it can display.

SDR uses 8 bits per color channel, meaning each primary color can take one of 256 levels. Since there are three primary colors, an 8-bit panel can display a maximum of 256 × 256 × 256 = 16,777,216 shades of color.

To put that into perspective, the human eye is commonly estimated to distinguish around 10 million colors. This means SDR can already display more distinct colors than our eyes can tell apart, which is why 8-bit color is still the standard for visual media today.

In contrast, HDR10 uses a 10-bit color depth (1,024 levels per primary color), allowing a maximum of about 1.07 billion shades of color!
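Both color counts follow from the same arithmetic: 2 raised to the bit depth gives the levels per channel, and cubing that (one factor per primary) gives the total. A small Python sketch, with illustrative function names:

```python
def levels_per_channel(bits: int) -> int:
    """Number of distinct values one color channel can take at `bits` bits."""
    return 2 ** bits


def total_colors(bits: int, channels: int = 3) -> int:
    """Distinct colors for a pixel with `channels` primaries at `bits` bits each."""
    return levels_per_channel(bits) ** channels


if __name__ == "__main__":
    for bits in (8, 10):
        print(f"{bits}-bit: {levels_per_channel(bits):,} levels per channel, "
              f"{total_colors(bits):,} colors")
```

Moving from 8 to 10 bits multiplies the levels per channel by 4 and the total color count by 4³ = 64.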

Impressive, but since the human eye can only distinguish around 10 million colors, isn't a 10-bit color depth overkill? Can you even see the difference?

Yes, you absolutely can! But how?

People can perceive more colors with a 10-bit depth because the human eye does not perceive the color hues equally.

If you look at the CIE 1931 chromaticity diagram (pictured above), you can see that the human eye can distinguish many more greens and reds than blues. Although an 8-bit color depth may come close to covering all the blues your eyes can perceive, it falls short with reds, and especially with greens. So while you will see roughly the same range of blues in 8-bit and 10-bit, reds and greens will show more gradations on a system using a 10-bit color depth.
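One way to see what the extra precision buys is to round the same smooth gradient at both bit depths and count the distinct steps. A 10-bit channel has 1,024 levels instead of 256, so each step is about four times smaller, and fewer, larger steps are what show up as visible banding. In this Python sketch the function names and the 40% to 50% ramp are hypothetical, chosen only to mimic a subtle gradient in one channel.

```python
def quantize(value: float, bits: int) -> int:
    """Map a normalized intensity (0.0..1.0) to the nearest integer code at `bits` bits."""
    max_code = 2 ** bits - 1
    return round(value * max_code)


def distinct_steps(start: float, end: float, bits: int, samples: int = 10_000) -> int:
    """Count how many distinct codes a smooth ramp from `start` to `end` is rounded into."""
    codes = {
        quantize(start + (end - start) * i / (samples - 1), bits)
        for i in range(samples)
    }
    return len(codes)


if __name__ == "__main__":
    # Hypothetical subtle gradient spanning 40% to 50% of one channel
    for bits in (8, 10):
        print(f"{bits}-bit: {distinct_steps(0.40, 0.50, bits)} steps")
```

The 10-bit ramp is split into roughly four times as many steps as the 8-bit one, so each band is narrower and harder to notice.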

Pros and Cons of HDR and SDR

HDR and SDR are two standards used in visual digital media, and each has its strengths and weaknesses. Here is a table to show you how one compares with the other:

In terms of color and display, HDR is better than SDR in every way. It provides significant improvements in color space, luminance, and color depth. So, if you have the chance to watch movies, view images, or play games in HDR, you should always do so—but can you?

The problem with HDR is that most available media is not made for HDR. Often, viewing SDR media on an HDR screen with HDR enabled will make your viewing experience worse than watching it on a regular SDR panel.

Another problem is that most HDR devices use HDR10, a loosely defined standard that is nonetheless marketed uniformly. For example, you'll find the HDR10 logo slapped on a subpar panel that cannot perform as well as the 1,000-nit panels shown in HDR10 advertisements.

Although SDR offers only standard picture quality and cannot compete with HDR when HDR works properly, its ease of use, compatibility, and lower cost are why many people still prefer it.

You Need Both HDR and SDR

Now that you know the difference between SDR and HDR standards, it becomes obvious that HDR is the clear winner when it comes to watching entertaining content. This, however, does not mean that you should stop using SDR. The truth is that SDR is still the better standard to use whenever you're not watching or playing HDR-specific content.

If you are buying a new display, it would be wise to invest in a more expensive HDR-capable panel, as it lets you watch both HDR and SDR content. And since SDR content looks bad with HDR10 enabled, you can always turn off HDR when you're watching, playing, or viewing SDR content and applications.

Hopefully, that gives you an idea of how much of an impact HDR brings to the table. Although you will still enjoy most content in SDR for now, it is only a matter of time until HDR gets better support. At that point, it will likely become the standard everybody uses.
