In January 2023, Google announced MusicLM, an experimental AI tool that could generate music based on text descriptions. Alongside the news, Google released a stunning research paper for MusicLM that left many people dazzled by its apparent ability to conjure music from thin air.

Given a text prompt, the model promised to produce high-fidelity music that delivered on all sorts of descriptions, from genre and instrument to abstract captions describing famous artworks. Now that MusicLM is open to the public, we decided to put it to the test.

Google's Attempt to Create an AI Music Generator

Turning a text prompt like "relaxing jazz" into a ready-to-play track is arguably the holy grail of experiments in AI music. Similar to famous AI image generators like DALL-E or Midjourney, you don't need a speck of musical know-how to produce a track with a melody and a beat.

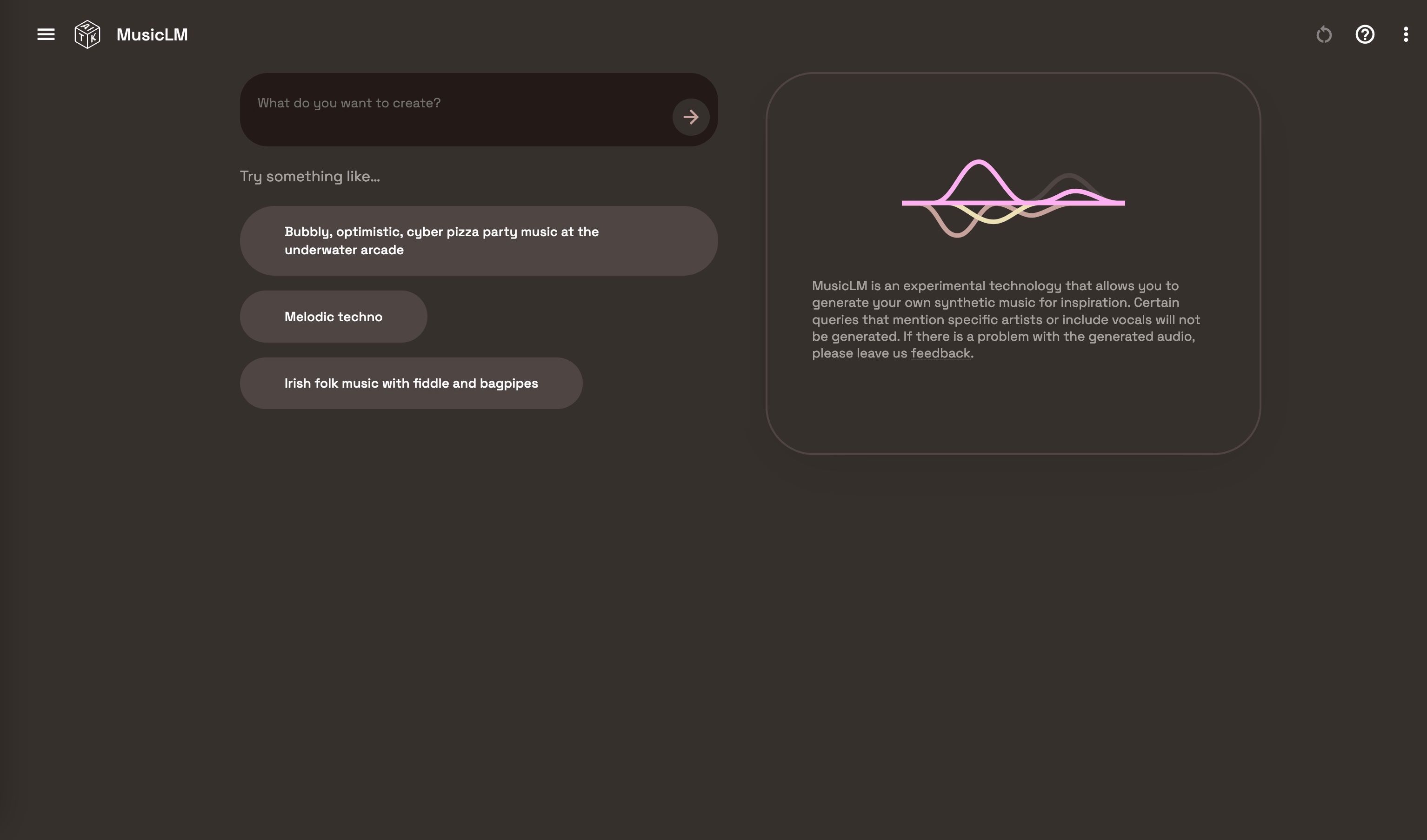

In May 2023, those who signed up to Google's AI Test Kitchen could try the demo out for the first time. Users are greeted by a user-friendly web page and a couple of guiding rules: electronic and classical instruments work best, and don't forget to specify a "vibe". From there, producing a snippet of music is remarkably easy.

Speed is one of the few things that MusicLM truly delivers on, alongside relatively high-fidelity samples. However, the real test can't be measured with a stopwatch alone. Can MusicLM produce real, listenable music based on a few words? Not exactly (we'll get to this shortly).

How to Use MusicLM in Google's AI Test Kitchen

Using MusicLM is easy. If you want to give it a go, sign up for the waitlist for Google's AI Test Kitchen.

In the web app, you will see a text box where you can compose a prompt, anywhere from a few words to a few sentences, describing the kind of music you want to hear. For the best results, Google advises you to "be very descriptive", adding that you should try to include the mood and emotion of the music.

When you're ready, press Enter to start processing. Within about 30 seconds, two audio snippets will be available for you to audition. You then have the option to award a trophy to whichever sample better matches your prompt, which in turn helps Google train the model and improve its output.

What MusicLM Sounds Like

Humans have been making music for at least 40,000 years, and there is still no definitive answer as to whether music came before, after, or at the same time as language. So in some ways, it's not surprising that MusicLM hasn't quite cracked the code on this ancient, universal art.

Google's MusicLM research paper suggested that MusicLM could generate music from captions describing famous artworks, and could follow a sequence of different prompts that smoothly change the genre or mood.

Before getting around to such tall orders, however, we found that MusicLM had several fundamental problems to overcome first.

Difficulty Sticking to Tempo

The most basic job of any musician is simply to play in time; in other words, to stick to the tempo. Surprisingly, that isn't something MusicLM can do reliably.

In fact, when we used the same prompt 10 times, producing 20 tracks, only three were in time. The remaining 17 samples were faster or slower than the specified tempo, which we wrote in beats per minute (BPM), the standard unit for musical tempo.

In this example, we used the prompt "solo classical piano played at 80 beats per minute, peaceful and meditative". On closer listening, the music often sped up or slowed down within the short sample.
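We judged timing by ear, but the idea behind "in time" is simple arithmetic: at 80 BPM, each beat should last 60 / 80 = 0.75 seconds. The minimal Python sketch below is a hypothetical illustration, not the method we used. It assumes you already have beat timestamps in seconds (from tapping along or a beat-tracking tool), and the 5% tolerance is an arbitrary assumption. It converts each beat-to-beat gap into a tempo and flags a clip that drifts away from the target.

```python
# Hypothetical sketch: check whether a clip holds a target tempo.
# Assumes beat timestamps (seconds) are already available; the 5% tolerance
# is an arbitrary choice for illustration.

def local_tempos(beat_times):
    """Convert each gap between consecutive beats into an instantaneous BPM."""
    return [60.0 / (b - a) for a, b in zip(beat_times, beat_times[1:])]

def is_in_time(beat_times, target_bpm, tolerance=0.05):
    """Return True if every beat-to-beat tempo is within tolerance of the target."""
    tempos = local_tempos(beat_times)
    if not tempos:
        return False  # fewer than two beats: nothing to measure
    return all(abs(t - target_bpm) / target_bpm <= tolerance for t in tempos)

# At 80 BPM, one beat lasts 60 / 80 = 0.75 seconds.
steady = [i * 0.75 for i in range(8)]
# The same clip, but the later beats rush: gaps shrink to 0.6 s (about 100 BPM).
drifting = [0.0, 0.75, 1.5, 2.25, 2.85, 3.45, 4.05]

print(is_in_time(steady, 80))    # True
print(is_in_time(drifting, 80))  # False
```

Checking each gap rather than the clip's average tempo matters here: a track that speeds up and then slows down can average out to 80 BPM while still sounding out of time, which is exactly the kind of drift we heard.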

The music also lacked a strong beat and sounded as if someone had hit play midway through the piece. Whether or not this was intentional, it makes it hard to judge whether MusicLM can compose a proper beginning or end to a piece of music, let alone stick to the beat.

Random Instrument Selection

Perhaps MusicLM hadn't yet learned how to play in strict time, so we moved on to another common musical parameter: we wanted to see if it would grant our requests for specific instruments.

We wrote several different prompts that included descriptions like "Solo synthesizer" and "Solo bass guitar". Others were larger ensembles like "String quartet" or "Jazz band". On the whole, it seemed like a 50:50 chance you would get what you asked for.

One theory is that the model associates some instruments with popular musical genres. Take, for example, the prompt "Solo synthesizer, chord progression. Lively and upbeat". Instead of getting a synthesizer sound on its own, MusicLM produced an electronic track complete with drums and bass.

It's possible that the model simply hasn't been trained on enough data to understand a specific request for an instrument.

Vocals Are Out of the Equation

Under the restrictions in place at the time, the model wouldn't produce music containing vocals. Thorny copyright issues and buggy vocals are likely reasons why Google chose to play it safe with this limitation.

But after experimenting with MusicLM for some time, we realized that Google's control over the model's output wasn't exactly ironclad. Oddly, a prompt like "acoustic guitar" could produce a track with ghost-like vocals in the background that sounded muffled and distant.

While this isn't a common occurrence, it does leave you wondering about MusicLM's ability to create convincing vocals in the first place.

With software like VOCALOID and Synthesizer V leading the way in AI-assisted vocal synthesis, omitting vocals from the current model suggests that it may not yet be good enough to compete with existing technology. MusicLM might well have a long way to go before musicians will be singing its praises.

The Future of AI Music Generators

While MusicLM has moved generative AI music technology forward, it needs to head back to school and learn a few more things before it can take on practical work in the music industry.

Before MusicLM, arguably the best-known attempt at generative AI music was a model called Jukebox by OpenAI. It wasn't exactly in a ready-to-use state, and it took a whopping nine hours to render just one minute of music.

For your efforts, you were likely to get back a truly alien-sounding track riddled with audio distortion and artifacts. On the upside, you weren't going to get bored listening to the bizarre creations that Jukebox conjured.

In light of this, MusicLM has made some significant advances toward a user-friendly AI music generator. We could almost forgive the model for its random outputs when we stop to think about how vastly complicated it is to generate music in raw audio form.

After putting the model to work, however, MusicLM feels half-baked compared to what Google described in its initial research paper. AI image generators rarely get a picture of an apple wrong; likewise, an AI music generator should get a few basics right, like tempo and instrumentation.

Google's MusicLM Falls Short of Expectations

With tech companies racing to outdo each other on the AI front, MusicLM feels as if it entered public trials before it was ready. Rather than getting the fundamentals right, the model seems to take a far vaguer, more subjective approach to producing music.

Google may encourage you to be specific with your prompt, but the model can't handle tempo well, and you're not guaranteed to get the instruments you asked for every time. MusicLM may be interesting, and a good demonstration of powerful AI advances, but if music is the end goal, it still has a long way to go.