Web scraping, also known as web data extraction, is an automated method of extracting data or content from web pages.

Web scrapers extract data without human intervention. A scraper accesses a webpage by sending HTTP requests, much like a web browser does. However, instead of displaying the HTML it fetches, it processes that HTML according to your instructions and stores the result.

Web scrapers come in handy for fetching data from websites that do not provide APIs. They are popular in fields like data science, cybersecurity, frontend, and backend development.

Web Scraping in Go

Go has several web scraping packages. Popular ones include goquery, Colly, and ChromeDP.

ChromeDP is a Selenium-like browser automation package. It drives browsers that support the Chrome DevTools Protocol, in Go, without external dependencies.

Colly is a scraping-specific framework that uses goquery under the hood for HTML parsing. But using goquery directly, without Colly's extra layer, is often the faster and simpler option for scraping websites in Go.

What Is goquery?

goquery is a Go library inspired by jQuery, the JavaScript library, and it offers a similar chainable syntax for querying HTML documents. It's built on the net/html package (golang.org/x/net/html), which implements an HTML5-compliant tokenizer and parser. It also uses the Cascadia package, which implements CSS selectors for use with the parser that net/html provides.

Installing goquery

Run the command below in your terminal, from inside your project's Go module (create one with go mod init if you don't have one yet), to install goquery. If you encounter any errors, try updating your Go version.

```sh
go get github.com/PuerkitoBio/goquery
```

The Web Scraping Process

You can divide the overall scraping process into three smaller tasks:

- Making HTTP requests.

- Using selectors and locators to get the required data.

- Saving data in a database or data structures for further processing.

Making HTTP Requests in Go

You can send HTTP requests using the net/http package, which is part of the Go standard library.

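```go
package main

import (
	"fmt"
	"log"
	"net/http"
)

func main() {
	webURL := "https://news.ycombinator.com/"

	// Send a GET request to the page you want to scrape.
	response, err := http.Get(webURL)
	if err != nil {
		log.Fatalln(err)
	}
	// Close the body when done to release the underlying connection.
	defer response.Body.Close()

	// 200 OK means the page was fetched successfully.
	if response.StatusCode == http.StatusOK {
		fmt.Println("We can scrape this")
	} else {
		log.Fatalln("Do not scrape this")
	}
}
```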

http.Get returns a pointer to an http.Response and an error. The response.StatusCode field holds the HTTP status code that the server returned.

If the status code is 200 (http.StatusOK), the request succeeded and you can proceed to scrape the page.

Getting the Required Data Using goquery

Getting the Website HTML

First, parse the raw HTML in the response body (response.Body) into a document object that represents the whole webpage:

```go
// Parse the response body into a goquery document.
document, err := goquery.NewDocumentFromReader(response.Body)
if err != nil {
	log.Fatalln(err)
}
```

You can now use the document object to access the structure and content the webpage contains.

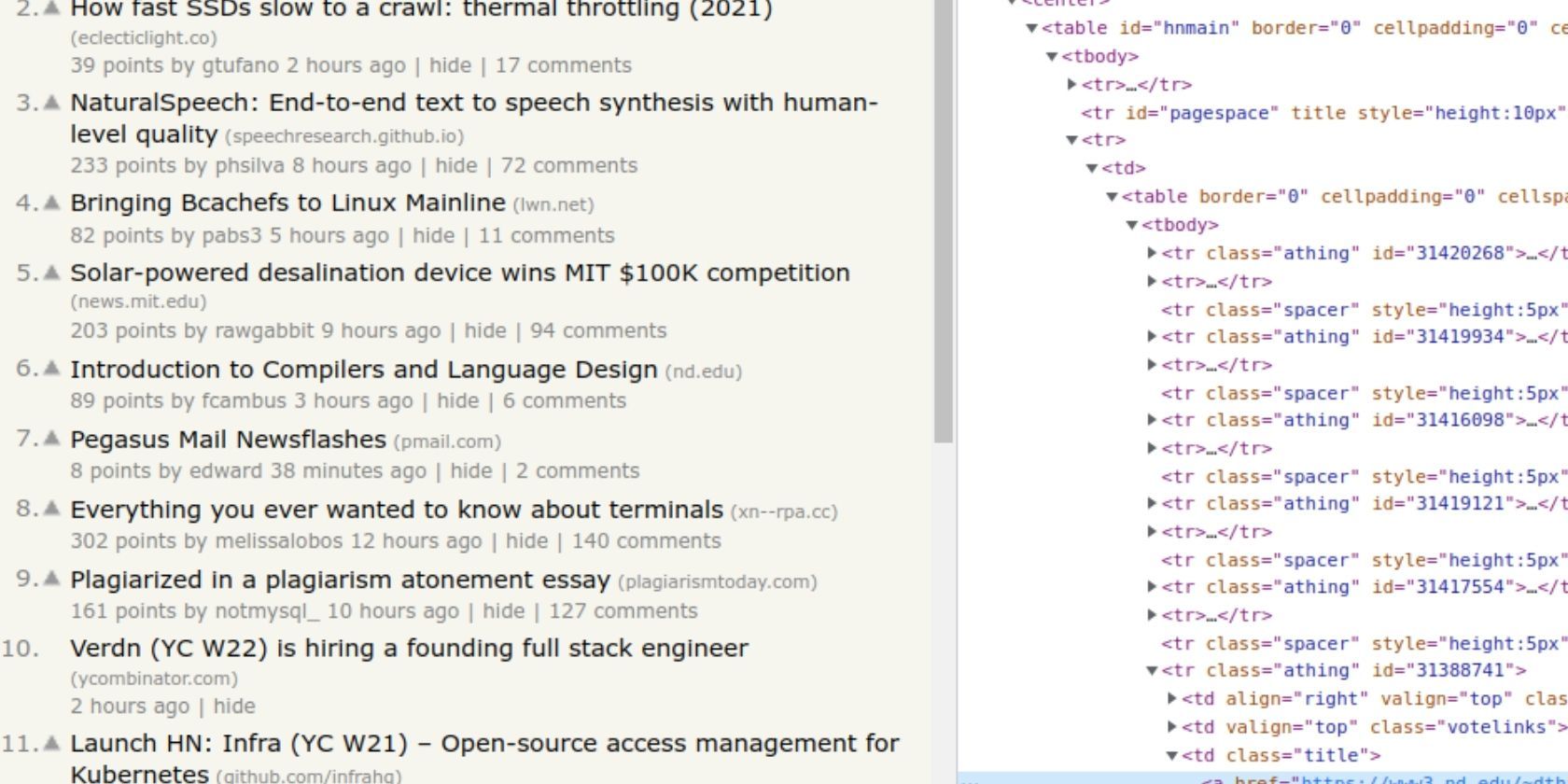

Selecting Required Elements From the HTML

Inspect the webpage (for example, with your browser's developer tools) to check the structure of the data you need to extract. This will help you construct a selector to access it.

With a selector in hand, you can extract the HTML you need by calling the Find method of the document object.

The Find method takes a CSS selector to locate the element that contains the data you require:

```go
document.Find("tr.athing")
```

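The code above returns a *goquery.Selection containing every element that matches the selector, or an empty selection if there wasn't a match at all. Calling a method such as Text on it combines the text of all matched elements; call First on the selection if you only want the first match.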

Selecting Multiple Elements From HTML

Most of the time, you’ll want to fetch all the HTML elements that match your selector.

To work with each matching element individually, call the Each method on the selection that Find returns. Each takes a function with two parameters: the index of the current element and a selector of type *goquery.Selection that wraps that single element.

```go
document.Find("tr.athing").Each(func(index int, selector *goquery.Selection) {
	// Process selector here; it wraps one matching <tr> element.
})
```

In the function body, you can select the specific data you want from the HTML. In this case, you need the links and titles of every post the page lists. Use the Find method of the selector parameter to narrow down the set of elements and extract text or attribute values.

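```go
document.Find("tr.athing").Each(func(index int, selector *goquery.Selection) {
	// Text returns the combined text of the matched table cell(s).
	title := selector.Find("td.title").Text()

	// Attr returns the attribute's value and whether it exists.
	// Class names such as "titlelink" depend on the site's current markup.
	link, found := selector.Find("a.titlelink").Attr("href")
	if found {
		fmt.Println(title, link)
	}
})
```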

The code above calls the Text method on the result of selector.Find to extract the contents of a table cell. To select attributes, such as link and image URLs, use the Attr method. It returns the attribute's value for the first element in the selection, along with a boolean (found above) that reports whether the attribute exists at all.

The same approach works for selecting any other element or attribute on a web page.

Find accepts any CSS selector that the Cascadia package supports, and goquery's Selection type offers many more methods for traversing and filtering elements. You can explore these in the goquery documentation.

Saving the Scraped Data

The link and title values are plain strings that you can assign to variables. In real projects, you will usually save them to a database or a data structure for further processing. Often, a simple custom struct will suffice.

Create a struct type with link and title fields, plus a slice to hold values of that type:

```go
// Information holds one scraped post.
type Information struct {
	link  string
	title string
}

// info collects every scraped post.
info := make([]Information, 0)
```

Next, populate the slice inside the function you pass to the Each method. For every result, create a new Information value and append it to info:

```go
info = append(info, Information{
	title: title,
	link:  link,
})
```

Each call appends one Information value (the struct) to info (the slice), and you can then process the collected data however you like.
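Once the results are in a slice, ordinary Go code can clean and reorder them. The sketch below is one possibility, assuming you want to drop entries without a link and sort the rest by title; withLinks and sortByTitle are hypothetical helper names, and the sample data is invented.

```go
package main

import (
	"fmt"
	"sort"
	"strings"
)

// Information mirrors the struct defined earlier in this article.
type Information struct {
	link  string
	title string
}

// withLinks returns only the entries that have a non-empty link.
func withLinks(info []Information) []Information {
	kept := make([]Information, 0, len(info))
	for _, item := range info {
		if item.link != "" {
			kept = append(kept, item)
		}
	}
	return kept
}

// sortByTitle sorts entries alphabetically by title, ignoring case.
func sortByTitle(info []Information) {
	sort.Slice(info, func(i, j int) bool {
		return strings.ToLower(info[i].title) < strings.ToLower(info[j].title)
	})
}

func main() {
	// Hypothetical sample data standing in for scraped results.
	info := []Information{
		{title: "beta post", link: "https://example.com/b"},
		{title: "Post without a link", link: ""},
		{title: "Alpha post", link: "https://example.com/a"},
	}

	cleaned := withLinks(info)
	sortByTitle(cleaned)

	for _, item := range cleaned {
		fmt.Printf("%s -> %s\n", item.title, item.link)
	}
}
```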

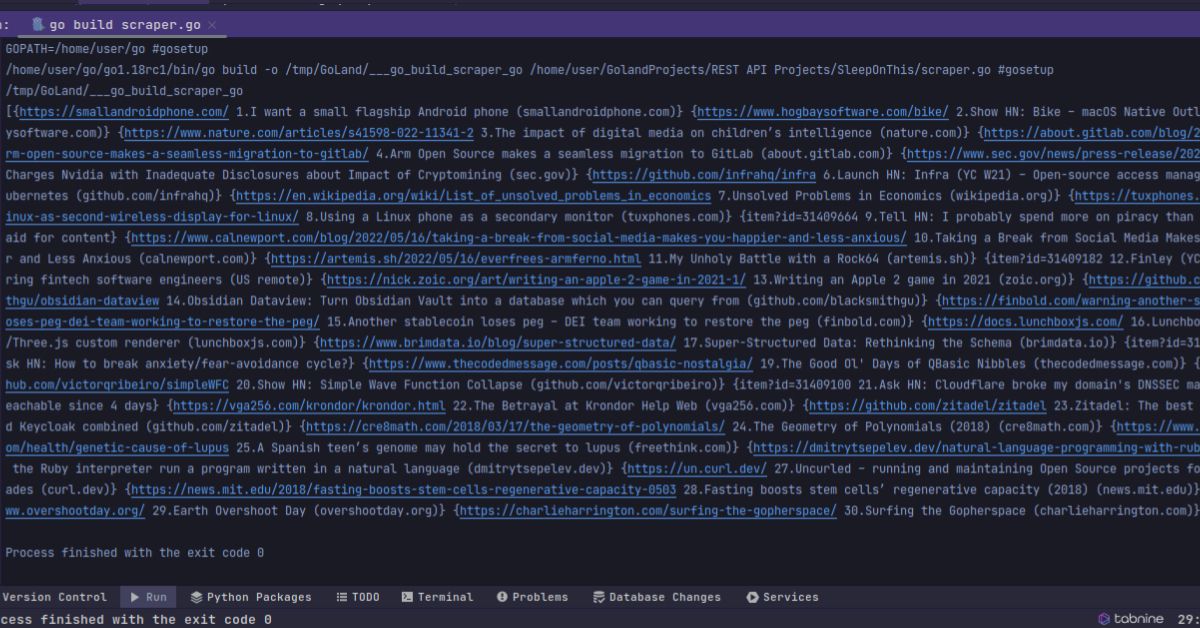

Printing the slice shows that you have successfully scraped the website and populated the slice.

```go
fmt.Println(info)
```

It is a good idea to cache the scraped data locally so that you do not hit the website's server more often than necessary. This reduces traffic and also speeds up your app, since reading local data is faster than making requests and scraping pages again.

There are many database packages in Go that you could use to save the data. The standard library's database/sql package provides a generic interface to SQL databases, which you use together with a driver for your specific database. There are also NoSQL clients like the MongoDB Go driver, and drivers for serverless databases like FaunaDB.

The Essence of Web Scraping in Go

If you’re trying to scrape data from a website, goquery is an excellent place to begin. But it’s a powerful package that can do more than just web scraping. You can find out about more of its functionality in the official project documentation.

Web scraping is an important skill across various technology fields and it will come in handy during many of your projects.