When working with large amounts of media and documents, it's quite common to accumulate multiple copies of the same file on your computer. The result is a cluttered storage space filled with redundant files, which you then have to check for and clean up periodically.

To this end, you'll find various programs to identify and delete duplicate files. And fdupes happens to be one such program for Linux. So follow along as we discuss fdupes and guide you through the steps to find and delete duplicate files on Linux.

What Is fdupes?

Fdupes is a CLI-based program for finding and deleting duplicate files on Linux. It's released under the MIT License on GitHub.

In its simplest form, the program first compares file sizes and MD5 signatures to shortlist likely matches in the specified directory. It then runs a byte-by-byte comparison on those candidates to confirm they are truly identical, so files that merely share a signature are not reported as duplicates.
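The staged comparison can be sketched with standard tools. This is only an illustration of the idea, not fdupes's own code: the helper name same_content and the demo files are made up for this example, and it assumes wc, md5sum, and cmp are available.

```shell
#!/usr/bin/env bash
# Illustrative only: mimics the size -> MD5 -> byte-by-byte idea for two files.
# same_content is a hypothetical helper, not part of fdupes.
same_content() {
    local a="$1" b="$2"
    # Step 1: files of different sizes can never be duplicates.
    [ "$(wc -c < "$a")" -eq "$(wc -c < "$b")" ] || return 1
    # Step 2: compare MD5 signatures to rule out most non-matches cheaply.
    [ "$(md5sum < "$a")" = "$(md5sum < "$b")" ] || return 1
    # Step 3: confirm the match with a byte-by-byte comparison.
    cmp -s "$a" "$b"
}

# Throwaway demo files (hypothetical names).
demo_dir="$(mktemp -d)"
printf 'hello\n' > "$demo_dir/a.txt"
printf 'hello\n' > "$demo_dir/b.txt"
printf 'world\n' > "$demo_dir/c.txt"

if same_content "$demo_dir/a.txt" "$demo_dir/b.txt"; then ab=duplicate; else ab=different; fi
if same_content "$demo_dir/a.txt" "$demo_dir/c.txt"; then ac=duplicate; else ac=different; fi
echo "a.txt vs b.txt: $ab"
echo "a.txt vs c.txt: $ac"
rm -rf "$demo_dir"
```

The cheap checks come first so that the expensive byte-by-byte pass only runs on files that already look alike.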

Once fdupes identifies duplicate files, it gives you the option to delete them. Depending on the version your distro packages, it may also be able to replace them with hard links (links to the original files). So depending on your requirements, you can proceed with an operation accordingly.

How to Install fdupes on Linux

Fdupes is available in the repositories of most major Linux distros, including Ubuntu, Arch, and Fedora. Based on the distro you're running on your computer, issue one of the commands given below.

On Ubuntu or Debian-based systems:

sudo apt install fdupes

To install fdupes on Fedora/CentOS and other RHEL-based distros:

sudo dnf install fdupes

On Arch Linux and Manjaro:

sudo pacman -S fdupes
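If you're not sure which of these applies to your machine, a small script can check which package manager is present and print the matching command. This is a rough sketch: install_cmd_for is a hypothetical helper, it only knows the three package managers listed above, and it prints the command rather than running it.

```shell
#!/usr/bin/env bash
# install_cmd_for is a hypothetical helper: it maps a package manager name
# to the install command listed above.
install_cmd_for() {
    case "$1" in
        apt)    echo "sudo apt install fdupes" ;;
        dnf)    echo "sudo dnf install fdupes" ;;
        pacman) echo "sudo pacman -S fdupes" ;;
        *)      return 1 ;;
    esac
}

# Report the command for the first supported package manager found.
suggestion="no supported package manager found"
for pm in apt dnf pacman; do
    if command -v "$pm" >/dev/null 2>&1; then
        suggestion="$(install_cmd_for "$pm")"
        break
    fi
done
echo "$suggestion"
```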

How to Use fdupes

Once you've installed the program on your computer, follow the steps below to find and remove duplicate files.

Finding Duplicate Files With fdupes

First, let's start by searching for all the duplicate files in a directory. The basic syntax for this is:

fdupes path/to/directory

For example, if you want to find duplicate files in the Documents directory, you'd run:

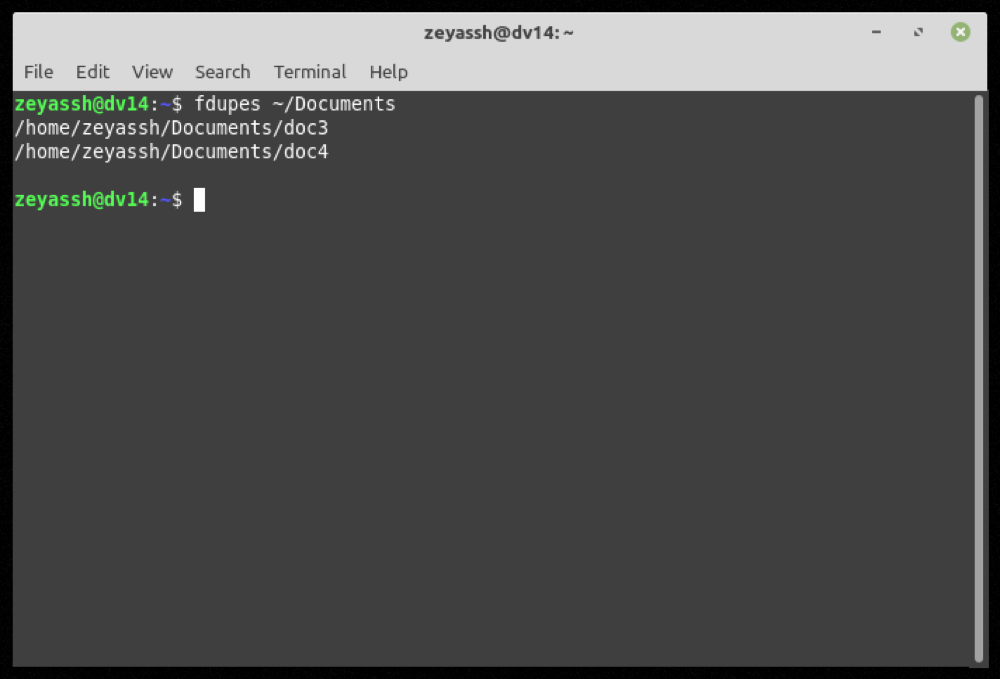

fdupes ~/Documents

Output:

If fdupes finds duplicate files in the specified directory, it'll return a list of all redundant files grouped by set, and you can then perform further operations on them as necessary.

However, if the directory you've specified contains subdirectories, the above command won't identify duplicates inside them. In such situations, you need to carry out a recursive search to find the duplicate files inside the subdirectories as well.

To perform a recursive search in fdupes, use the -r flag:

fdupes -r path/to/directory

For example:

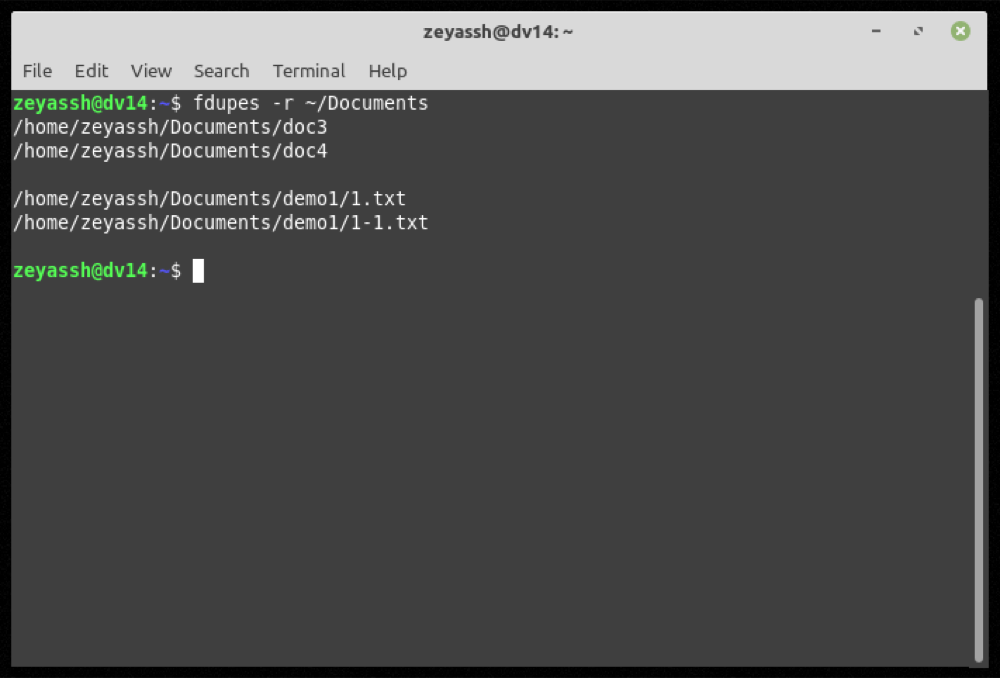

fdupes -r ~/Documents

Output:

While the above two commands can easily find duplicate files within the specified directory (and its subdirectories), their output includes zero-length (or empty) duplicate files too.

Although this functionality might still come in handy when you've got too many empty duplicate files on your system, it can introduce confusion when you only want to find non-empty duplicates in a directory.

Fortunately, fdupes allows you to exclude zero-length files from its search results using the -n option.

Note: You can exclude empty (zero-length) files in both normal as well as recursive searches.
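To get a feel for how many zero-length files a directory holds, and therefore what -n will leave out, find can list them. The sketch below runs against a throwaway directory with invented file names; it assumes a find that supports -empty and -maxdepth, as GNU find does.

```shell
#!/usr/bin/env bash
# Build a small throwaway tree (hypothetical file names).
demo_dir="$(mktemp -d)"
mkdir "$demo_dir/sub"
: > "$demo_dir/empty1.txt"
: > "$demo_dir/sub/empty2.txt"
printf 'data\n' > "$demo_dir/notes.txt"

# Zero-length files in the top level only (the scope of a plain fdupes search).
top_level="$(find "$demo_dir" -maxdepth 1 -type f -empty | wc -l)"
# Zero-length files in the whole tree (the scope of fdupes -r).
whole_tree="$(find "$demo_dir" -type f -empty | wc -l)"
echo "top level: $top_level, whole tree: $whole_tree"
rm -rf "$demo_dir"
```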

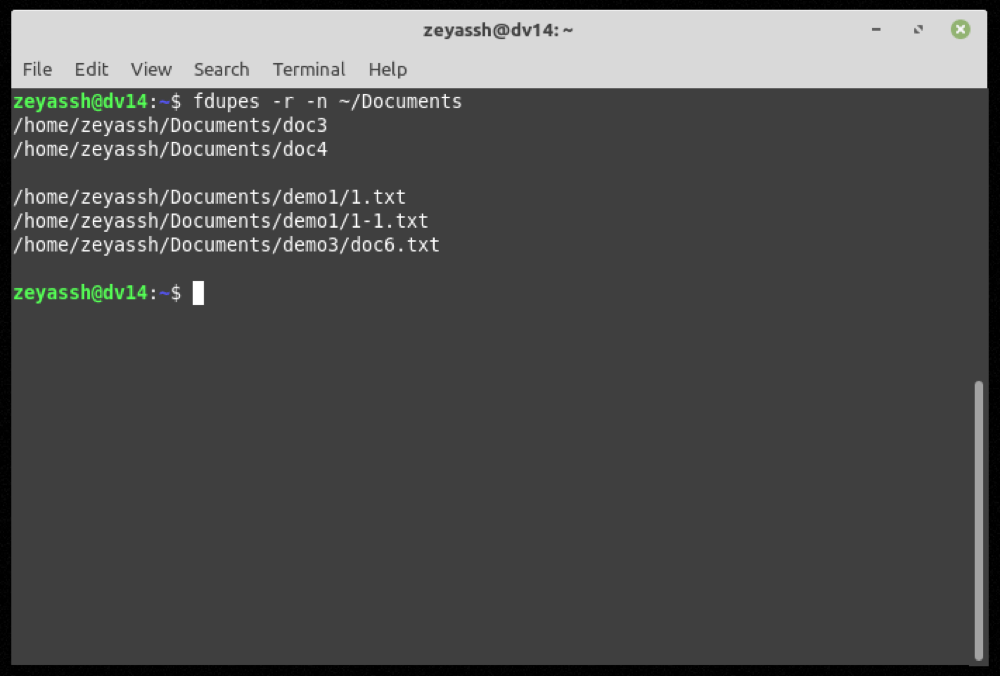

To search only for non-empty duplicate files in the Documents directory:

fdupes -n ~/Documents

Output:

If you're dealing with multiple sets of duplicate files, it's wise to output the results to a text file for future reference.

To do this, run:

fdupes path/to/directory > file_name.txt

...where path/to/directory is the directory in which you want to perform the search.

To search for duplicate files in the Documents directory and then send the output to a file:

fdupes ~/Documents > output.txt
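Once the results are in a file, ordinary text tools can work on them. The sketch below assumes the default fdupes output layout, in which each set of duplicates is a block of paths followed by a blank line. The sample file contents and the count_sets helper are made up for illustration.

```shell
#!/usr/bin/env bash
# count_sets is a hypothetical helper: it counts blank-line-separated blocks.
count_sets() {
    awk 'BEGIN { RS = ""; n = 0 } { n++ } END { print n }' "$1"
}

# Hand-written stand-in for a file produced by:
#   fdupes path/to/directory > output.txt
sample="$(mktemp)"
printf '%s\n' \
    '/home/user/Documents/report.pdf' \
    '/home/user/Documents/report (copy).pdf' \
    '' \
    '/home/user/Documents/a.jpg' \
    '/home/user/Documents/b.jpg' \
    '/home/user/Documents/c.jpg' \
    '' > "$sample"

sets="$(count_sets "$sample")"
echo "duplicate sets: $sets"
rm -f "$sample"
```

Setting RS to an empty string puts awk in paragraph mode, so each set counts as one record no matter how many files it contains.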

Last but not least, if you only want a summary of the duplicates in a directory (how many duplicate files there are, how many sets they form, and how much space they occupy), use the -m flag:

fdupes -m path/to/directory

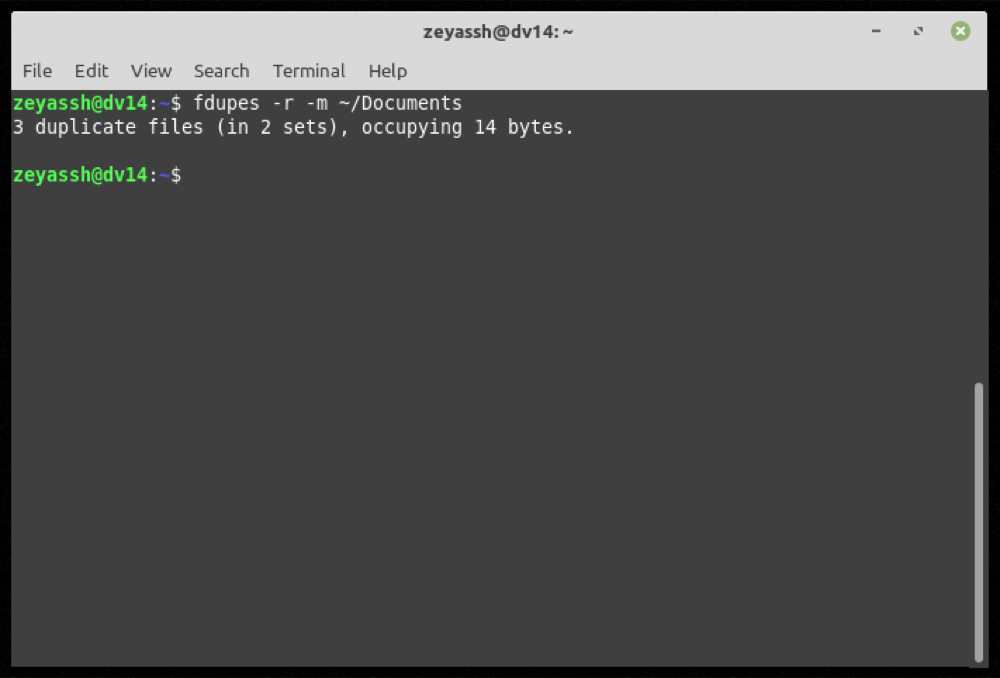

To get duplicate file information for the Documents directory:

fdupes -m ~/Documents

Output:

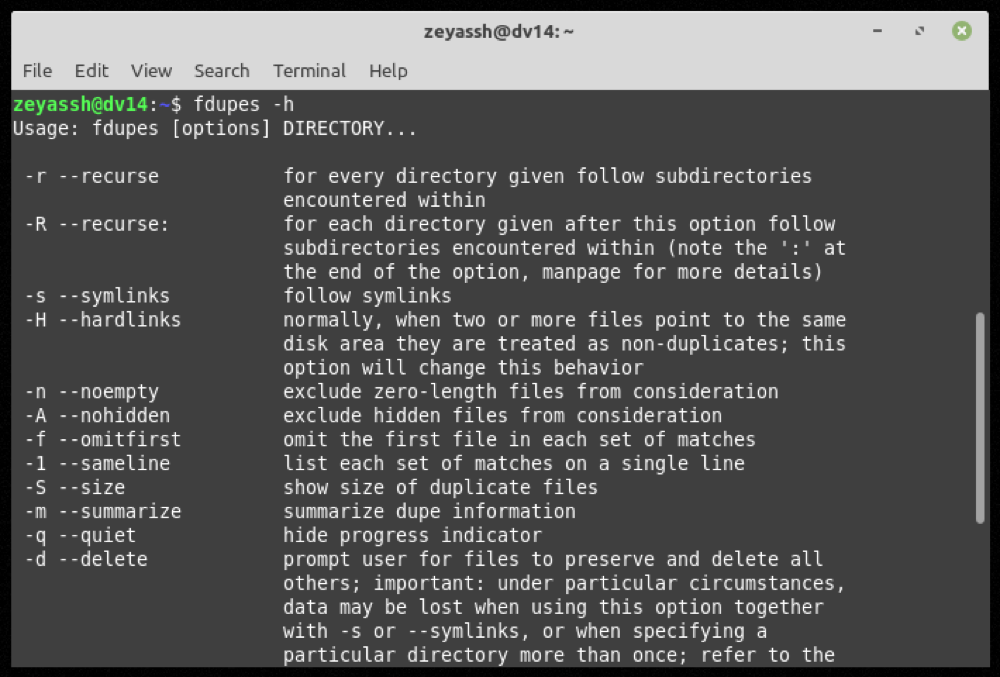

At any time during your use of fdupes, if you want help with a command or function, use the -h option to get command-line help:

fdupes -h

Deleting Duplicate Files in Linux With fdupes

After you've identified the duplicate files in a directory, you can proceed with deleting them from your system to clear clutter and free up storage space.

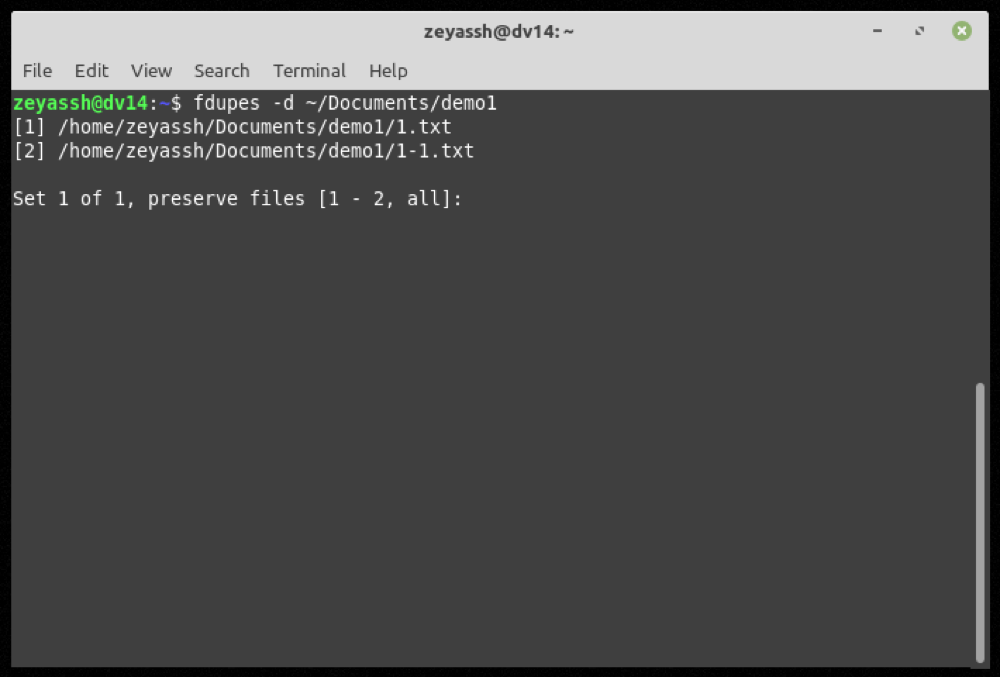

To delete duplicate files, specify the -d flag with the command and hit Enter:

fdupes -d path/to/directory

To remove duplicate files in the Downloads folder:

fdupes -d ~/Downloads

Fdupes will now present you with a list of all the duplicate files in that directory and will give you the option to preserve the ones you want to keep on your computer.

For instance, if you want to preserve the first file in set 1, you'd enter 1 at the prompt for that set and hit Enter.

Moreover, if required, you can also preserve multiple files in a set of returned duplicates. For this, you need to enter the numbers corresponding to those files in a comma-separated list and press Enter.

For example, if you want to preserve files 1, 3, and 5, you need to enter:

1,3,5

If you want to preserve the first file in every set of duplicates and skip the prompt altogether, include the -N switch alongside -d. Be careful with this combination, since it deletes the remaining files without asking for confirmation:

fdupes -d -N path/to/directory

For example:

fdupes -d -N ~/Documents
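Because -d -N deletes without asking, it can be worth previewing the result first. One way, sketched below, is the -f (--omitfirst) option, which lists every file in a set except the first, so the listing should correspond to what -d -N would remove from the same directory. The throwaway directory and file names are invented for the demo, and the script skips the fdupes call if the program isn't installed.

```shell
#!/usr/bin/env bash
# Build a throwaway directory with one pair of duplicates (hypothetical names).
demo_dir="$(mktemp -d)"
printf 'same\n' > "$demo_dir/a.txt"
printf 'same\n' > "$demo_dir/b.txt"
printf 'other\n' > "$demo_dir/c.txt"

if command -v fdupes >/dev/null 2>&1; then
    # -f omits the first file of each set, leaving only the deletion candidates.
    # grep drops the blank lines that separate sets.
    candidates="$(fdupes -f "$demo_dir" | grep -v '^$')"
    echo "Would be deleted by -d -N:"
    echo "$candidates"
    preview_status=ran
else
    echo "fdupes is not installed; skipping preview"
    preview_status=skipped
fi
rm -rf "$demo_dir"
```

Nothing is deleted here, since -d is never passed. The same -f listing can be run on a real directory before committing to -d -N.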

Successfully Deleting Duplicate Files in Linux

Organizing files is a tedious task in and of itself. Add to it the trouble duplicate files cause, and you're looking at a few hours of time and effort wasted on organizing your disarrayed storage.

But thanks to utilities like fdupes, it's much easier and more efficient to identify duplicate files and delete them. And the guide above should assist you with these operations on your Linux machine.

Much like duplicate files, duplicate words and repeated lines in a file can also be frustrating to deal with and require advanced tools to be removed. If you face such issues, too, you can use uniq to remove duplicate lines from a text file.
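One thing to keep in mind is that uniq only collapses repeated lines that sit next to each other, so unsorted input usually needs to go through sort first. A minimal sketch, using an invented sample file:

```shell
#!/usr/bin/env bash
# Sample file with non-adjacent repeats (contents are made up for the demo).
sample="$(mktemp)"
printf '%s\n' apple banana apple banana cherry > "$sample"

# uniq alone removes nothing here, because no repeated lines are adjacent.
plain="$(uniq "$sample" | wc -l)"
# Sorting first makes identical lines adjacent, so uniq can collapse them.
sorted="$(sort "$sample" | uniq | wc -l)"
echo "uniq alone: $plain lines, sort | uniq: $sorted lines"
rm -f "$sample"
```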