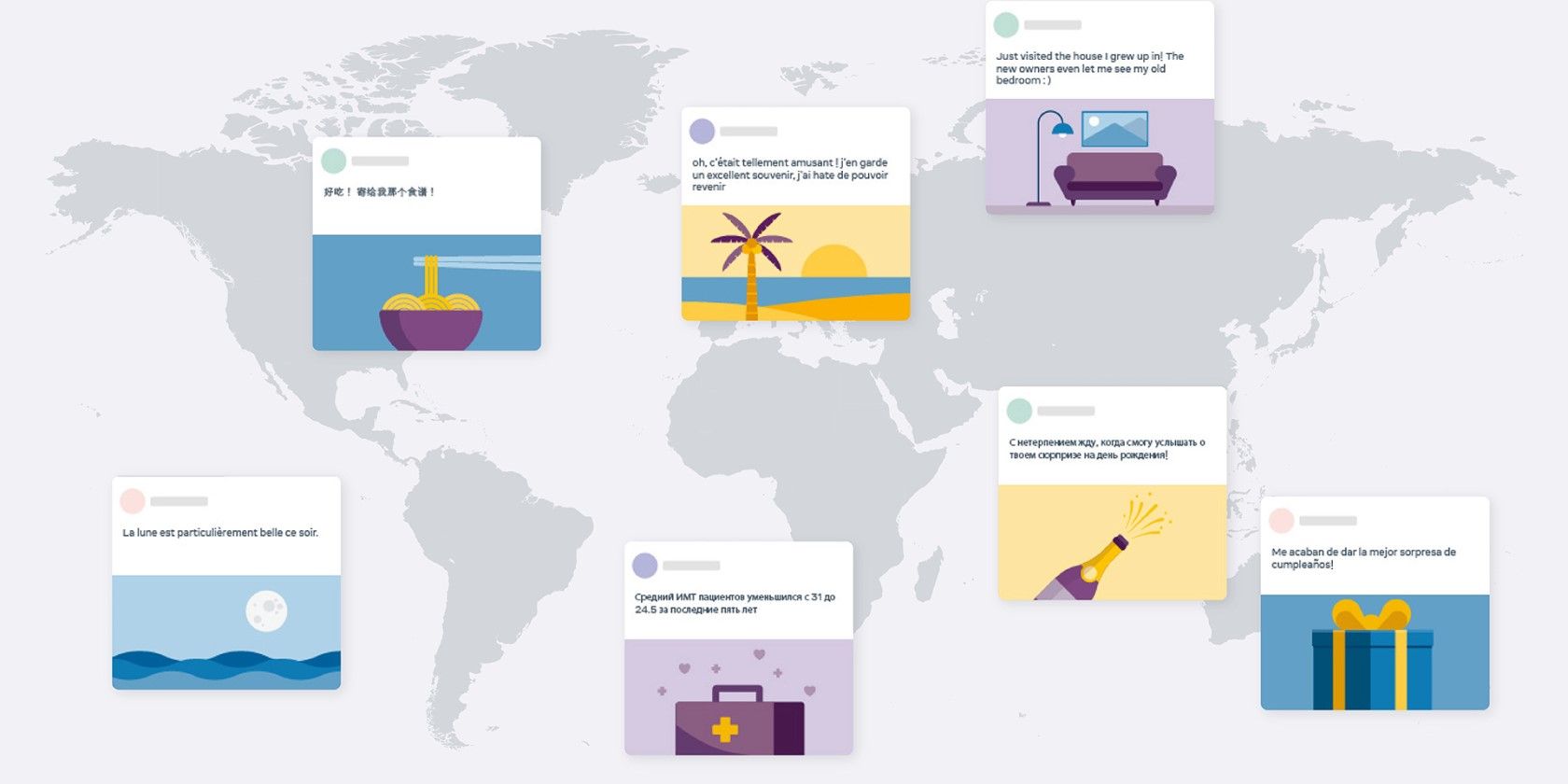

Facebook has unveiled a new open-source AI model that can translate between any pair of 100 languages. The model doesn't need to route text through English first, which Facebook says makes for more efficient and accurate translation.

Introducing a New Way to Translate Text

In an About Facebook blog post, the platform detailed its new multilingual machine translation (MMT) model, also known as M2M-100. Impressively enough, this open-source machine learning model "can translate between any pair of 100 languages without relying on English data."

While this is still a research project, it shows a lot of promise. Angela Fan, a research assistant at Facebook, noted that "typical" machine translation systems rely on a separate model for every language, which makes them inefficient at the scale of a platform like Facebook.

Even more advanced multilingual models fall short, because they use English as an intermediary between languages. The system must first translate the source text into English, and then translate the English into the target language.

English-reliant models don't produce the best translations. Fan notes that by taking English out of the picture, Facebook's MMT system can produce more accurate translations, stating:

"When translating, say, Chinese to French, most English-centric multilingual models train on Chinese to English and English to French, because English training data is the most widely available. Our model directly trains on Chinese to French data to better preserve meaning."
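To see why a detour through English can lose meaning, here is a minimal Python sketch. It is not Facebook's model; the three lookup tables and both function names are invented for illustration. Two different Chinese words collapse into the same ambiguous English word, so the second hop can't tell them apart.

```python
# Toy illustration only: the word tables below are invented for this example
# and are not taken from M2M-100 or any real translation system.

# Both Chinese words map to the ambiguous English word "bank".
ZH_TO_EN = {"银行": "bank", "河岸": "bank"}
EN_TO_FR = {"bank": "banque"}

# A direct table can keep the two meanings apart.
ZH_TO_FR = {"银行": "banque", "河岸": "rive"}


def pivot_translate(word: str) -> str:
    """Translate Chinese -> English -> French, using English as a middleman."""
    english = ZH_TO_EN[word]
    return EN_TO_FR[english]


def direct_translate(word: str) -> str:
    """Translate Chinese -> French in a single step."""
    return ZH_TO_FR[word]


if __name__ == "__main__":
    for word in ("银行", "河岸"):
        print(word, pivot_translate(word), direct_translate(word))
```

In this toy setup, the word for a riverbank comes out as "banque" when it passes through English, while the direct table returns "rive". A real neural model works on whole sentences rather than word lookups, but this is the kind of error that Fan's quote suggests direct training is meant to avoid.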

So instead of using English as a bridge, Facebook's MMT model can translate back and forth between 100 different languages. According to Fan, Facebook has built "the most diverse many-to-many MMT data set to date," which consists of 7.5 billion sentence pairs for 100 languages.

To accomplish this feat, the research team mined language translation data on the web, focusing first on languages "with the most translation requests." The researchers then classified those languages into 14 groups based on shared characteristics.

From there, the researchers established bridge languages for each group and mined training data for all possible combinations of those bridge languages. The result is the 7.5 billion parallel sentences mentioned above, spanning 2,200 translation directions.
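Some quick arithmetic puts the 2,200 figure in context: 100 languages give 100 × 99 = 9,900 possible translation directions, so mining every one of them would be a much bigger job. The Python sketch below shows how grouping plus bridge languages can shrink that number. The groups, the bridge choices, and the rule of mining every pair inside a group are assumptions made for this example, not Facebook's published configuration.

```python
from itertools import permutations


def all_directions(n_languages: int) -> int:
    """Number of ordered (source, target) pairs among n languages."""
    return n_languages * (n_languages - 1)


def mined_directions(groups: dict, bridges: dict) -> set:
    """Return the directions to mine under an assumed bridge-language scheme.

    Assumption for this sketch: mine every pair inside a group, plus every
    pair among the bridge languages of all groups.
    """
    directions = set()
    for members in groups.values():
        directions.update(permutations(members, 2))
    all_bridges = [lang for name in groups for lang in bridges[name]]
    directions.update(permutations(all_bridges, 2))
    return directions


# Hypothetical groups and bridge languages, chosen only for illustration.
groups = {
    "indic": ["hi", "bn", "ta", "mr"],
    "romance": ["fr", "es", "it", "pt"],
}
bridges = {"indic": ["hi", "bn"], "romance": ["fr", "es"]}

if __name__ == "__main__":
    print(all_directions(100))                    # every direction for 100 languages
    print(all_directions(8))                      # every direction for the 8 toy languages
    print(len(mined_directions(groups, bridges))) # directions kept by the toy scheme
```

With these eight toy languages, the scheme keeps 32 of the 56 possible directions. A pair such as Marathi to Portuguese is never mined directly, but the two groups are still connected through their bridge languages.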

For less widely used languages with little existing translation data, Facebook used back-translation, in which a model translates text that exists in only one language to create synthetic sentence pairs for training.
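As a rough sketch of how back-translation works in general: start with real sentences in the target language, run them through a model that translates in the reverse direction, and pair each synthetic result with the real sentence it came from. The Python below uses a toy word-lookup function as a stand-in for the reverse model. That function, its vocabulary, and the function names are all invented for this example.

```python
from typing import Callable, List, Tuple


def back_translate(
    monolingual_target: List[str],
    reverse_model: Callable[[str], str],
) -> List[Tuple[str, str]]:
    """Build synthetic (source, target) training pairs.

    Each real target-language sentence is translated back into the source
    language by reverse_model; the synthetic source is paired with the
    real target sentence.
    """
    return [(reverse_model(sentence), sentence) for sentence in monolingual_target]


# Stand-in for a trained French -> English model (hypothetical toy lookup).
FR_TO_EN = {"bonjour": "hello", "monde": "world"}


def toy_reverse_model(sentence: str) -> str:
    """Translate word by word, leaving unknown words unchanged."""
    return " ".join(FR_TO_EN.get(word, word) for word in sentence.split())


pairs = back_translate(["bonjour monde"], toy_reverse_model)

if __name__ == "__main__":
    print(pairs)
```

The resulting pairs could then be added to the training data for an English-to-French model. The target side is always genuine text, so only the source side carries the reverse model's mistakes.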

This entire process is bringing the Facebook AI team closer to their goal of creating a "single model that supports all languages, dialects, and modalities."

Facebook Gets Closer to Providing Better Translations

Facebook already performs 20 billion translations every day on its News Feed, and the new model is intended to make that process more efficient. Although the model hasn't been implemented yet, it could come in handy for international Facebook users, especially those who need translations between languages other than English.