If you’ve been following Nvidia and AMD, you’ve probably seen the specifications that both companies like to advertise for their GPUs. For instance, Nvidia emphasizes CUDA core counts to differentiate its cards from AMD’s, while AMD does the same with its Compute Units.

But what do these terms actually mean? Is a CUDA core the same thing as a Compute Unit? If not, then what’s the difference?

Let’s answer these questions and see what makes an AMD GPU different from an Nvidia one.

General Architecture of a GPU

All GPUs, whether from AMD, Nvidia, or Intel, work the same way in general. They have the same key components and the overall layout of those components is similar at a higher level.

So, from a top-down perspective, all GPUs look much alike.

When we look at the specific, proprietary components that each manufacturer packs into their GPU, the differences start to emerge. For instance, Nvidia builds Tensor cores into their GPUs, whereas AMD GPUs do not have Tensor cores.

Similarly, AMD uses components like the Infinity Cache, which Nvidia GPUs don’t have.

So, to understand the difference between Compute Units (CUs) and CUDA cores, we have to look at the overall architecture of a GPU first. Once we see how a GPU works, the difference between the two becomes clear.

How Does a GPU Work?

The first thing to understand is that a GPU works on thousands of operations at the same time. To do that, it needs lots of small cores that run in parallel.

These small GPU cores are different from big CPU cores, each of which is built to run a single, complex stream of instructions as fast as possible.

For instance, an Nvidia RTX 3090 has 10,496 CUDA cores. On the other hand, the top-of-the-line AMD Threadripper 3990X has only 64 cores.

So, we can’t compare GPU cores to CPU cores. There are many differences between a CPU and a GPU because engineers have designed them to perform different tasks.

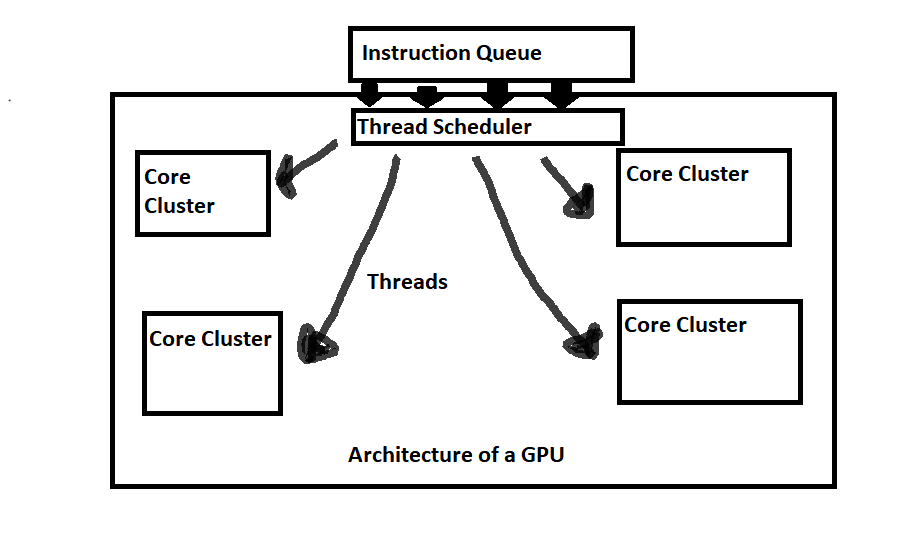

Furthermore, unlike an average CPU, all of the GPU cores are arranged in clusters or groups.

Finally, a cluster of cores on a GPU has other hardware components like texture processing cores, floating-point units, and caches to help process millions of instructions at the same time. This parallelism defines the architecture of a GPU. From loading an instruction to processing it, a GPU does everything according to the principles of parallel processing.

- First, the GPU receives an instruction to process from a queue of instructions. These instructions are overwhelmingly vector-related.

- Next, a thread scheduler passes these instructions on to individual core clusters.

- After receiving the instructions, a scheduler built into each core cluster assigns them to cores or processing elements.

- Finally, different core clusters process different instructions in parallel, and the results are displayed on the screen. So, all the graphics that you see on screen, a video game, for example, are just a collection of millions of processed vectors.

In short, a GPU has thousands of processing elements, which we call “cores,” arranged in clusters. Schedulers assign work to these clusters to achieve parallelism.
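The steps above can be sketched as a toy simulation. This is only a rough model under stated assumptions: the `schedule` function, the round-robin policy, and the instruction names are all made up for illustration, and real GPU schedulers are far more sophisticated.

```python
from collections import deque


def schedule(instructions, num_clusters, cores_per_cluster):
    """Toy two-level scheduler (hypothetical, round-robin at both levels).

    Returns a dict mapping (cluster, core) -> list of instructions.
    """
    queue = deque(instructions)

    # Level 1: the top-level scheduler hands work to core clusters.
    cluster_queues = [[] for _ in range(num_clusters)]
    i = 0
    while queue:
        cluster_queues[i % num_clusters].append(queue.popleft())
        i += 1

    # Level 2: each cluster's own scheduler hands work to its cores.
    assignment = {}
    for cluster, work in enumerate(cluster_queues):
        for j, instr in enumerate(work):
            core = j % cores_per_cluster
            assignment.setdefault((cluster, core), []).append(instr)
    return assignment


work = [f"vec{i}" for i in range(8)]
plan = schedule(work, num_clusters=2, cores_per_cluster=2)
for (cluster, core), instrs in sorted(plan.items()):
    print(f"cluster {cluster}, core {core}: {instrs}")
```

On real hardware, every (cluster, core) pair in `plan` would be working at the same moment, which is where the parallelism comes from.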

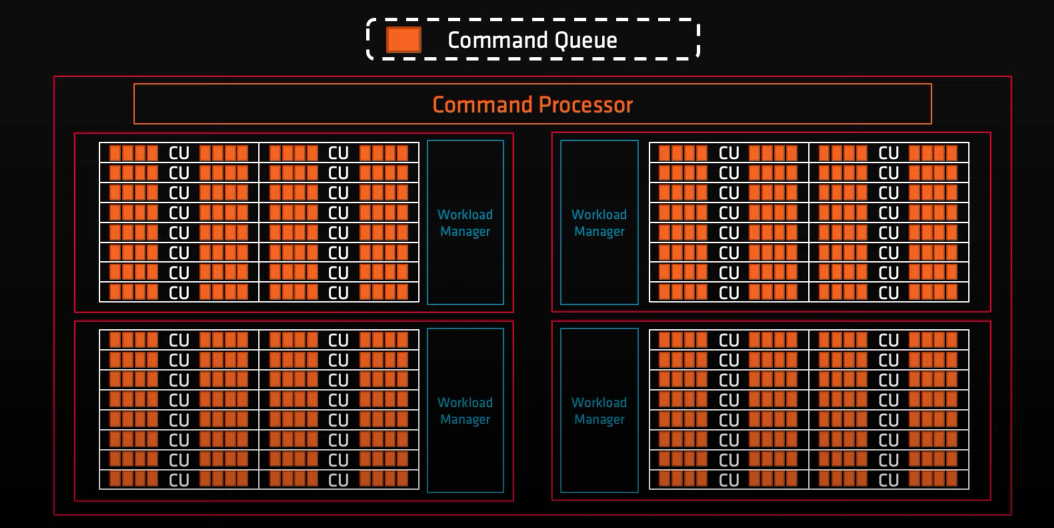

What Are Compute Units?

As seen in the previous section, every GPU has clusters of cores containing processing elements. AMD calls these core clusters “Compute Units.”

www.youtube.com/watch?v=uu-3aEyesWQ&t=202s

A Compute Unit is a collection of processing resources like parallel Arithmetic Logic Units (ALUs), caches, floating-point units or vector processors, registers, and some memory to store thread information.

To keep it simple, AMD mostly advertises the number of Compute Units in its GPUs rather than detailing the underlying components.

So, whenever you see the number of Compute Units, think of them as a group of processing elements and all of the related components.
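One way to picture this is as a small data model. The sketch below is purely illustrative: the class names, the fields, and the 4-unit GPU with 64 processing elements per unit are assumptions made for the example, not the layout of any real card.

```python
from dataclasses import dataclass, field


@dataclass
class ComputeUnit:
    """Illustrative model of one core cluster (heavily simplified)."""
    processing_elements: int  # ALUs / floating-point units in this cluster
    # Caches, registers, and the cluster scheduler also live here;
    # they are left out to keep the sketch short.


@dataclass
class Gpu:
    name: str
    compute_units: list = field(default_factory=list)

    def total_processing_elements(self) -> int:
        # The advertised CU count hides this per-cluster detail.
        return sum(cu.processing_elements for cu in self.compute_units)


# Hypothetical GPU: 4 Compute Units with 64 processing elements each.
toy_gpu = Gpu("Toy GPU", [ComputeUnit(64) for _ in range(4)])
print(len(toy_gpu.compute_units), "Compute Units,",
      toy_gpu.total_processing_elements(), "processing elements")
```

The spec sheet would list this card as having 4 Compute Units, even though 256 processing elements are doing the work underneath.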

What Are CUDA Cores?

Where AMD likes to keep things simple with the number of Compute Units, Nvidia complicates things by using terms like CUDA cores.

CUDA cores aren’t exactly cores. They are floating-point units that Nvidia likes to term cores for marketing purposes. And, if you remember, core clusters have many floating-point units built in. These units carry out the arithmetic behind vector calculations; they don’t fetch or schedule instructions on their own the way a CPU core does.

So, calling them a “core” is pure marketing.

Therefore, a CUDA core is a processing element that performs floating-point operations. There can be many CUDA cores inside a single core cluster.

Finally, Nvidia calls core clusters “Streaming Multiprocessors,” or SMs. SMs are equivalent to AMD’s Compute Units, since Compute Units are core clusters themselves.
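The naming can be summed up in a small lookup table. This is a minimal sketch: the `TERMS` table and `translate` helper are hypothetical, and "Stream Processor" is AMD's name for a processing element, a term this article doesn't otherwise use.

```python
# Generic term -> each vendor's name for it.
# "core cluster" and "processing element" are the generic names used in
# this article; "Stream Processor" is AMD's term, added here for symmetry.
TERMS = {
    "core cluster": {
        "AMD": "Compute Unit",
        "Nvidia": "Streaming Multiprocessor",
    },
    "processing element": {
        "AMD": "Stream Processor",
        "Nvidia": "CUDA core",
    },
}


def translate(term, from_vendor, to_vendor):
    """Return the other vendor's name for the same kind of component."""
    for names in TERMS.values():
        if names[from_vendor] == term:
            return names[to_vendor]
    raise KeyError(f"unknown {from_vendor} term: {term}")


print(translate("Compute Unit", "AMD", "Nvidia"))
print(translate("CUDA core", "Nvidia", "AMD"))
```

Read row by row, the table shows why a Compute Unit lines up with an SM and not with a CUDA core.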

What's the Difference Between Compute Units and CUDA Cores?

The main difference between a Compute Unit and a CUDA core is that the former refers to a core cluster, and the latter refers to a processing element.

To understand this difference better, let us take the example of a gearbox.

A gearbox is a unit comprising multiple gears. You can think of the gearbox as a Compute Unit and the individual gears as floating-point units, or CUDA cores.

In other words, where Compute Units are a collection of components, CUDA cores represent a specific component inside the collection. So, Compute Units and CUDA cores aren’t comparable.

This is also why the number of Compute Units that AMD quotes for its GPUs is always much lower than the CUDA core count of competing Nvidia cards. A fairer comparison would be between the number of Streaming Multiprocessors on the Nvidia card and the number of Compute Units on the AMD card.
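A quick bit of arithmetic makes the point. The figures below are taken from the public spec sheets of the RTX 3090 (82 SMs, 10,496 CUDA cores) and the RX 6900 XT (80 Compute Units, 5,120 Stream Processors); the `specs` layout itself is just an assumption for this sketch.

```python
# Cluster and processing-element counts from public spec sheets.
specs = {
    "Nvidia RTX 3090": {"clusters": 82, "elements": 10496},
    "AMD RX 6900 XT": {"clusters": 80, "elements": 5120},
}

per_cluster = {}
for name, s in specs.items():
    # Processing elements packed into each core cluster.
    per_cluster[name] = s["elements"] // s["clusters"]
    print(f"{name}: {s['clusters']} clusters x "
          f"{per_cluster[name]} elements = {s['elements']}")
```

Compared cluster to cluster, the two cards are close (82 versus 80). The large gap only appears when one card's processing-element count is set against the other's cluster count. Keep in mind that neither number says much about real-world performance on its own.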

CUDA Cores and Compute Units are Different and Not Comparable

Companies have the habit of using confusing terminology to present their products in the best light. Not only does this confuse the customer, but it also makes it hard to keep track of the things that matter.

So, make sure you know what to look for when searching for a GPU. Staying far away from marketing jargon will make your decision a lot better and more stress-free.