Want to learn web scraping with Python but are confused about whether to use Beautiful Soup, Selenium, or Scrapy for your next project? While all these Python libraries and frameworks are powerful in their own right, they don't cater to all web scraping needs, and hence, it's important to know which tool you should use for a particular job.

Let's take a look at the differences between Beautiful Soup, Scrapy, and Selenium, so you can make a wise decision before starting your next Python web scraping project.

1. Ease of Use

If you're a beginner, your first requirement would be a library that's easy to learn and use. Beautiful Soup offers you all the rudimentary tools you need to scrape the web, and it's especially helpful for people who have minimal experience with Python but want to hit the ground running with web scraping.

The only caveat is that, due to its simplicity, Beautiful Soup isn't as powerful as Scrapy or Selenium. Programmers with development experience can easily master both Scrapy and Selenium, but for beginners, the first project can take a lot of time to build if they choose to go with these frameworks instead of Beautiful Soup.

To scrape the title tag content on example.com using Beautiful Soup, you'd use the following code:

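import requests
from bs4 import BeautifulSoup

url = "https://example.com/"
# Download the page and keep its HTML as text
res = requests.get(url).text
# Parse the HTML with Python's built-in parser
soup = BeautifulSoup(res, 'html.parser')
title = soup.find("title").text
print(title)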

To achieve similar results using Selenium, you'd write:

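from selenium import webdriver
from selenium.webdriver.chrome.service import Service

url = "https://example.com"
# Selenium 4 takes the driver path through a Service object
driver = webdriver.Chrome(service=Service("path/to/chromedriver"))
driver.get(url)
# The browser exposes the page title directly
title = driver.title
print(title)
driver.quit()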

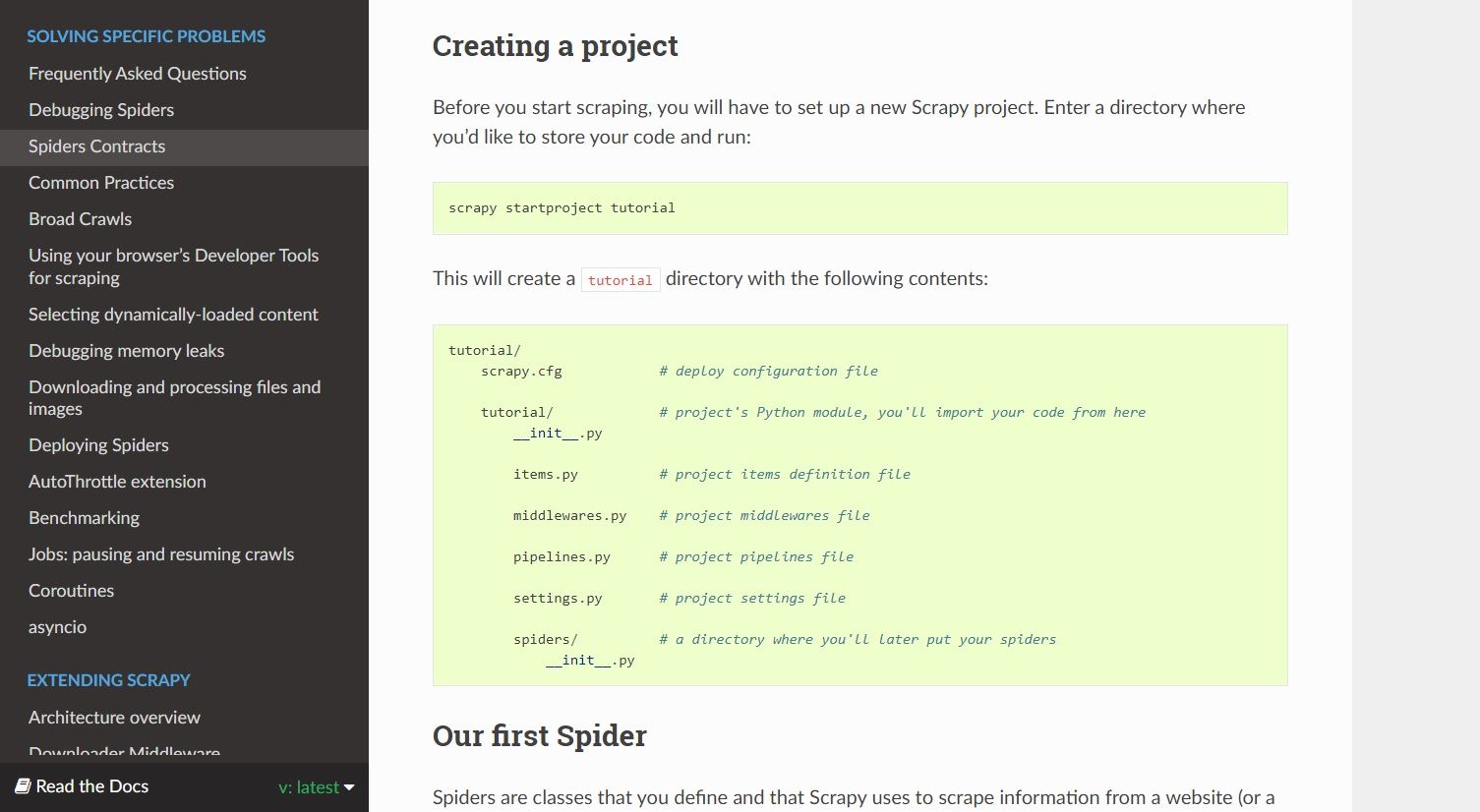

The file structure of a Scrapy project consists of multiple files, which adds to its complexity. The following code scrapes the title from example.com:

import scrapy


class TitleSpider(scrapy.Spider):
    name = 'title'
    start_urls = ['https://example.com']

    def parse(self, response):
        # ::text selects the tag's text node; get() returns it as a string
        yield {
            'title': response.css('title::text').get(),
        }

If you wish to extract data from a service that offers an official API, it might be a wise decision to use the API instead of developing a web scraper.

2. Scraping Speed and Parallelization

Out of the three, Scrapy is the clear winner when it comes to speed. This is because it sends requests concurrently by default. Scrapy is built on an asynchronous networking engine, so it keeps several HTTP requests in flight at once (16 by default), and as soon as a response has been downloaded, it's ready to send the next request.
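How many requests Scrapy keeps in flight is controlled from the project's settings. As a rough sketch, a settings.py fragment might look like the following. The setting names are Scrapy's own, but every value here is an illustrative assumption rather than a recommendation.

```python
# settings.py (fragment): values are illustrative assumptions, tune per site

# Upper bound on requests Scrapy keeps in flight at once (Scrapy's default is 16).
CONCURRENT_REQUESTS = 32

# Cap per domain so a single site is not hammered (default is 8).
CONCURRENT_REQUESTS_PER_DOMAIN = 8

# Seconds to wait between consecutive requests to the same site (default is 0).
DOWNLOAD_DELAY = 0.25

# Let Scrapy adapt the delay to the latency it observes from the server.
AUTOTHROTTLE_ENABLED = True
```

Raising the concurrency limits speeds up a crawl only as far as the target site tolerates it, which is why the sketch pairs a higher global limit with a delay and throttling.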

With Beautiful Soup, you can use the threading library to send concurrent HTTP requests, but it's not convenient and you'll have to learn multithreading to do so. On Selenium, it's impossible to achieve parallelization without launching multiple browser instances.
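For the Beautiful Soup route, one possible shape is a thread pool wrapped around an ordinary fetch-and-parse function. The sketch below uses the standard library's concurrent.futures module, a higher-level wrapper than the threading module itself. The names fetch_title and scrape_titles, the timeout, and the worker count are all assumptions made for illustration.

```python
from concurrent.futures import ThreadPoolExecutor


def fetch_title(url):
    """Download one page and return the text of its <title> tag (or None)."""
    # Third-party imports live here so the pool logic below can be tried
    # with a stand-in worker even where these packages are not installed.
    import requests
    from bs4 import BeautifulSoup

    response = requests.get(url, timeout=10)
    response.raise_for_status()
    soup = BeautifulSoup(response.text, "html.parser")
    return soup.title.get_text(strip=True) if soup.title else None


def scrape_titles(urls, worker=fetch_title, max_workers=8):
    """Run `worker` over every URL in a thread pool, keeping input order."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(worker, urls))
```

Calling scrape_titles(["https://example.com/"]) would return a one-element list of titles. Threads suit this job because each worker spends most of its time waiting on the network rather than using the CPU.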

If you were to rank these three web scraping tools in terms of speed, Scrapy is the fastest, followed by Beautiful Soup and Selenium.

3. Memory Usage

Selenium is a browser automation API that has found its applications in the web scraping field. When you use Selenium to scrape a website, it launches a real browser instance, either visible or headless, and drives it for you. This makes Selenium a resource-intensive tool when compared with Beautiful Soup and Scrapy.

Since the latter two run as plain Python processes and never start a browser, they use fewer system resources and offer better performance than Selenium.

4. Dependency Requirements

Beautiful Soup is a collection of parsing tools that help you extract data from HTML and XML files. It ships with nothing else. You have to use libraries like requests or urllib to make HTTP requests, built-in parsers to parse the HTML/XML, and additional libraries to implement proxies or database support.

Scrapy, on the other hand, comes with the whole shebang. You get tools to send requests, parse the downloaded code, perform operations on the extracted data, and store the scraped information. You can add other functionalities to Scrapy using extensions and middleware, but that would come later.
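The storage step is usually handled by an item pipeline, which is a plain Python class with a few hook methods. Here is a minimal sketch that appends each item to a JSON Lines file. The class name, the output file name, and the assumption that spiders yield dict-like items are all illustrative.

```python
import json


class JsonLinesPipeline:
    """Append every scraped item to a JSON Lines file, one object per line."""

    def __init__(self, path="items.jsonl"):  # hypothetical output file name
        self.path = path
        self.file = None

    def open_spider(self, spider):
        # Scrapy calls this once when the spider starts.
        self.file = open(self.path, "w", encoding="utf-8")

    def close_spider(self, spider):
        # Scrapy calls this once when the spider finishes.
        self.file.close()

    def process_item(self, item, spider):
        # Called for every item a spider yields; assumes dict-like items.
        self.file.write(json.dumps(dict(item), ensure_ascii=False) + "\n")
        return item  # hand the item on to any later pipeline
```

A pipeline like this is switched on through the ITEM_PIPELINES setting, for example by mapping a module path such as "myproject.pipelines.JsonLinesPipeline" (a hypothetical project layout) to an order number like 300.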

With Selenium, you need a web driver for the browser you want to automate, although recent Selenium releases can fetch one for you through Selenium Manager. To implement other features like data storage and proxy support, you'd need third-party modules.

5. Documentation Quality

Overall, the documentation for each project is well structured and describes its methods using examples. But the effectiveness of a project's documentation heavily depends on the reader as well.

Beautiful Soup's documentation is much better for beginners who are starting with web scraping. Selenium and Scrapy have detailed documentation, no doubt, but the technical jargon can catch many newcomers off guard.

If you're experienced with programming concepts and terminology, then any of the three sets of documentation would be a cinch to read through.

6. Support for Extensions and Middleware

Scrapy is the most extensible web scraping Python framework, period. It supports middleware, extensions, proxies, and more, and helps you develop a crawler for large-scale projects.

You can write foolproof and efficient crawlers by implementing middlewares in Scrapy, which are basically hooks that add custom functionality to the framework's default mechanism. For example, the HttpErrorMiddleware takes care of HTTP errors so the spiders don't have to deal with them while processing requests.

Middleware and extensions are exclusive to Scrapy but you can achieve similar results with Beautiful Soup and Selenium by using additional Python libraries.
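To make the hook idea concrete, here is a hedged sketch of a custom downloader middleware that sets a User-Agent header on every outgoing request. The process_request hook is part of Scrapy's downloader middleware interface. The class name and the user-agent strings are placeholders invented for this example.

```python
import random


class RandomUserAgentMiddleware:
    """Downloader middleware that sets a User-Agent on each outgoing request."""

    # Placeholder strings for illustration, not real browser identifiers.
    USER_AGENTS = [
        "example-crawler/1.0 (+https://example.com/bot)",
        "example-crawler/1.1 (+https://example.com/bot)",
    ]

    def process_request(self, request, spider):
        # Scrapy calls this hook for every request before it is downloaded.
        request.headers["User-Agent"] = random.choice(self.USER_AGENTS)
        # Returning None tells Scrapy to keep processing the request normally.
        return None
```

Scrapy picks the class up once it is listed in the DOWNLOADER_MIDDLEWARES setting, for instance under a module path such as "myproject.middlewares.RandomUserAgentMiddleware" (again a hypothetical layout) with an order number like 543.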

7. JavaScript Rendering

Selenium has one use case where it surpasses other web scraping libraries, and that is scraping websites that render their content with JavaScript. Although you can scrape JavaScript elements using Scrapy middlewares, the Selenium workflow is the easiest and most convenient of all.

You use a browser to load a website, interact with it using clicks and button presses, and when you've got the content you need to scrape on screen, extract it using Selenium's CSS and XPath selectors.
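The load, click, and extract workflow described above could look roughly like this. It is a sketch under assumptions: the function name and its selector parameters are hypothetical, and it expects Chrome plus a matching driver to be available on the machine.

```python
def click_and_extract(url, button_css, result_css, timeout=10):
    """Open `url`, click one element, then return the text of the results."""
    # Selenium imports live inside the function so this module still loads
    # on machines without Selenium installed.
    from selenium import webdriver
    from selenium.webdriver.common.by import By
    from selenium.webdriver.support import expected_conditions as EC
    from selenium.webdriver.support.ui import WebDriverWait

    driver = webdriver.Chrome()  # assumes Chrome and a matching driver exist
    try:
        driver.get(url)
        wait = WebDriverWait(driver, timeout)
        # Wait until the button can be clicked, then click it.
        wait.until(EC.element_to_be_clickable((By.CSS_SELECTOR, button_css))).click()
        # Wait for the JavaScript-rendered content to appear in the DOM.
        wait.until(EC.presence_of_element_located((By.CSS_SELECTOR, result_css)))
        return [el.text for el in driver.find_elements(By.CSS_SELECTOR, result_css)]
    finally:
        driver.quit()  # always release the browser process
```

A call might be click_and_extract("https://example.com/", "button#load-more", "div.result"), where both selectors are made up. The explicit waits matter because JavaScript-rendered content often arrives after the initial page load.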

Beautiful Soup can select HTML elements using CSS selectors or its own find() and find_all() methods, but it has no XPath support. It also doesn't offer functionality to scrape JavaScript-rendered elements on a web page, because it only parses the HTML it is given.
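As a small illustration of Beautiful Soup's CSS selector support, the sketch below pulls link text and URLs out of a page. The function name and the markup it expects (links inside headings within div elements of class "post") are assumptions chosen for the example.

```python
from bs4 import BeautifulSoup


def extract_post_links(html):
    """Return (text, href) pairs for heading links inside div.post elements."""
    soup = BeautifulSoup(html, "html.parser")
    # select() takes a CSS selector and returns every matching tag.
    links = soup.select("div.post h2 > a[href]")
    return [(a.get_text(strip=True), a["href"]) for a in links]
```

select_one() works the same way but returns only the first match, or None when nothing matches.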

Web Scraping Made Easy With Python

The internet is full of raw data. Web scraping helps convert this data into meaningful information that can be put to good use. Selenium is most probably your safest bet if you want to scrape a website with JavaScript or need to trigger some on-screen elements before extracting the data.

Scrapy is a full-fledged web scraping framework for all your needs, whether you want to write a small crawler or a large-scale scraper that repeatedly crawls the internet for updated data.

You can use Beautiful Soup if you're a beginner or need to quickly develop a scraper. Whatever framework or library you go with, it's easy to start learning web scraping with Python.