Linux users often cite security benefits as one of the reasons to prefer open source software. Since the code is open for everyone to see, there are more eyes searching for potential bugs. They refer to the opposite approach, where code is only visible to the developers, as security through obscurity. Only a few people can see the code, and the people who want to take advantage of bugs aren't on that list.

While this language is common in the open source world, this isn't a Linux-specific issue. In fact, this debate is older than computers. So is the question settled? Is one approach actually safer than the other, or is it possible that there's truth to both?

What Is Security Through Obscurity?

Security through obscurity is the reliance on secrecy as a means of protecting components of a system. The companies behind today's most successful commercial operating systems partially adopt this method: Microsoft, Apple, and to a lesser extent, Google. The idea is that if bad actors don't know a flaw exists, how can they take advantage of it?

You and I cannot take a peek at the code that makes Windows run (unless you happen to have a relationship with Microsoft). The same is true of macOS. Google open sources the core components of Android, but most of its apps remain proprietary. Similarly, Chrome OS is largely open source, except for the special bits that separate Chrome OS from Chromium OS.

What Are the Drawbacks?

Since we cannot see what's going on in the code, we have to trust companies when they say their software is secure. In reality, they may have the strongest security in the industry (as seems to be the case with Google's online services), or they may have glaring holes that embarrassingly linger around for years.

Security through obscurity, on its own, does not make a system secure. This is taken as a given in the world of cryptography. Kerckhoffs's principle holds that a cryptosystem should remain secure even if everything about it except the key falls into the hands of the enemy. This principle dates all the way back to the late 1800s.

Shannon's maxim followed in the 20th century. It says that systems should be designed under the assumption that the enemy will immediately gain full familiarity with them.
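To make Kerckhoffs's principle concrete, here is a minimal Python sketch (not from the original article) that authenticates a message with HMAC-SHA256, a fully public algorithm available in Python's standard `hmac` and `hashlib` modules. The names `sign`, `verify`, and `SECRET_KEY` are hypothetical, chosen only for illustration. Everything about the scheme can be published; an attacker who knows exactly how it works still cannot produce a valid tag without the key.

```python
import hashlib
import hmac
import secrets

# Hypothetical key for this sketch: the ONLY secret in the whole scheme.
# The algorithm (HMAC-SHA256) is public and documented.
SECRET_KEY = secrets.token_bytes(32)


def sign(message: bytes, key: bytes = SECRET_KEY) -> bytes:
    """Return an HMAC-SHA256 tag for `message` under `key`."""
    return hmac.new(key, message, hashlib.sha256).digest()


def verify(message: bytes, tag: bytes, key: bytes = SECRET_KEY) -> bool:
    """Recompute the tag and compare in constant time to avoid timing leaks."""
    return hmac.compare_digest(sign(message, key), tag)


if __name__ == "__main__":
    msg = b"transfer $100 to Alice"
    tag = sign(msg)
    print(verify(msg, tag))  # True

    # An attacker who knows the algorithm but not the key must guess one.
    fake_msg = b"transfer $100 to Mallory"
    forged = sign(fake_msg, key=secrets.token_bytes(32))
    print(verify(fake_msg, forged))  # False (with overwhelming probability)
```

The design mirrors the principle: because the only secret is the 32-byte key, a leak means rotating the key rather than redesigning the algorithm. `hmac.compare_digest` is used for the comparison so the check does not reveal information through timing.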

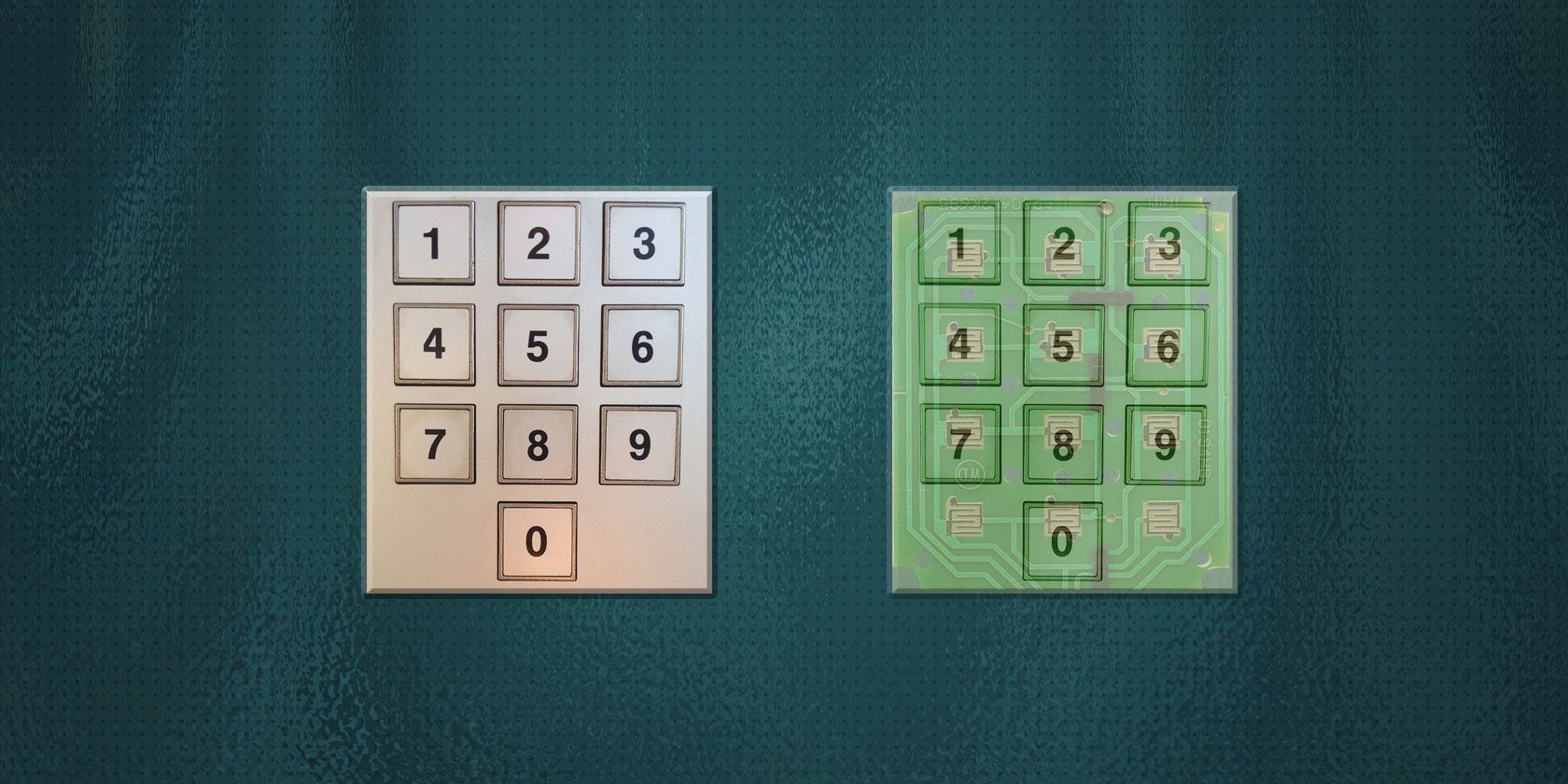

Back in the 1850s, American locksmith Alfred Hobbs demonstrated how to pick state-of-the-art locks made by manufacturers who claimed that secrecy made their designs safer. People who pick locks for a living get very good at it. A lock isn't impenetrable just because a skilled picker hasn't seen its design before.

The same lesson shows up in the regular security updates that arrive on Windows, macOS, and other proprietary operating systems. If keeping the code private were enough to keep flaws hidden, these patches wouldn't be necessary.

Security Through Obscurity Can't Be the Only Solution

Fortunately, this approach is only part of the defensive strategy these companies follow. Google rewards people who discover security flaws in Chrome, and it's hardly the only tech giant to use this tactic.

Proprietary tech companies spend billions on making their software safe. They aren't relying entirely on smoke and mirrors to keep bad guys away. Instead, they rely on secrecy as only the first layer of defense, slowing attackers down by making it harder for them to get information on the system they're looking to infiltrate.

The thing is, sometimes the threat doesn't come from outside the operating system. The release of Windows 10 showed many users that unwanted behavior can come from the software itself. Microsoft has ramped up its efforts to collect information on Windows users in order to further monetize its product. What it does with that data, we don't know. We can't take a look at the code to see. And even when Microsoft does open up, it remains purposefully vague.

Is Open Source Security Better?

When source code is public, more eyes are available to spot vulnerabilities. If there are bugs in the code, the thinking goes, then someone will spot them. And don't even think about sneaking a backdoor into your software. Someone will notice, and they will call you out.

Few people expect end users to view and make sense of source code. That's for other developers and security experts to do. We can rest easy knowing that they're doing this work on our behalf.

Or can we? We can draw an easy parallel with government. When new legislation is passed or executive orders are issued, journalists and legal professionals sometimes scrutinize the material. Other times it goes under the radar.

Bugs such as Heartbleed have shown us that security isn't guaranteed. Sometimes bugs are so obscure that they go decades without detection, even though the software is used by millions (not to say this doesn't happen on Windows too). It can take a while to discover quirks such as pressing the Backspace key 28 times to bypass the GRUB2 bootloader's password prompt. And just because many people can look at code doesn't mean that they do. Again, as we sometimes see in government, public material can go ignored simply because it's boring.

So why is Linux widely regarded as a secure operating system? While this is partly due to the advantages of Unix-style design, Linux also benefits from the sheer number of people invested in its ecosystem. With organizations ranging from Google and IBM to the U.S. Department of Defense and the Chinese government, many parties have a stake in keeping the software secure. Since the code is open, people are free to make improvements and submit them back for other Linux users to benefit from. Or they can keep those improvements for themselves. By comparison, Windows and macOS are limited to the improvements that come directly from Microsoft and Apple.

Plus, while Windows may be dominant on desktops, Linux is widely used on servers and other mission-critical hardware. Many companies like having the option to make their own fixes when the stakes are this high. And if you're truly paranoid or need to guarantee that no one is monitoring what's happening on your PC, you can only do that if you can verify what the code on your machine is doing.

Which Security Model Do You Prefer?

There is a general consensus that encryption algorithms should be public, with only the keys kept private. But there is no consensus that all software would be safer if the code were open. This may not even be the right question to ask. Other factors affect how vulnerable your system may be, such as how often exploits are discovered and how quickly they're fixed.

Nonetheless, does the closed-source nature of Windows or macOS leave you feeling uncomfortable? Do you use them anyway? Do you consider that a perk, not a detriment? Chime in!