You may have heard the term "Markov chain" before, but unless you've taken a few classes on probability theory or computer science algorithms, you probably don't know what they are, how they work, and why they're so important.

The notion of a Markov chain is an "under the hood" concept, meaning you don't really need to know what they are in order to benefit from them. However, you can certainly benefit from understanding how they work. They're simple yet useful in so many ways.

So here's a crash course -- everything you need to know about Markov chains condensed down into a single, digestible article. If you want to delve even deeper, try the free information theory course on Khan Academy (and consider other online course sites too).

Markov Chains 101

Let's say you want to predict what the weather will be like tomorrow. A true prediction -- the kind performed by expert meteorologists -- would involve hundreds, or even thousands, of different variables that are constantly changing. Weather systems are incredibly complex and impossible to model, at least for laymen like you and me. But we can simplify the problem by using probability estimates.

Imagine you had access to thirty years of weather data. You start at the beginning, noting that Day 1 was sunny. You keep going, noting that Day 2 was also sunny, but Day 3 was cloudy, then Day 4 was rainy, which led into a thunderstorm on Day 5, followed by sunny and clear skies on Day 6.

Ideally you'd be more granular, opting for an hour-by-hour analysis instead of a day-by-day analysis, but this is just an example to illustrate the concept, so bear with me!

You do this over the entire 30-year data set (which would be just shy of 11,000 days) and calculate the probabilities of what tomorrow's weather will be like based on today's weather. For example, if today is sunny, then:

- A 50 percent chance that tomorrow will be sunny again.

- A 30 percent chance that tomorrow will be cloudy.

- A 20 percent chance that tomorrow will be rainy.

Now repeat this for every possible weather condition. If today is cloudy, what are the chances that tomorrow will be sunny, rainy, foggy, thunderstorms, hailstorms, tornadoes, etc? Pretty soon, you have an entire system of probabilities that you can use to predict not only tomorrow's weather, but the next day's weather, and the next day.

Transitional States

This is the essence of a Markov chain. You have individual states (in this case, weather conditions) where each state can transition into other states (e.g. sunny days can transition into cloudy days) and those transitions are based on probabilities. If you want to predict what the weather might be like in one week, you can explore the various probabilities over the next seven days and see which ones are most likely. Thus, a Markov "chain".

Who is Markov? He was a Russian mathematician who came up with the whole idea of one state leading directly to another state based on a certain probability, where no other factors influence the transitional chance. Basically, he invented the Markov chain, hence the naming.

How Markov Chains Are Used in the Real World

With the explanation out of the way, let's explore some of the real world applications where they come in handy. You might be surprised to find that you've been making use of Markov chains all this time without knowing it!

Name Generation

Have you ever participated in tabletop gaming, MMORPG gaming, or even fiction writing? You may have agonized over the naming of your characters (at least at one point or another) -- and when you just couldn't seem to think of a name you like, you probably resorted to an online name generator.

Have you ever wondered how those name generators worked? As it turns out, many of them use Markov chains, making it one of the most-used solutions. (There are other algorithms out there that are just as effective, of course!)

All you need is a collection of letters where each letter has a list of potential follow-up letters with probabilities. So, for example, the letter "M" has a 60 percent chance to lead to the letter "A" and a 40 percent chance to lead to the letter "I". Do this for a whole bunch of other letters, then run the algorithm. Boom, you have a name that makes sense! (Most of the time, anyway.)

Google PageRank

One of the interesting implications of Markov chain theory is that as the length of the chain increases (i.e. the number of state transitions increases), the probability that you land on a certain state converges on a fixed number, and this probability is independent of where you start in the system.

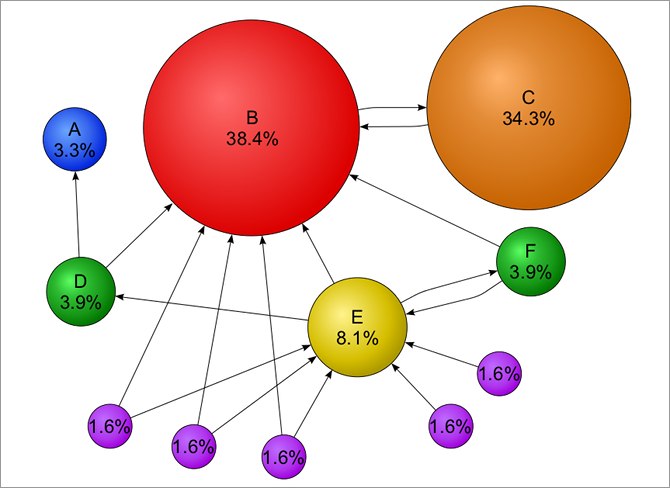

This is extremely interesting when you think of the entire world wide web as a Markov system where each webpage is a state and the links between webpages are transitions with probabilities. This theorem basically says that no matter which webpage you start on, your chance of landing on a certain webpage X is a fixed probability, assuming a "long time" of surfing.

And this is the basis of how Google ranks webpages. Indeed, the PageRank algorithm is a modified (read: more advanced) form of the Markov chain algorithm.

The higher the "fixed probability" of arriving at a certain webpage, the higher its PageRank. This is because a higher fixed probability implies that the webpage has a lot of incoming links from other webpages -- and Google assumes that if a webpage has a lot of incoming links, then it must be valuable. The more incoming links, the more valuable it is.

It's more complicated than that, of course, but it makes sense. Why does a site like About.com get higher priority on search result pages? Because it turns out that users tend to arrive there as they surf the web. Interesting, isn't it?

Typing Word Prediction

Mobile phones have had predictive typing for decades now, but can you guess how those predictions are made? Whether you're using Android (alternative keyboard options) or iOS (alternative keyboard options), there's a good chance that your app of choice uses Markov chains.

This is why keyboard apps ask if they can collect data on your typing habits. For example, in Google Keyboard, there's a setting called Share snippets that asks to "share snippets of what and how you type in Google apps to improve Google Keyboard". In essence, your words are analyzed and incorporated into the app's Markov chain probabilities.

That's also why keyboard apps often present three or more options, typically in order of most probable to least probable. It can't know for sure what you meant to type next, but it's correct more often than not.

Subreddit Simulation

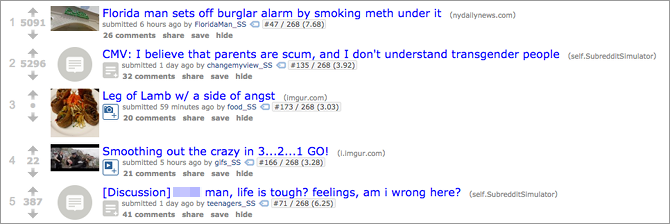

If you've never used Reddit, we encourage you to at least check out this fascinating experiment called /r/SubredditSimulator.

Simply put, Subreddit Simulator takes in a massive chunk of ALL the comments and titles made across Reddit's numerous communities, then analyzes the word-by-word makeup of each sentence. Using this data, it generates word-to-word probabilities -- then uses those probabilities to come generate titles and comments from scratch.

One interesting layer to this experiment is that comments and titles are categorized by the community from which the data came, so the kinds of comments and titles generated by /r/food's data set are wildly different from the comments and titles generates by /r/soccer's data set.

And the funniest -- or perhaps the most disturbing -- part of all this is that the generated comments and titles can frequently be indistinguishable from those made by actual people. It's absolutely fascinating.

Do you know of any other cool uses for Markov chains? Got any questions that still need answering? Let us know in a comment down below!