Are you a believer in the idea that once something is published on the Internet, it's published forever? Well, today we're going to dispel that myth.

The truth is that in many cases it's quite possible to eradicate information from the Internet. Sure, there's a record of web pages that have been deleted if you search the Wayback Machine, right? Yup, absolutely. On the Wayback Machine there are records of web pages going back many years -- pages that you won't find with a Google search because the web page no longer exists. Someone deleted it, or the website got shut down.

So, there's no getting around it, right? Information will forever be engraved into the stone of the Internet, there for generations to see? Well, not exactly.

The truth is that while it might be difficult or impossible to wipe out major news stories that have proliferated from one news website or blog to another like a virus, it is actually quite easy to completely eradicate a web page or several web pages from all records of existence -- to remove that page from both search engines and the Wayback Machine. There is a catch of course, but we'll get to that.

3 Ways to Remove Blog Pages From the Net

The first method is the one that the majority of website owners use, because they don't know any better -- simply deleting web pages. This might happen because you've realized that you have duplicate content on your site, or because you have a page that you don't want to show up in search results.

Simply Delete the Page

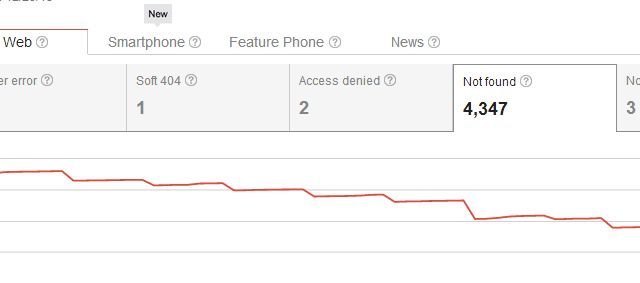

The problem with entirely deleting pages from your website is that since you've already established the page on the net, there are likely to be links from your own site as well as external links from other sites to that particular page. When you delete it, Google flags that page of yours as missing the next time it crawls your site.
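Before deleting anything, it can help to know which of your own pages still link to the page in question. Here is a minimal sketch of that check, assuming you already have a map of each page's outgoing internal links. The function name `find_broken_links` and the sample paths are hypothetical, made up for illustration.

```python
def find_broken_links(existing_pages, links_by_page):
    """Return sorted (source, target) pairs whose target is no longer on the site."""
    broken = []
    for source, targets in links_by_page.items():
        for target in targets:
            if target not in existing_pages:
                broken.append((source, target))
    return sorted(broken)


# Hypothetical site where /old-post.html has just been deleted.
existing_pages = {"/index.html", "/about.html"}
links_by_page = {
    "/index.html": ["/about.html", "/old-post.html"],
    "/about.html": ["/index.html"],
}

broken_links = find_broken_links(existing_pages, links_by_page)
print(broken_links)
```

Every pair it reports is an internal link that will start producing a "Not found" error once the page is gone. External links from other sites are outside your control and won't show up in a check like this.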

So, in deleting your page you've not only created an issue with "Not found" crawl errors for yourself, but you've also created a problem for anyone who ever linked to the page. Usually, users who get to your site from one of those external links will see your 404 page, which isn't a major problem if you use something like Google's custom 404 code to give users helpful suggestions or alternatives. But you'd think there could be more graceful ways of deleting pages from search results without kicking off all of those 404s for existing incoming links, right?

Well, there are.

Remove a Page From Google Search Results

First of all, you should understand that if the web page you want to remove from Google search results isn't a page from your own site, then you're out of luck unless there are legal reasons for removal or the site has posted your personal information online without your permission. If that's the case, then use Google's removal troubleshooter to submit a request to have the page removed from search results. If you have a valid case, you may find some success having the page removed -- of course, you might have even greater success just contacting the website owner, as I described how to do back in 2009.

Now, if the page you want to remove from search results is on your own site, you're in luck. All you need to do is create a robots.txt file that disallows either the specific page you don't want in the search results or the entire directory whose contents you don't want indexed. Keep in mind that Disallow works by stopping compliant bots from crawling the URL, so a blocked page that other sites still link to can occasionally linger in results as a bare URL with no description. Here's what blocking a single page looks like.

    User-agent: *
    Disallow: /my-deleted-article-that-i-want-removed.html

You can block bots from crawling entire directories of your site as follows.

    User-agent: *
    Disallow: /content-about-personal-stuff/
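If you want to sanity-check rules like these before uploading them, Python's standard `urllib.robotparser` module can evaluate a robots.txt file locally. The sketch below is only an illustration: it combines the two Disallow rules above into one group, and the `example.com` domain and the `is_crawlable` helper are assumptions of mine, not part of any real site.

```python
from urllib.robotparser import RobotFileParser

# The two rules from the article, combined under one User-agent group.
# Note that User-agent and Disallow sit on adjacent lines with no blank line between them.
ROBOTS_TXT = """\
User-agent: *
Disallow: /my-deleted-article-that-i-want-removed.html
Disallow: /content-about-personal-stuff/
"""

SITE = "https://example.com"  # placeholder domain, for illustration only


def is_crawlable(robots_txt, user_agent, url):
    """Return True if the given robots.txt text lets user_agent fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


single_page = is_crawlable(
    ROBOTS_TXT, "Googlebot", SITE + "/my-deleted-article-that-i-want-removed.html"
)
inside_directory = is_crawlable(
    ROBOTS_TXT, "Googlebot", SITE + "/content-about-personal-stuff/diary.html"
)
other_page = is_crawlable(ROBOTS_TXT, "Googlebot", SITE + "/about.html")

print(single_page, inside_directory, other_page)
```

The first two checks should come back `False` (blocked) and the last one `True`. This only tells you how a well-behaved parser reads the file; how quickly a search engine acts on it is a separate matter.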

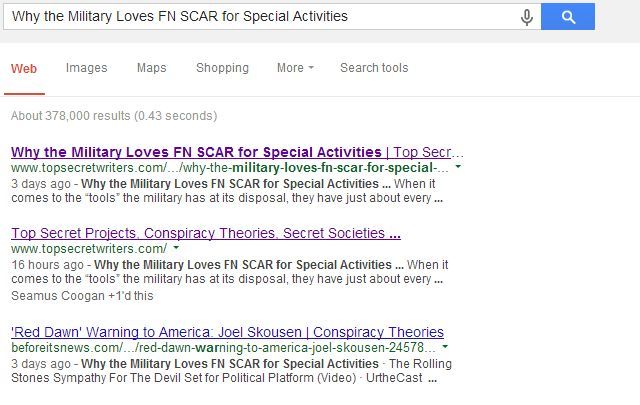

Google has an excellent support page that can help you create a robots.txt file if you've never created one before. This works extremely well, as I explained recently in an article about structuring syndication deals so that they don't hurt you (asking syndication partners to disallow indexing of their pages where you are syndicated). Once my own syndication partner agreed to do this, the pages that were duplicated content from my blog completely disappeared from search listings.

Their site now shows up only in third place, via the page where they list our title, while my blog holds both the first and second spots; that would have been nearly impossible had a higher-authority website left the duplicated page indexed.

What many people don't realize is that the same thing is possible with the Internet Archive (the Wayback Machine). Here are the lines you need to add to your robots.txt file to make it happen.

    User-agent: ia_archiver
    Disallow: /sample-category/

In this example, I'm telling the Internet Archive to remove anything in the sample-category subdirectory on my site from the Wayback Machine. The Internet Archive explains how to do this on their Exclusion help page. This is also where they explain that "The Internet Archive is not interested in offering access to web sites or other Internet documents whose authors do not want their materials in the collection."
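Because this rule names a single user agent, it's worth confirming that it affects only the archive's crawler and leaves search engine bots alone. Here is a rough sketch using Python's standard `urllib.robotparser`. The `blocked_agents` helper and the `example.com` URL are hypothetical, and the check shows only how the rule is parsed, not how the Internet Archive itself responds to it.

```python
from urllib.robotparser import RobotFileParser

# The ia_archiver rule from the article, with no rule for any other bot.
ARCHIVE_RULES = """\
User-agent: ia_archiver
Disallow: /sample-category/
"""


def blocked_agents(robots_txt, url, agents):
    """Return the subset of agents that robots_txt forbids from fetching url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [agent for agent in agents if not parser.can_fetch(agent, url)]


blocked = blocked_agents(
    ARCHIVE_RULES,
    "https://example.com/sample-category/post.html",  # placeholder URL
    ["ia_archiver", "Googlebot"],
)
print(blocked)
```

Only `ia_archiver` should appear in the result. If you also wanted the directory out of search results, you would add a second group for `User-agent: *` or for the specific search bot.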

This runs contrary to the commonly held belief that anything posted to the Internet gets swept up into the archive for all eternity. Nope -- webmasters who own the content can specifically have it removed from the archive by using the robots.txt approach.

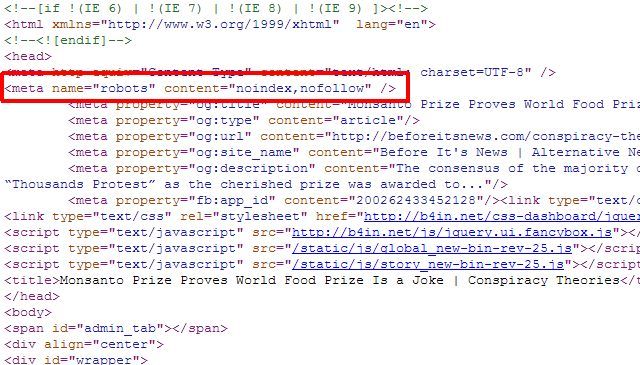

Remove an Individual Page With Meta Tags

If you only have a few individual pages that you want to remove from Google Search results, you don't have to use the robots.txt approach at all. You could simply add the correct "robots" meta tag to the individual pages, telling robots not to index the page or follow any links on it.

<meta name="robots" content="noindex, nofollow" />

You could use the "robots" meta tag above to stop all robots from indexing the page, or you could specifically tell the Google robot not to index it, so that the page is removed only from Google search results and other search robots can still access the page content.

<meta name="googlebot" content="noindex" />

It's completely up to you how you'd like to manage what robots do with the page and whether or not the page gets listed. For just a few individual pages, this may be the better approach. To remove an entire directory of content, go with the robots.txt method.
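If you manage more than a handful of pages, it's easy to lose track of which ones carry which tag. As a rough sketch, the snippet below uses Python's standard `html.parser` module to pull the "robots" and "googlebot" meta directives out of a page's HTML. The class name `RobotsMetaParser`, the `robots_directives` helper, and the sample page are all made up for illustration.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collect the directives found in robots and googlebot meta tags."""

    def __init__(self):
        super().__init__()
        self.directives = {}

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attributes = dict(attrs)
        name = (attributes.get("name") or "").lower()
        if name in ("robots", "googlebot"):
            content = attributes.get("content") or ""
            # Split "noindex, nofollow" into a set of individual directives.
            self.directives[name] = {
                part.strip().lower() for part in content.split(",") if part.strip()
            }


def robots_directives(html):
    """Return a dict mapping meta tag name to its set of directives."""
    parser = RobotsMetaParser()
    parser.feed(html)
    return parser.directives


# Hypothetical page carrying the Google-only tag from the article.
page = (
    "<html><head>"
    '<meta name="googlebot" content="noindex" />'
    "</head><body>Hello</body></html>"
)

directives = robots_directives(page)
print(directives)
```

A page with no such tag returns an empty dict, which makes it simple to spot pages you meant to exclude but forgot to tag.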

The Idea of "Removing" Content

This sort of turns the whole notion of "deleting content from the Internet" on its head. Technically, if you remove all of your own links to a page on your site, and you remove it from Google Search and the Internet Archive using the robots.txt technique, the page is for all intents and purposes "deleted" from the Internet. The cool thing though is that if there are existing links to the page, those links will still work and you won't trigger 404 errors for those visitors.

It's a more "gentle" approach to removing content from the Internet without entirely messing up your site's existing link popularity throughout the Internet. In the end, how you go about managing what content gets collected by search engines and the Internet Archive is up to you, but always remember that despite what people say about the lifespan of things that get posted online, it really is completely within your control.